Many PC components and peripherals can be overclocked, allowing them to operate beyond their factory settings. It's most common with CPUs and graphics cards, but overclocking gaming monitors is also fairly widespread. Sometimes this is done at the factory: some displays sold with a 144Hz refresh rate use panels with a native refresh rate of 120Hz.

Overclocking is also a way for users to delay upgrades or put more of their budget toward other components, since many monitors can be pushed to higher refresh rates. This has been possible since the days of CRT monitors, but your mileage has always varied with the quality of the internal components, the panel itself, and any cooling.

Because monitor overclocking has been around for ages, a mythology of sorts has developed, with some incorrect advice presented as universally accepted truth. It's time for some mythbusting, and for dispelling the notion that these practices are safe on modern monitors. As with CPUs and GPUs, monitors generally leave the factory already running at or near the limits of what the display can handle, and trying to overclock them can cause irreparable damage.

4 It's generally safe if you gradually increase the frequency

Damage can come at any point, and depends on many other factors

The most common myth about monitor overclocking is that it's safe if you take it slowly and raise the refresh rate in small increments. For well-made monitors with good components, adequate cooling, and stable firmware, that might be true. However, there's an element of survivorship bias in this myth: you mostly hear from people whose monitors survived the process.

In reality, even small increments can damage some monitors, especially those with budget components or those already operating near their limits. The damage could be immediate, or it could creep in slowly over time. Either way, your monitor will meet a premature end.

If your monitor is high-end, the components might be better, but the factory has probably already pushed it to its advertised refresh rate, so any gains will be marginal at best. At the other end of the scale, you might be tempted to buy a budget monitor and overclock it. But if you're shopping on a budget, you don't have money to waste, which makes a potentially damaging procedure an even bigger risk.

Related

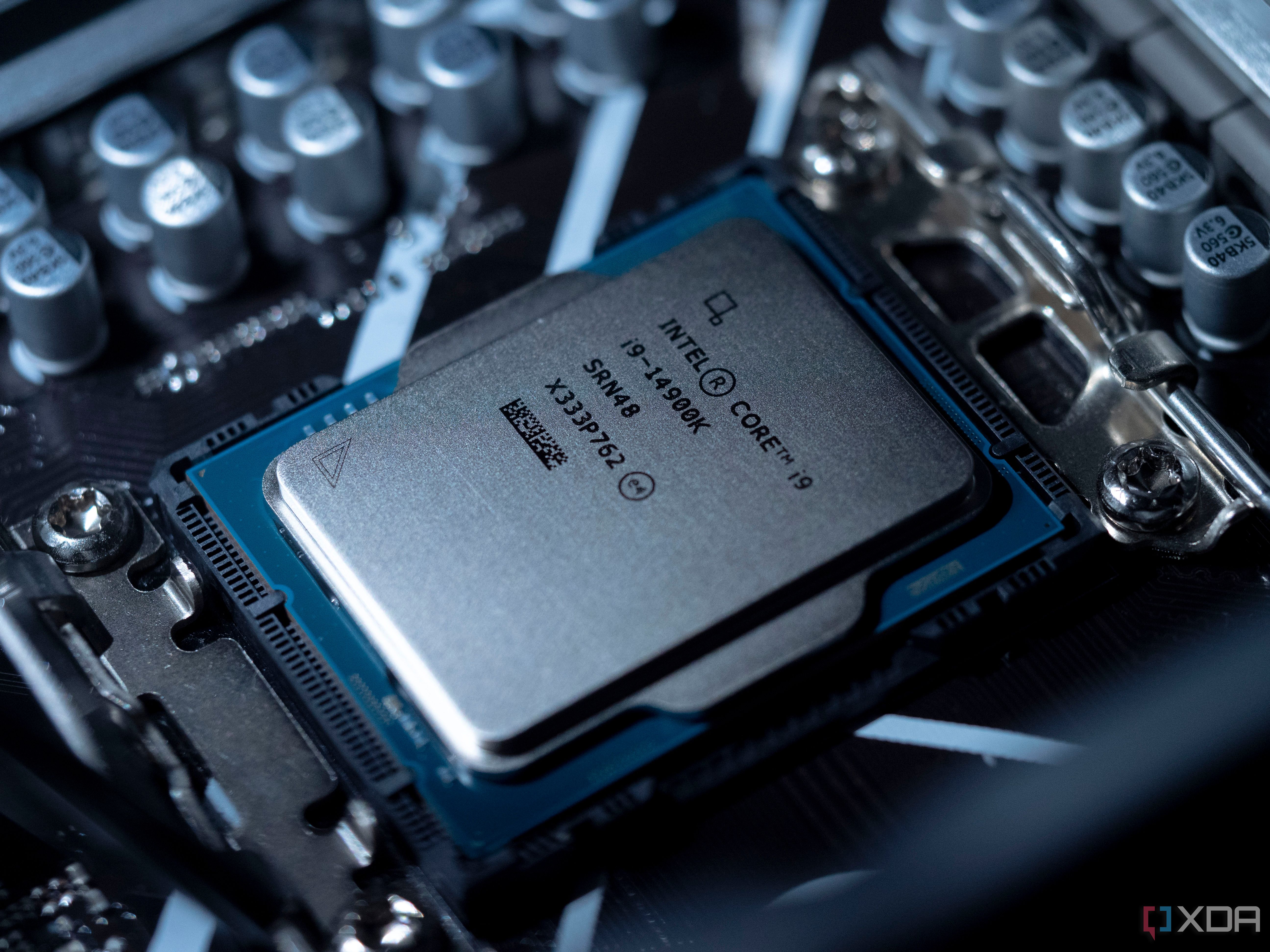

How to safely overclock your CPU: A beginners guide to unlock more performance

If you want to get into overclocking, whether for your Intel or AMD CPU, here's where to start.

3 You always get a warning before permanent damage

Visual glitches can come after damage has been done, not before

When you overclock your monitor to higher refresh rates and push past the limits of the scaler, the panel, or the mainboard, you're likely to see visual glitches such as static, color distortion, or parts of the image that won't render correctly. That's if you're lucky. Some panels simply fail once they pass their limits, giving you a black screen and no warning to dial things back down.

Back in the early days of digital displays, I spent hours tweaking 60Hz IPS panels to see if they could get anywhere near the 120Hz+ refresh rates I'd seen on imported Korean monitors. I failed miserably, because the panel I was using wasn't of sufficient quality. I also ended up damaging a large section of the screen, which didn't return to normal when I removed the custom settings. Thankfully, I was able to exchange it for a new one. Otherwise, I would have had to shop for another expensive display.

2 All monitors degrade equally

Quite the opposite, as they have differing component quality and cooling systems

Another big myth is that every panel or monitor degrades in the same way, so you can judge your own monitor by other people's experiences. You can get a rough idea of what to expect, but there will always be outliers that slipped through factory quality control, and some retail units may degrade faster than the norm or in different ways.

Again, budget monitors and TVs are more likely to degrade unpredictably because they ship under much looser quality requirements. Higher-end or factory-calibrated monitors might be more consistent, but they can still have individual flaws that the testing routine missed, and every panel is unique.

Monitors with thicker housings and vents might be less likely to fail, since a larger chassis can suggest better cooling of the panel, components, and backlight. That could also lull you into complacency, though, because there's no guarantee that the parts inside are of any better quality.

The monitor's power supply may already be running close to its maximum, and an overclock could push it into failure. Alternatively, the chips inside could overheat and degrade, or the panel itself could degrade in unusual ways. The backlight might not hold up either, and we've all seen LED strips burn out or change color due to heat.

1 What you risk by overclocking your monitor

None of this is worth the risk unless you can afford to replace your display

Your CPU, RAM, and GPU have something your monitor doesn't: safeguards. If you overclock your CPU too far, the system may fail to boot, but it will then typically fall back to default settings, leaving no permanent damage. It's true that overclocking can permanently damage a CPU, but the chances are low on modern processors because they have multiple safeguards for current and voltage.

Your monitor doesn't have those safeguards and isn't really intended to be run outside its factory settings. Overclocking it for more performance can cause any of the following issues, and could even stop the panel from turning on:

- Accelerated wear from heat

- Permanent visual defects

- A voided warranty

- Reduced responsiveness if the scaler is overloaded

These risks were real enough when CRT and TN panels were the main options, but now we also have OLED to worry about. Running an OLED at its factory settings can already lead to burn-in and other heat-related problems, so imagine what overclocking would do. It just isn't worth the risk anymore, not when high refresh rate panels are the norm and 75Hz or higher is the baseline for even budget monitors.

To me, it's not worth the risks of overclocking your monitor

PC monitors are among the most expensive parts of any build, and a good one will easily last through multiple builds, well past the end of its warranty period. Both of my current monitors already have high refresh rates, one at 144Hz and the other at 165Hz. Even if I wanted to overclock them, I'm not sure I could. They already rely on Display Stream Compression (DSC) because they're close to DisplayPort 1.4's bandwidth limit. Using a custom resolution to get around the limits set by the drivers might damage something inside the monitors, and I don't want to risk that for a little extra. Besides, most of the games I play can't even reach 165 FPS, because maxing out the 1440p ultrawide I use for gaming takes significant graphical power.
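To see why a high refresh rate ultrawide can bump against DisplayPort 1.4, the rough arithmetic below compares uncompressed pixel bandwidth with the link's usable payload. This is a minimal sketch under stated assumptions, not a timing calculator. It assumes an HBR3 link (8.1 Gbit/s per lane, four lanes, 8b/10b encoding) and uses a hypothetical `blanking_overhead` factor in place of real display timings. The 3440x1440 resolution is only an illustrative ultrawide, not a claim about the author's specific monitors.

```python
# Rough estimate of uncompressed video bandwidth vs. DisplayPort 1.4's usable payload.
# Assumptions (illustrative, not from the article): HBR3 at 8.1 Gbit/s per lane,
# 4 lanes, 8b/10b line coding, and a hypothetical blanking_overhead factor
# that stands in for the extra bandwidth consumed by blanking intervals.

DP14_LANES = 4
HBR3_GBPS_PER_LANE = 8.1
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 data bits per 10 bits on the wire

DP14_PAYLOAD_GBPS = DP14_LANES * HBR3_GBPS_PER_LANE * ENCODING_EFFICIENCY  # about 25.92


def required_gbps(width, height, refresh_hz, bits_per_pixel, blanking_overhead=1.0):
    """Uncompressed bandwidth in Gbit/s; blanking_overhead > 1.0 approximates blanking."""
    return width * height * refresh_hz * bits_per_pixel * blanking_overhead / 1e9


def needs_dsc(width, height, refresh_hz, bits_per_pixel, blanking_overhead=1.0):
    """True if the estimated stream would not fit in DP 1.4's payload without compression."""
    needed = required_gbps(width, height, refresh_hz, bits_per_pixel, blanking_overhead)
    return needed > DP14_PAYLOAD_GBPS


if __name__ == "__main__":
    # Illustrative 3440x1440 ultrawide at 165Hz with an assumed 8% blanking overhead.
    for bpp in (24, 30):  # 8-bit and 10-bit RGB
        gbps = required_gbps(3440, 1440, 165, bpp, blanking_overhead=1.08)
        fits = not needs_dsc(3440, 1440, 165, bpp, blanking_overhead=1.08)
        print(f"{bpp} bpp: {gbps:.2f} of {DP14_PAYLOAD_GBPS:.2f} Gbit/s, fits uncompressed: {fits}")
```

Under these assumptions, the 8-bit case comes to about 21.2 Gbit/s, while the 10-bit case comes to about 26.5 Gbit/s. That is just over the roughly 25.92 Gbit/s payload, so pushing the refresh rate any higher leaves even less headroom. Real blanking depends on the timing standard the monitor uses, so treat the overhead factor as a placeholder.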
