Hamlin has been in the tech field for over seven years. Since 2017, his work has appeared on MakeUseOf, OSXDaily, Beebom, MashTips, and more. He served as the Senior Editor for MUO for two years before joining XDA. He uses a Windows PC at his desk and a MacBook when traveling, but dislikes some of the quirks of macOS. You're more likely to catch him at the gym or on a flight than anywhere else.

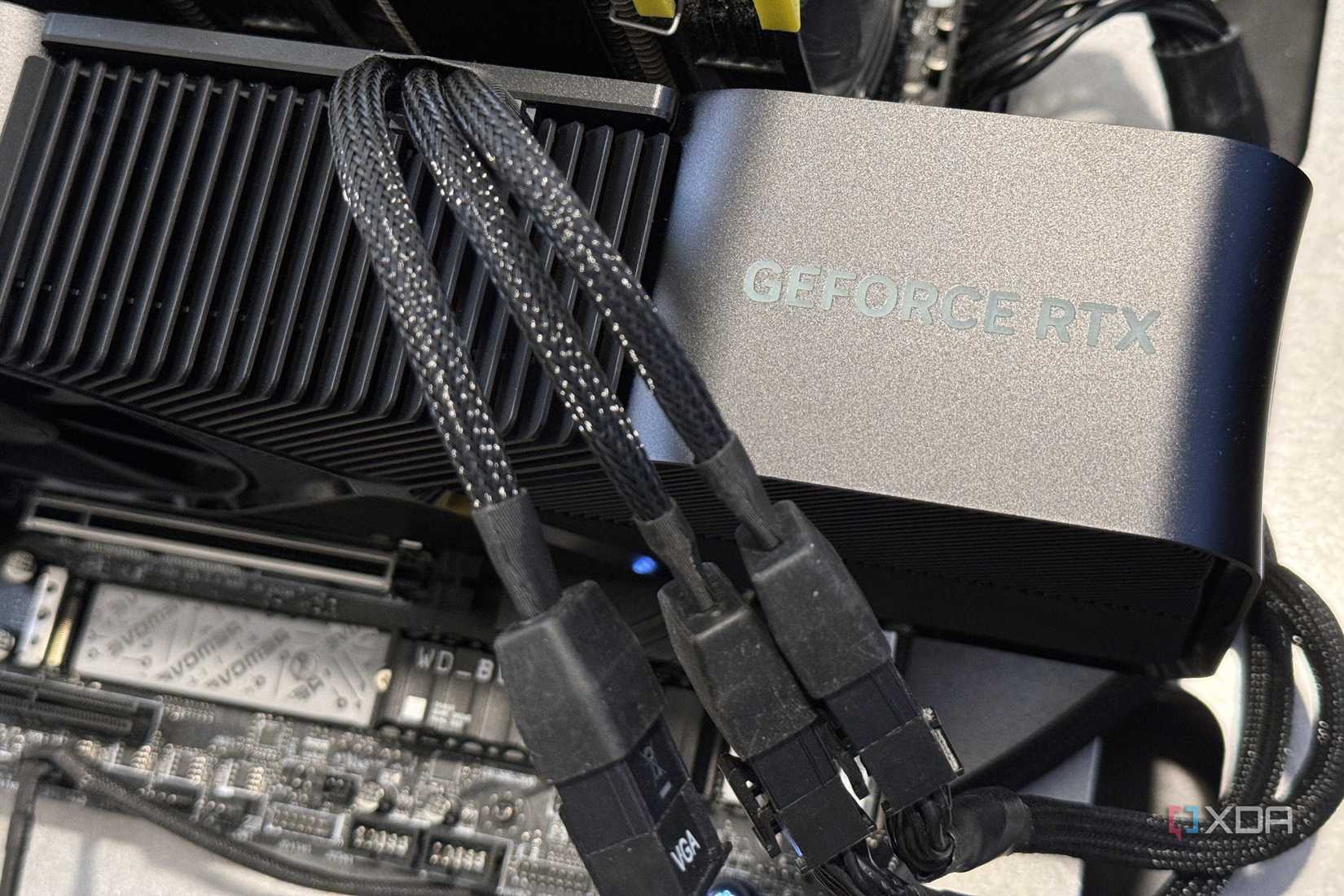

It's hard to resist the temptation to squeeze every last bit of performance out of a graphics card, especially when you've splurged well over a grand on a high-end model. After all, why leave free performance on the table when your GPU is capable of more with just a couple of quick tweaks? That was exactly my mindset when I decided to overclock my RTX 4090. As I expected, my average frame rates went up across all the games I tested, but the downsides quickly made me realize it wasn't worth it.

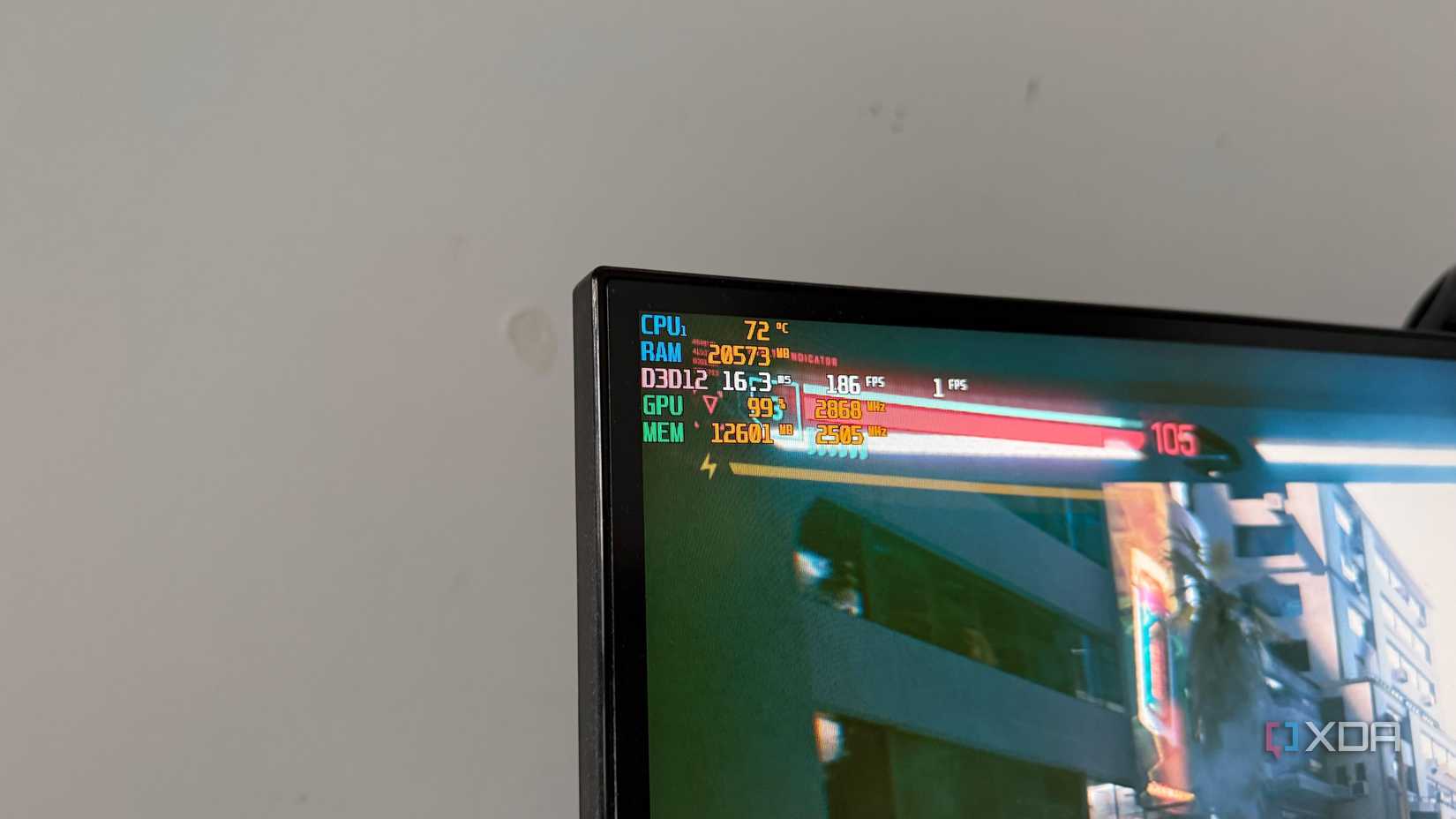

Of course, pushing your GPU's clock speeds beyond stock settings will deliver some performance uplift, but the costs become just as clear once you start monitoring your hardware. I had to deal with higher temperatures, louder fans, and extra power draw, all of which made those FPS gains feel insignificant. I thought I was improving my gaming experience by chasing higher frame rates, but in reality, I was creating unnecessary problems that could affect my GPU in the long term.

Higher temperatures

Those FPS gains really aren't worth my GPU running hotter

As much as I love chasing high frame rates, I also want my graphics card to stay relatively cool. I'm the kind of person who starts panicking the moment temperatures creep past 80C while gaming. Naturally, this was the first thing I noticed after I overclocked my graphics card using MSI Afterburner. At stock settings, my RTX 4090 never exceeded 75C no matter what game I played. But after increasing the core and memory clock speeds, it touched 80C within minutes.

Sure, my GPU wasn't throttling at this point, but I knew that if I kept these settings, I'd eventually push it closer to its thermal throttling limit. Since I play games for several hours a day, that extra heat would likely accelerate thermal paste degradation in the long run. The last thing I want to do is take the card apart, risking the warranty, just to reapply fresh paste. If I had to choose between a 100–150MHz bump in GPU clock speed and keeping its temperature consistently below 75C, I'd take the lower temps every single time.

Louder fans

You could argue that higher GPU temperatures aren't obvious unless you're monitoring them with software like MSI Afterburner, but the truth is, the fans tell me everything I need to know. With my RTX 4090, at least, the fans spin up more aggressively as soon as temperatures approach 80C. And if I'm not wearing a headset, the extra noise becomes impossible to ignore, especially during quieter moments in single-player games.

The thing is, when you're used to your PC's normal noise levels, any sudden increase in fan speed stands out immediately. And it's not just about the noise. The fans ramping up serve as a constant reminder that my GPU is running hotter than it should, and that makes me want to check the exact temperature in my monitoring tools. At that point, all I'm thinking about is the GPU temperature, not the marginal FPS gains I get from the overclock. If anything, this kills the immersion and makes me regret pushing my card beyond its stock limits.

Increased power draw

Consuming more watts for minimal FPS gains doesn't feel like a win

Modern GPUs, especially high-end models like the RTX 4090 and 5090, are very power-hungry even at stock settings. For instance, my RTX 4090 draws 350–400 watts when I play AAA games. After I overclocked it, however, I noticed that the power draw often exceeded 450W and sometimes even reached 500W, depending on the game. A 50- to 100-watt increase may not seem like a lot at first glance, but at the top end (400W to 500W), that's a 25% jump in GPU power consumption.
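To sanity-check that percentage, the arithmetic fits in a few lines of Python. This sketch uses only the wattages quoted above; `percent_increase` is a hypothetical helper written for illustration, not part of any monitoring tool.

```python
def percent_increase(before_w: float, after_w: float) -> float:
    """Relative increase from before_w to after_w, in percent."""
    return (after_w - before_w) / before_w * 100.0

# Stock draw and overclock increase figures quoted in the article (watts).
stock_draws_w = [350, 400]
extra_draws_w = [50, 100]

for stock in stock_draws_w:
    for extra in extra_draws_w:
        pct = percent_increase(stock, stock + extra)
        print(f"{stock} W + {extra} W -> {stock + extra} W: +{pct:.1f}%")
```

Depending on the baseline, the same 50–100W jump works out to roughly 12.5% to 28.6%; the 25% figure corresponds to going from 400W to 500W.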

What makes this increase a tough pill to swallow is how little it did to improve gaming performance. At best, the FPS gains were around 5–7%, which is barely noticeable, especially when most games already run well above 60FPS. I felt like my card was wasting electricity, generating more heat, and stressing my PC for almost no real-world benefit. That extra power draw only made my GPU less efficient, and I'm sure that if I had kept those settings, my electricity bill would have slowly crept up over time.
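The efficiency point can be made concrete with a quick frames-per-watt calculation. This sketch assumes the 5–7% FPS uplift and the 400W stock versus 450W/500W overclocked figures quoted above; `efficiency_change` is a hypothetical helper for illustration, and the output is arithmetic on those numbers, not a new measurement.

```python
def efficiency_change(fps_gain: float, stock_w: float, oc_w: float) -> float:
    """Percent change in frames per watt after an overclock.

    fps_gain is the fractional FPS uplift (0.05 for 5%). The baseline
    frame rate cancels out, so no absolute FPS figure is needed.
    """
    return ((1 + fps_gain) * stock_w / oc_w - 1) * 100.0

# FPS uplift and power figures quoted in the article.
for gain in (0.05, 0.07):
    for stock_w, oc_w in ((400, 450), (400, 500)):
        change = efficiency_change(gain, stock_w, oc_w)
        print(f"+{gain:.0%} FPS, {stock_w} W -> {oc_w} W: {change:+.1f}% FPS per watt")
```

Under those assumptions, frames per watt drop by roughly 5% to 16%, so the overclock makes the card measurably less efficient.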

Stability issues

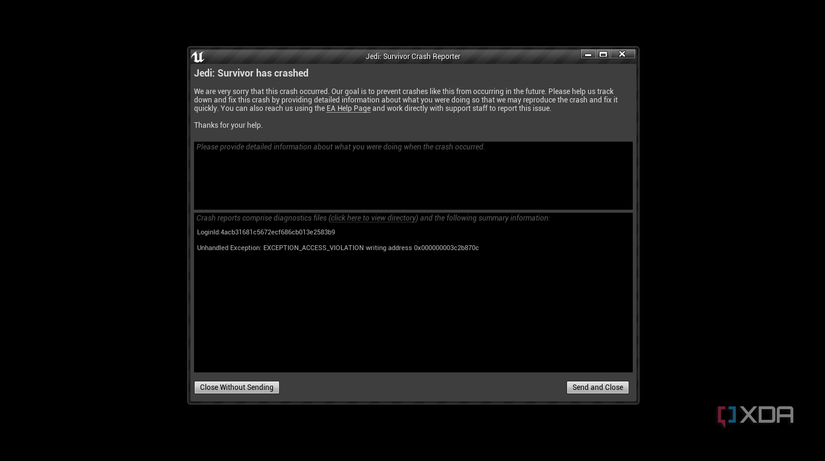

I can't enjoy gaming when I'm constantly worried about crashes

What frustrated me more than the thermals when overclocking my RTX 4090 was the random crashes I had to deal with, even after spending time testing a few games for stability. At first, my settings seemed perfect since I could play all my favorite competitive FPS titles without a single crash. But later on, when I installed newer and more demanding AAA titles, the problems started to show, especially during extended gaming sessions.

Nothing kills the immersion faster than a game crashing randomly while you're in the middle of an important mission. That was my experience when I played Assassin's Creed Shadows earlier this year. The crashes were so unpredictable that I eventually gave up and reverted to stock settings. At that point, I stopped caring about the extra frames I got from the overclock, because stability was worth far more than a minor bump in performance. If marginal FPS gains come at the cost of instability, you're actually hurting your experience, not improving it.

Undervolting gave me what overclocking couldn't

Looking back, I don't regret experimenting with overclocking because it showed me exactly what I didn't want out of my GPU. Sure, overclocking made more sense at a time when high-end GPUs weren't drawing over 400 watts, but modern cards like the RTX 4090 and 5090 make it far less worthwhile. When your card is already power-hungry, chasing 5% more FPS comes at the cost of higher temps, louder fans, and random crashes that take away from your gaming experience.

That's exactly why I undervolt my GPU these days. I may not get an FPS boost, but I get a more efficient GPU that runs cooler and quieter. Yes, stability can still be a challenge, but if you take the time to tune your settings, you'll end up with a GPU that gives you peace of mind in the long run. If anything, lower temps help your GPU sustain its boost clock speeds for longer, which makes performance more consistent during long gaming sessions. Unlike with overclocking, there are almost no real trade-offs.
