I have been self-hosting several apps in Docker containers on my home server, mostly for the convenience. Taking a leaf out of the books of my XDA colleagues, who use Docker in their home labs for productivity or streaming media, I started experimenting with containers months ago. I gather that many people want to start, or have just started, using Docker containers to self-host different apps. Like many, I started off with the Docker CLI to test some of the apps. However, after a few weeks, I found myself using an alternative method, Docker Compose, to install containers on my home server. Here are the reasons why I prefer using Docker Compose to deploy and manage containers.

5 Saving time while setting up multiple containers

Deploying all of them together

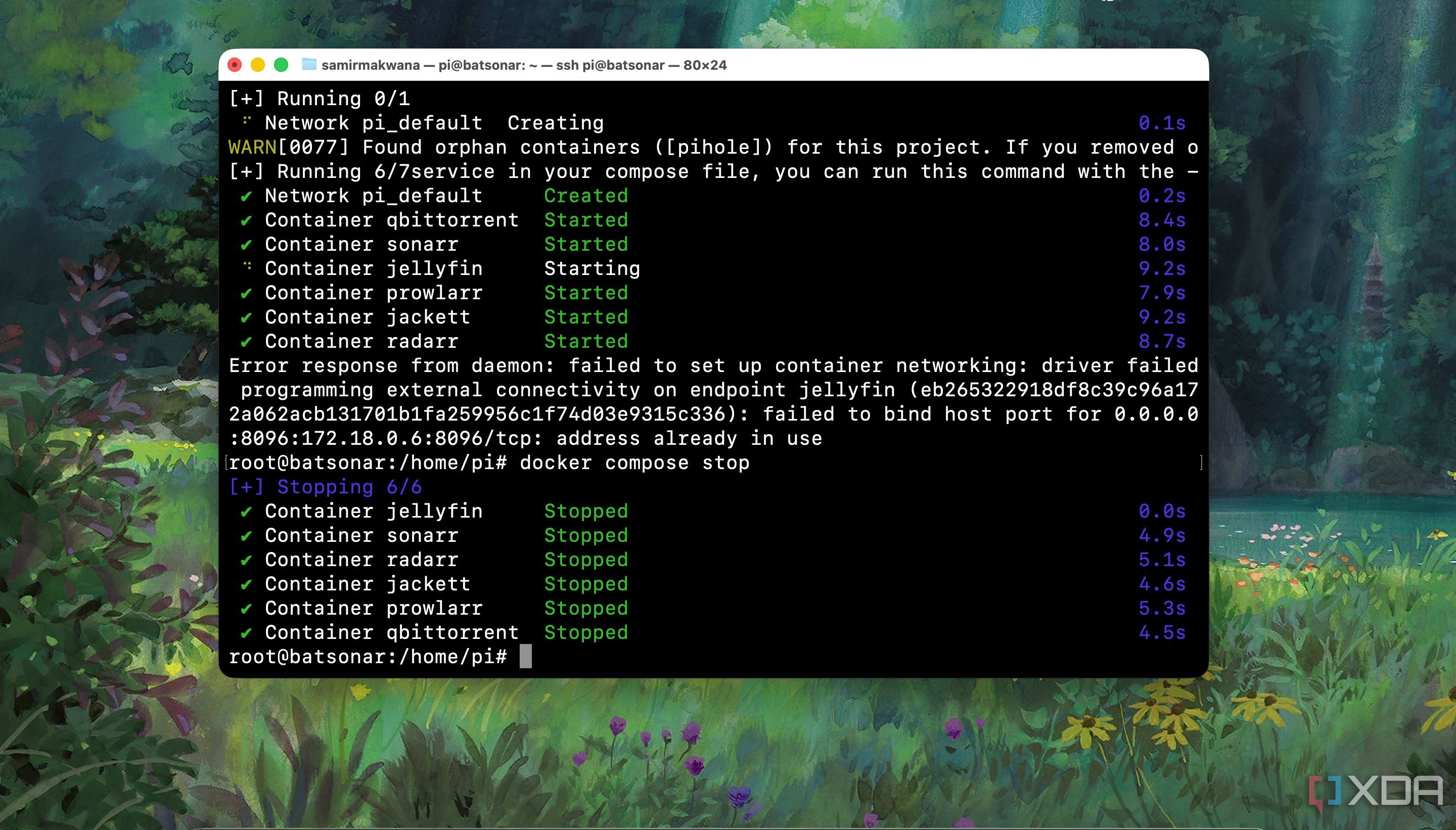

When I started with Docker, I tried several apps as containers on my home lab machine. Back then, I deployed every app one container at a time, which took a considerable amount of time. I remember how cumbersome it was to type out long commands in the terminal just to deploy a single container. Even after copying and pasting those commands from a project’s GitHub page, I still had to tweak them to fit my configuration. Looking back, it was a tedious activity, and I could’ve planned better.

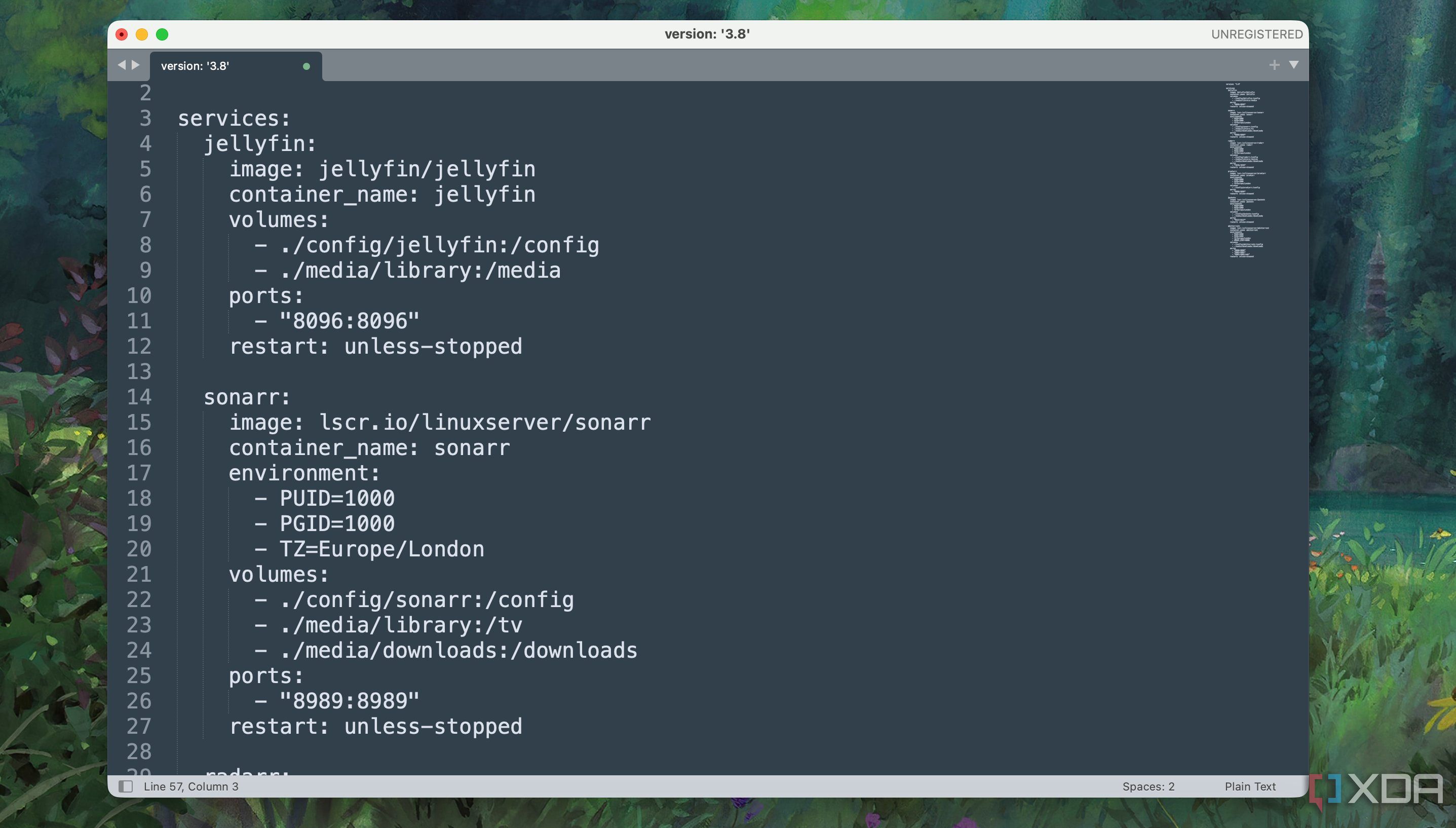

With Docker Compose, I now write a single YAML file containing the configuration of all my containers in a text editor like Sublime Text. My experience with Docker also helps me tweak the values to match my requirements, so the file rarely throws an error when I deploy it.
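To illustrate the difference, here is a minimal sketch of one service in a Compose file, with a roughly equivalent `docker run` command shown as a comment. The file name, the host paths under `/srv`, and the choice of Jellyfin are assumptions made for illustration; they are not taken from the setup described here.

```yaml
# compose.yaml (file name and host paths are illustrative assumptions)
#
# Roughly equivalent one-off CLI command:
#   docker run -d --name jellyfin --restart unless-stopped \
#     -p 8096:8096 \
#     -v /srv/jellyfin/config:/config \
#     -v /srv/media:/media:ro \
#     jellyfin/jellyfin
services:
  jellyfin:
    image: jellyfin/jellyfin
    container_name: jellyfin
    restart: unless-stopped
    ports:
      - "8096:8096"                    # host:container, Jellyfin's default web port
    volumes:
      - /srv/jellyfin/config:/config   # hypothetical host path for app settings
      - /srv/media:/media:ro           # hypothetical media library, mounted read-only
```

Every flag from the command line becomes a key in the file, so the configuration can be reviewed and edited in one place instead of being retyped.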

4 Simplifying the setup with select containers

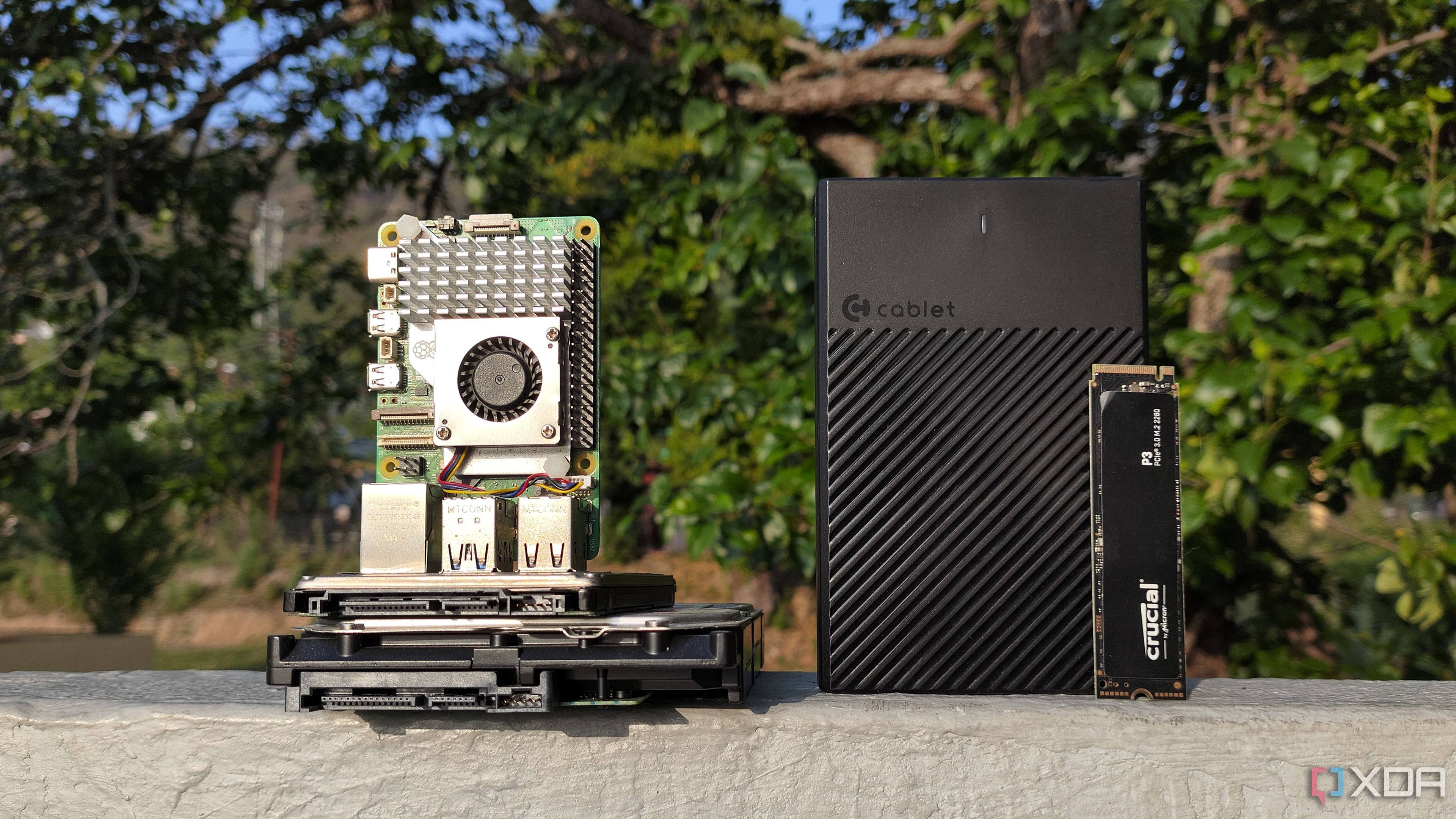

I often use a Raspberry Pi as one of my home server machines to try out different apps in Docker containers. I’ve realized that running many containers at once on such an SBC isn’t a bright idea because they consume significant system resources. Lately, I have turned the Pi into a media server and collected a stack of apps, including Jellyfin, Sonarr, Radarr, and Audiobookshelf, into a single YAML file. That made it easier to deploy all the containers in this media server stack together and get them running in no time.
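A media stack along those lines could be sketched as follows. The directory layout, the `/mnt/media` paths, the user and group IDs, the timezone, and the host port chosen for Audiobookshelf are all assumptions for illustration; the images and container ports are the ones these projects commonly publish, but check each project's documentation before relying on them.

```yaml
# media-stack/compose.yaml (a sketch; paths, IDs and timezone are assumptions)
services:
  jellyfin:
    image: jellyfin/jellyfin
    restart: unless-stopped
    ports:
      - "8096:8096"
    volumes:
      - ./jellyfin/config:/config
      - /mnt/media:/media:ro            # hypothetical media library

  sonarr:
    image: lscr.io/linuxserver/sonarr
    restart: unless-stopped
    environment:
      - PUID=1000                       # assumed host user ID
      - PGID=1000                       # assumed host group ID
      - TZ=Etc/UTC                      # assumed timezone
    ports:
      - "8989:8989"
    volumes:
      - ./sonarr/config:/config
      - /mnt/media/tv:/tv

  radarr:
    image: lscr.io/linuxserver/radarr
    restart: unless-stopped
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=Etc/UTC
    ports:
      - "7878:7878"
    volumes:
      - ./radarr/config:/config
      - /mnt/media/movies:/movies

  audiobookshelf:
    image: ghcr.io/advplyr/audiobookshelf
    restart: unless-stopped
    ports:
      - "13378:80"                      # arbitrary host port mapped to the app's port 80
    volumes:
      - ./audiobookshelf/config:/config
      - ./audiobookshelf/metadata:/metadata
      - /mnt/media/audiobooks:/audiobooks
```

Running `docker compose up -d` in the directory that holds this file starts all four services in one step.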

Whenever I want to try out a new app, I run it as a separate container and don’t let it disturb my current setup. Also, I test the container on a VPS (virtual private server) before it makes it to the Raspberry Pi.

3 Managing multiple containers together

Simple commands to tame them all

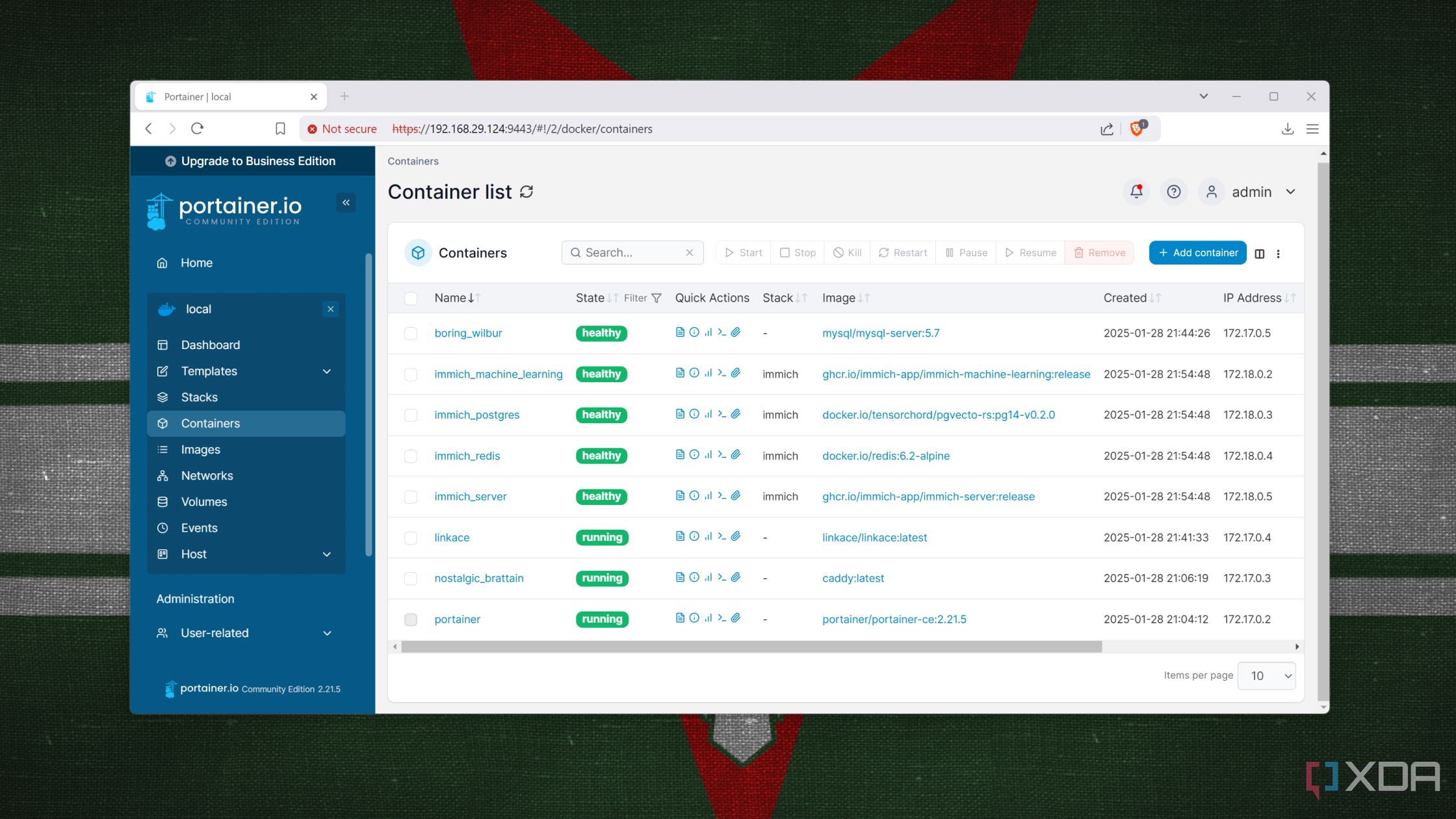

Previously, it took me a lot of back-and-forth to troubleshoot each container when something went wrong with one of them. Now, a single YAML configuration file serves as a quick and easy reference, which also makes managing the containers easier. Every time I have to redeploy the containers, I run one simple command: docker compose up -d. Stopping or building containers follows the same pattern: docker compose stop and docker compose build, respectively. I mostly end up using these basic commands to orchestrate and manage the containers together.

Whenever one of the containers has an issue, checking its logs (docker compose logs) helps me find what to fix in the configuration. I then update the YAML configuration file and recreate the containers.
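One way to keep these commands at hand is to note them as comments at the top of the Compose file itself. The sketch below assumes a hypothetical service called `myapp` that is built from a local Dockerfile; `docker compose build` only affects services that have a `build:` key, while services that use a prebuilt `image:` are updated with `docker compose pull` instead.

```yaml
# Day-to-day commands, run from the directory that holds this file:
#   docker compose up -d          # create or recreate containers in the background
#   docker compose stop           # stop containers without removing them
#   docker compose build          # rebuild images for services with a `build:` key
#   docker compose logs -f myapp  # follow the logs of one service
#   docker compose ps             # list the stack's containers and their state
services:
  myapp:                     # hypothetical service name
    build: ./myapp           # hypothetical directory containing a Dockerfile
    restart: unless-stopped
```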

2 Better volume management and networking

Safeguarding data and communication

Mapping specific directories on my home server as volumes helps me retain the data I use with the containers. So even when a container crashes or restarts, I still have its data. When I recreate a container, the same host directory is mapped into it again, so the new container picks up the existing data.
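In a Compose file, such mappings live under the `volumes:` key of each service. The sketch below uses Audiobookshelf as an example; the host paths under `/srv` and the volume name `abs_metadata` are assumptions for illustration.

```yaml
services:
  audiobookshelf:
    image: ghcr.io/advplyr/audiobookshelf
    restart: unless-stopped
    volumes:
      # Bind mounts: host path on the left, container path on the right
      - /srv/audiobookshelf/config:/config
      - /srv/media/audiobooks:/audiobooks
      # Named volume: Docker manages the storage location on the host
      - abs_metadata:/metadata

volumes:
  abs_metadata:              # hypothetical volume name
```

Bind-mounted directories stay on the host regardless of what happens to the container. Named volumes also survive `docker compose down`, unless the `-v` flag is passed to remove them.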

Containers started individually with the Docker CLI all land on the default bridge network, where they can reach each other only by IP address. However, those IP addresses can change when the containers restart. To let the containers talk to each other by name through Docker's built-in DNS, I define a custom bridge network for all of them. Declaring a custom network helped when I tried the *arr stack with apps like Readarr, Radarr, Lidarr, and others.
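A custom bridge network can be declared once at the bottom of the Compose file and attached to each service. This is a minimal sketch; the network name `arr_net` is an assumption, and the ports in the comments are the defaults these apps usually listen on.

```yaml
services:
  radarr:
    image: lscr.io/linuxserver/radarr
    restart: unless-stopped
    networks:
      - arr_net              # reachable by other members as http://radarr:7878

  lidarr:
    image: lscr.io/linuxserver/lidarr
    restart: unless-stopped
    networks:
      - arr_net              # reachable by other members as http://lidarr:8686

networks:
  arr_net:                   # hypothetical network name
    driver: bridge
```

On a user-defined network like this, the service name doubles as a hostname, so no IP address needs to be hard-coded in any app's settings. Compose also creates a default network per project on which services resolve each other by name, so an explicit network is mostly useful for control over naming or for sharing it across several Compose files.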

1 Updating all the containers in one go is easy

Although manually, it's not much of a hassle

An underrated benefit of Docker Compose is the convenience of keeping the stack of containers up to date. It doesn’t happen automatically, but you can always run a simple command - docker compose pull && docker compose up -d. I run it once a month and take care to update the relevant containers while their data remains intact in the respective volumes.
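What that command updates depends on the image tag in the Compose file. The sketch below assumes a Jellyfin service with a hypothetical config path; `docker compose pull` fetches whatever the listed tag currently points to, and `docker compose up -d` then recreates only the containers whose image or configuration has changed.

```yaml
services:
  jellyfin:
    # `docker compose pull` fetches the current image for this tag;
    # if no tag is given, Docker assumes `latest`.
    image: jellyfin/jellyfin:latest
    restart: unless-stopped
    volumes:
      - ./jellyfin/config:/config   # hypothetical path; data stays on the host across updates

# Periodic update, run from the directory that holds this file:
#   docker compose pull && docker compose up -d
# Optional cleanup of superseded, untagged images afterwards:
#   docker image prune
```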

Personally, I prefer updating the containers manually and find it more convenient for my home lab setup than automated options like Watchtower and Diun. That’s because I use the containers to run apps for streaming media, not for development.

A must-have tool to juggle Docker containers

For me, Docker Compose is the best way to deploy, manage, and update multiple containers, especially in a home lab. A common YAML file also streamlines installation, since I can reuse it to run the same stack of containers on other machines. I am still far from running so many machines that I would need to seek refuge in Docker Swarm to orchestrate all of them. Besides, I haven’t given up on the Docker CLI entirely and continue using it to test single containers temporarily.
