There's one sentiment that's been consistent among PC gamers for nearly two years: 8GB graphics cards just don't cut it for a modern gaming rig. Games have gotten more demanding, and even at 1080p, there are titles that can fully saturate an 8GB frame buffer. Running out of VRAM doesn't always look the same, though.

It's a tricky issue to drill down on due to how VRAM works, how games utilize VRAM, and how a stuffed frame buffer manifests as performance or graphical issues in the games you play. If you run out of VRAM, you're going to have a poor gaming experience. The range of "poor" is just broad.

Understanding VRAM: a quick primer

Your GPU's memory is more than its capacity

I have some examples of what running out of VRAM looks like in real games, but it's important to understand what's going on behind the scenes first. Your GPU is its own processing unit. It has a processor, the GPU, along with a board and dedicated memory. Just like your CPU, there's a system of storage running through your GPU. A bit of cache sits right next to the processing unit, which is extremely fast but has limited capacity. Your VRAM has more capacity, but it's slower. Just as data flows from your storage to your RAM to your CPU cache, data flows from your storage to your VRAM to your GPU cache.

That fact alone already complicates the idea that 8GB graphics cards aren't enough for modern games. For instance, the RTX 4060 Ti and RTX 3060 Ti both have 8GB of memory, but the RTX 4060 Ti includes 32MB of L2 cache, eight times as much as the RTX 3060 Ti. A larger cache means the GPU has to reach out to VRAM less often. Both of these cards are limited to 8GB of memory, but the limitations of that memory configuration look different.

Further complicating matters is how VRAM usage is reported. If you're playing a game, your VRAM should be used exclusively for that game, minus a small bit being reserved for system functions. Some game engines are designed with this idea in mind. A great example of that is the Infinity Ward engine used in Call of Duty releases. As you can see from a performance analysis of the recent Call of Duty: Black Ops 6, the game can consume over 10GB of VRAM at 1080p. Despite that, cards with more VRAM like the RX 6700 XT don't offer a performance advantage over cards with less, such as the RTX 3080.

The Infinity Ward engine, and many others, allocate as much VRAM as possible, even if it isn't being used. If you have 8GB of VRAM available, a game like Call of Duty: Black Ops 6 will reserve all of it at the highest graphics settings. That's a good thing, too. By allocating the memory ahead of time, games can bypass issues that can arise when trying to allocate and fill a section of VRAM on the fly. Instead of constantly adjusting their VRAM usage, many games will reserve as much memory as they might need, and if that figure happens to be over the amount of VRAM you have on your GPU, it'll look like your capacity is maxed out.
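The gap between reserved and actively used memory can be sketched in a few lines of Python. This is only an illustration: the function name, the labels, and the example numbers are hypothetical, and most monitoring overlays report allocation only, so the working-set figure is an assumed input rather than something you can read off a tool.

```python
def classify_vram(capacity_gb, allocated_gb, working_set_gb):
    """Label a VRAM reading (hypothetical helper, not a real tool's API).

    capacity_gb:    physical VRAM on the card
    allocated_gb:   what the game has reserved (what overlays tend to show)
    working_set_gb: what is actually touched while rendering (assumed known)
    """
    if working_set_gb > capacity_gb:
        # Data the GPU really needs no longer fits in VRAM.
        return "over capacity"
    if allocated_gb >= capacity_gb:
        # The reading looks maxed out, but the frame still fits.
        return "full on paper, fits in practice"
    return "headroom"


# An 8GB card where the game reserved everything but only touches 6.5GB.
print(classify_vram(capacity_gb=8, allocated_gb=8, working_set_gb=6.5))
```

Only the first case is the one that hurts performance; the second is what a maxed-out reading often turns out to be.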

Regardless, there are situations where you will truly saturate your frame buffer, meaning all of your VRAM is actually in use while rendering frames. In this situation, the data your GPU needs doesn't just disappear. It overflows into system memory, which alone reduces your performance and adds latency. On top of that, your CPU now has to step in to feed that data to the GPU, causing even more latency. When you truly run out of VRAM, it usually manifests as stuttering or single-digit frame rates. But not always.
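To get a feel for why spilling into system memory is so costly, here is a rough back-of-the-envelope sketch. The bandwidth figures are ballpark assumptions chosen for illustration (theoretical peaks, not measurements), and real behavior depends on the card, the platform, and how the driver handles the overflow.

```python
# Ballpark bandwidth figures assumed for illustration only, not measurements.
VRAM_GB_PER_S = 272.0  # roughly a mid-range card's GDDR6 (assumption)
PCIE_GB_PER_S = 16.0   # roughly a PCIe 4.0 x8 link, theoretical peak (assumption)


def transfer_ms(size_gb, bandwidth_gb_per_s):
    """Time in milliseconds to move size_gb at the given bandwidth."""
    return size_gb / bandwidth_gb_per_s * 1000.0


spill_gb = 1.0  # hypothetical amount of data that no longer fits in VRAM
vram_ms = transfer_ms(spill_gb, VRAM_GB_PER_S)
pcie_ms = transfer_ms(spill_gb, PCIE_GB_PER_S)

print(f"From VRAM:      {vram_ms:.1f} ms")
print(f"Over PCIe:      {pcie_ms:.1f} ms")
print(f"Slowdown ratio: {pcie_ms / vram_ms:.0f}x")
```

Under these assumed numbers, pulling 1GB across the bus takes 62.5 ms, several times the roughly 16.7 ms you have per frame at 60 fps.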

Handling VRAM limitations gracefully

Limitations? What limitations?

Just because a game calls for more than 8GB of VRAM doesn't mean you'll have a bad experience on an 8GB graphics card. A great recent example of that is Doom: The Dark Ages, which puts on display advancements in rendering that reduce the pressure on your graphics card's VRAM. That's because of the texture streaming system in the game. Rather than a set texture quality level, the id Tech 8 engine uses a texture pool that you're able to adjust the size of. You're basically telling the game how much VRAM it can set aside exclusively for streaming textures.
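The idea of a fixed-size texture pool can be sketched as a small Python class. This is not how id Tech 8 is implemented; it's a generic least-recently-used pool under a memory budget, and the class name, method names, and texture sizes are all hypothetical.

```python
from collections import OrderedDict


class TexturePool:
    """Fixed-budget texture pool with least-recently-used eviction (illustrative)."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self.resident = OrderedDict()  # texture name -> size in MB, oldest first

    def used_mb(self):
        return sum(self.resident.values())

    def request(self, name, size_mb):
        """Make a texture resident and return the names evicted to make room."""
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as recently used
            return []
        if size_mb > self.budget_mb:
            raise ValueError("texture is larger than the whole pool")
        evicted = []
        # Drop the oldest textures until the new one fits in the budget.
        while self.used_mb() + size_mb > self.budget_mb:
            old_name, _ = self.resident.popitem(last=False)
            evicted.append(old_name)
        self.resident[name] = size_mb
        return evicted


pool = TexturePool(budget_mb=100)
pool.request("wall", 40)
pool.request("floor", 40)
pool.request("wall", 40)          # reused, so "floor" is now the oldest
print(pool.request("gun", 40))    # evicts the oldest texture to stay in budget
```

The point of the design is that the pool never grows past its budget: a smaller pool means more evictions and more streaming work, not an overflow into system memory.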

Doom: The Dark Ages heavily relies on asset streaming, and because of that, it doesn't run into strict performance limitations on 8GB graphics cards. In my testing of the game, the 8GB RTX 4060 was able to hold up without any issues, even at 1080p with the highest graphics preset. With the same settings, the 32GB RTX 5090 can easily gulp down 11GB or more of VRAM, but due to how id Tech 8 is designed, you wouldn't notice a difference solely based on VRAM capacity.

To demonstrate this, above, you can see three systems with identical settings in Doom: The Dark Ages. From left to right, there's the AMD Ryzen AI Max+ 395, followed by the RTX 4060 and the RTX 5090. The two desktop GPUs look identical, but the Ryzen AI Max+ system shows a lower-quality gun texture, despite the fact that the integrated GPU in that chip technically has more VRAM available to it than the RTX 4060. Doom: The Dark Ages, and id Tech 8 more broadly, can scale up or down depending on the hardware that's available to it, and it does so gracefully.

Sacrificing image quality to maintain performance

8GB GPUs are fine! Just don't look at the textures, please

Examples of games that handle VRAM limitations so well that you shouldn't notice them are few and far between. A step down from those games are titles that won't sacrifice performance when you run out of VRAM, but they will sacrifice image quality. These games use a streaming system similar to the one in Doom: The Dark Ages, but it isn't as robust or quick as id Tech 8's implementation. Instead of a drop in performance, you'll see comically bad texture pop-in, where low-quality textures remain on screen for several seconds before snapping to full resolution.

There are a few good examples of games that run into this issue, such as Halo Infinite, Warhammer 40K: Space Marine 2, and Redfall. The texture streaming can't keep up, and rather than overflowing to system memory and hurting performance, the game just settles for low-quality textures until capacity is available to stream in the higher-quality versions. Although you won't see a significant performance drop due to VRAM limitations right away, this is still a pretty bad experience. You can reach a point where you're running around seeing smooth blobs of what an object should be, and it feels like the game is broken.

In these games, the VRAM limitation comes down solely to texture size, so you can normally solve the streaming issue by lowering your texture quality. That'll make the game look worse, of course, but it gets around the distracting pop-in you'll see.

Devolving into an unplayable mess

We've been here before

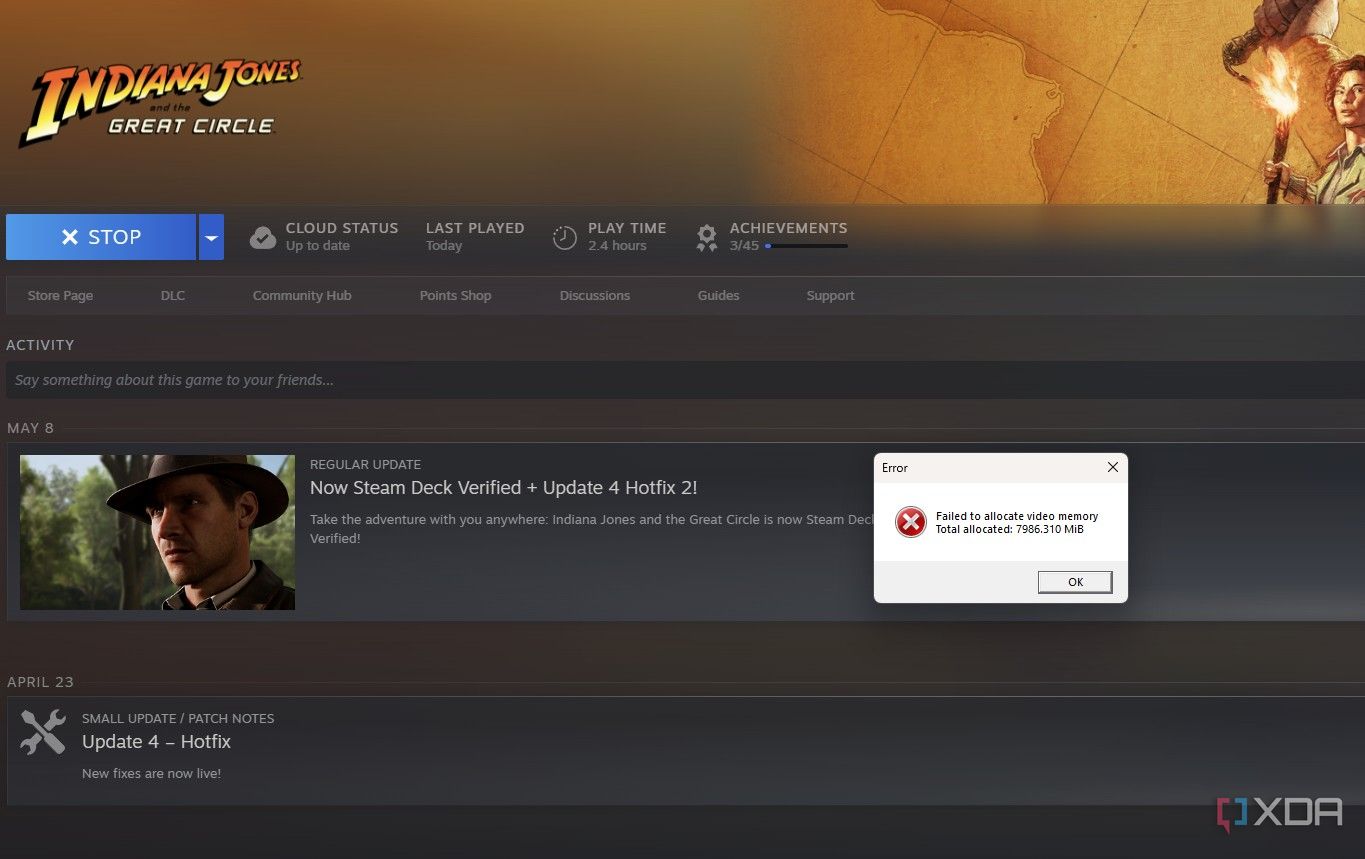

The majority of games with VRAM issues, and the ones that you'll consistently see referenced when discussing 8GB graphics cards, are the ones that run into a stone wall with VRAM and go out to system memory, severely hurting your performance. There are a ton of examples of games that fall into this camp, including Stalker 2, Indiana Jones and the Great Circle, Hogwarts Legacy, and The Last of Us Part 1. Resolution and texture quality play a critical role in VRAM usage regardless, but in these titles, they often make the difference between playable and unplayable performance.

There really isn't a range of performance issues when a game becomes limited by VRAM. You won't see a slight drop in performance with less VRAM. Rather, you'll see your performance completely tank. The first few hits to system memory will manifest as stutters, but more often than not, the continual requests for system memory will bog down the system so much that you'll slip into single-digit frame rates. On the RTX 4060 in Stalker 2, for example, my frame rate was so low that it would take two or three seconds for the game to respond to any input. I was running at 4K, which isn't where you'd play this game on the RTX 4060, but it demonstrates the point.

Tools like upscaling can help reduce the strain on VRAM since you're rendering the game at a lower resolution, but other performance-enhancing features like frame generation can actually put more strain on VRAM since you're holding a generated frame in the buffer.
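The resolution side of that trade-off is simple arithmetic, sketched below. It assumes a single render target at 4 bytes per pixel; real games keep many buffers in different formats, so treat the absolute numbers as illustrative and focus on the ratio. The function name is hypothetical.

```python
def render_target_mib(width, height, bytes_per_pixel=4):
    """Size of one render target in MiB (4 bytes per pixel is an assumption)."""
    return width * height * bytes_per_pixel / (1024 ** 2)


native_4k = render_target_mib(3840, 2160)
internal_1080p = render_target_mib(1920, 1080)

print(f"4K target:    {native_4k:.2f} MiB")
print(f"1080p target: {internal_1080p:.2f} MiB")
print(f"Ratio:        {native_4k / internal_1080p:.0f}x")
```

Upscaling from 1080p to 4K cuts each internal buffer to a quarter of its native size, while every extra frame held at output resolution adds a full-size buffer back.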

When you run into performance limitations like this, your VRAM is essentially creating an extreme bottleneck, and in these situations, you'll see cards like the RX 6700 XT with a 12GB frame buffer outperforming something like the RTX 3070 Ti with an 8GB buffer, despite the RTX 3070 Ti holding a more powerful GPU.

You don't need more VRAM (until you do)

There's been a lot of criticism directed towards 8GB GPUs, and in particular, Nvidia's dedication to them at the lower end of its product stack. Some of that is justified. There are numerous clear examples of when 8GB graphics cards aren't enough to hold up in games. However, there's nuance to the VRAM discussion.

Limitations don't always show up in the same way, if they show up at all. And with recent changes to cache and texture streaming, one 8GB GPU may hold up to certain games better than another. It's a good idea to buy a GPU with more than 8GB of VRAM if you're playing above 1080p, but if you're sitting on an 8GB card and still getting the performance you want, that's all that matters.
