Home Assistant is one of the best ways to automate your smart home, and the Open Home Foundation that owns and governs its development is always pushing new updates and improvements. Alongside Home Assistant itself, the same developers maintain a number of add-ons, and one of them is Piper. It's a local text-to-speech engine, capable of using any compatible voice model (such as GLaDOS) to synthesize speech for a local voice assistant. Now, it's just received a huge update that will make using it with a local large language model (LLM) a significantly better experience.

The update, rolled out as part of Piper version 1.6.0, is highlighted in the official changelog as "Add support for streaming audio on sentence boundaries." What this means is fairly simple: rather than waiting for the entire response text to arrive before synthesizing any voice, Piper will start speaking as soon as it reaches the end of the first sentence. That should massively cut the delay before you hear anything, particularly when a local LLM is generating the responses. It should still shave off some time when using cloud-based AI as well.
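
To make the idea concrete, here is a minimal Python sketch of splitting a stream of text at sentence boundaries. It is not Piper's actual code: the function name `stream_sentences`, the boundary rule (a `.`, `!` or `?` followed by whitespace), and the sample chunks are all assumptions made for illustration.

```python
import re
from typing import Iterable, Iterator

# Assumed boundary rule: '.', '!' or '?' followed by whitespace.
# Real text-to-speech engines may use more careful sentence detection.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def stream_sentences(chunks: Iterable[str]) -> Iterator[str]:
    """Yield each complete sentence as soon as it appears in the stream."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = _SENTENCE_END.split(buffer)
        # Every part except the last one is a finished sentence.
        for sentence in parts[:-1]:
            if sentence:
                yield sentence
        # The last part may still be growing, so keep it buffered.
        buffer = parts[-1]
    # The stream has ended: flush whatever text is left.
    if buffer.strip():
        yield buffer.strip()


# Hypothetical chunks, arriving the way an LLM might emit them.
llm_chunks = ["The lights ", "are on. It is ", "21 degrees", " inside. Any", "thing else?"]
sentences = list(stream_sentences(llm_chunks))
print(sentences)
# ['The lights are on.', 'It is 21 degrees inside.', 'Anything else?']
```

In a real pipeline, each yielded sentence would be handed to the speech synthesizer right away, which is why the first audio can start while later text is still being generated.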

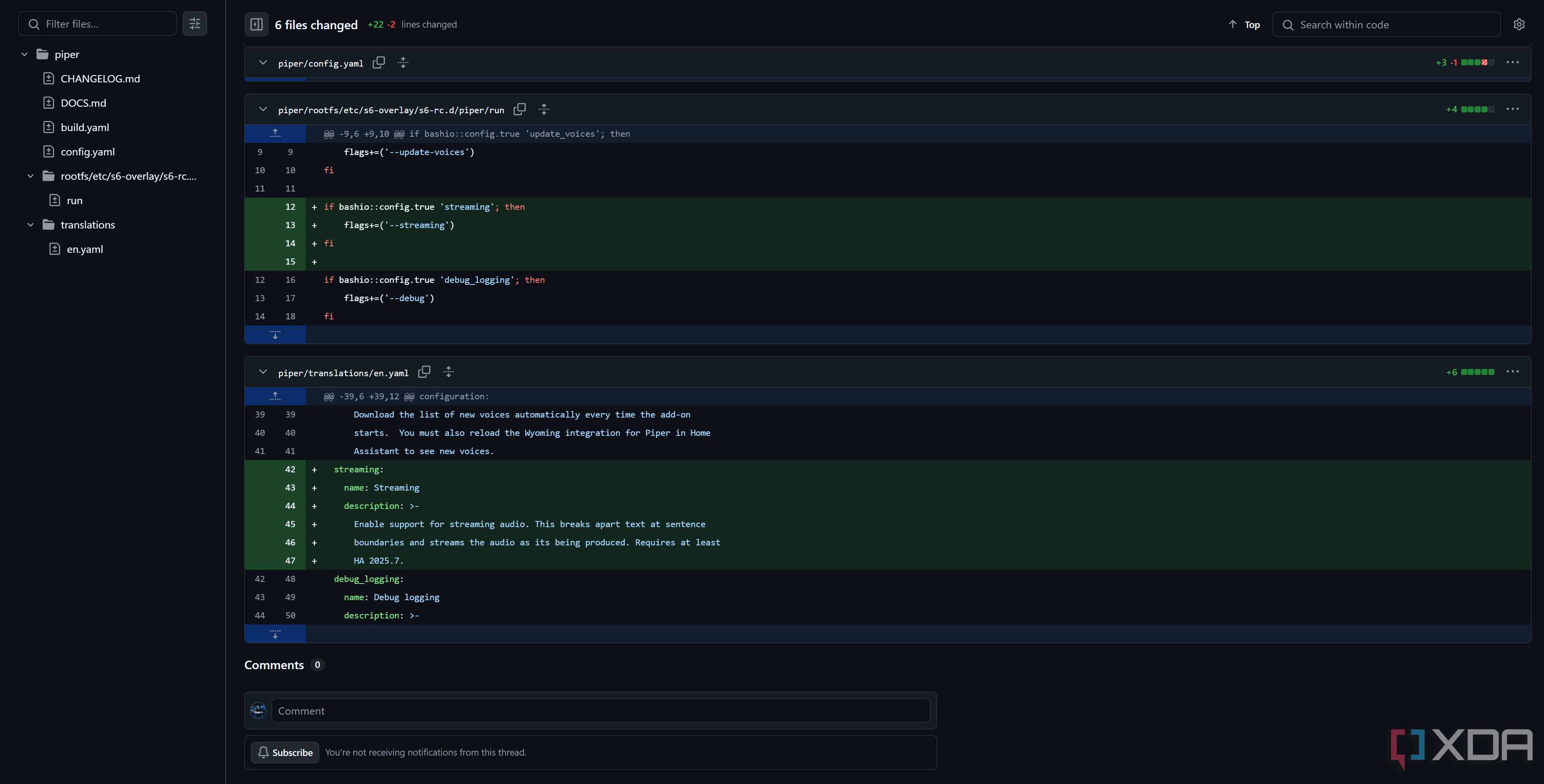

Piper's latest update can be installed right now and the toggle is already available in its configuration settings, but there's a slight catch: most people won't be able to use its streaming capabilities just yet.

Home Assistant's July update is a requirement for Piper's streaming support

It won't be out for another week

Because of the interconnected nature of many of the official Home Assistant add-ons and integrations, it's not particularly surprising that a new feature in an add-on sometimes requires an update to Home Assistant first. That's exactly the case here: unless you're on the beta branch, you're going to need to wait about a week before the feature actually becomes active. The following string was added to the English translation files for Piper:

Enable support for streaming audio. This breaks apart text at sentence boundaries and streams the audio as its being produced. Requires at least HA 2025.7.

Home Assistant follows a monthly release schedule, with the most recent update, at the time of writing, being Home Assistant 2025.6.3. New versions of Home Assistant are typically scheduled for the first Wednesday of the month, each preceded by a week of beta testing. This means that the July version of Home Assistant, 2025.7, is expected to drop officially on July 2nd, with the beta scheduled for June 25th. So, if you're on the beta branch, you may only need to wait a few hours, but most users will need to wait a week before they can start using it.
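
The dates above follow from simple calendar arithmetic, sketched here in Python. The helper `first_wednesday` is a made-up name, and the "first Wednesday, beta one week earlier" rule is only the typical pattern described above, not a guarantee for any given month.

```python
from datetime import date, timedelta


def first_wednesday(year: int, month: int) -> date:
    """Return the first Wednesday of the given month."""
    first = date(year, month, 1)
    # date.weekday() counts Monday as 0, so Wednesday is 2.
    offset = (2 - first.weekday()) % 7
    return first + timedelta(days=offset)


release = first_wednesday(2025, 7)
beta = release - timedelta(weeks=1)  # assumed: beta starts one week earlier
print(release, beta)
# 2025-07-02 2025-06-25
```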

As for why this is such a big upgrade for local LLM enthusiasts: previously, you'd need to wait for the entire response to finish generating before Piper would start producing the audio to match it. If the machine handling your queries, such as a home server, was slow at generating text, you could be waiting tens of seconds in extreme cases to hear a response. With this change, speech will be streamed once the first sentence has been generated, so you'll start to hear a response from your voice assistant while the rest of the text is still generating in the background.

This works because spoken audio plays back more slowly than most machines running a local LLM can generate text. For example, my home server can be on the slower side when it comes to text generation, yet the words still generate faster than a voice assistant would actually read them. This way, I can use streaming audio and get a response much faster, even though the text hasn't finished generating yet. It won't just be local LLM users seeing an upgrade either: with cloud-based AI, like OpenAI's GPT models or Google's Generative AI platform, it'll shave a small bit of time off responses, so that you can hear the answer faster.
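
A rough back-of-the-envelope calculation in Python shows the effect. Every number here is hypothetical (response length, first-sentence length, generation speed, speaking rate, and the words-per-token ratio), and the sketch ignores the time Piper itself needs to synthesize audio.

```python
def seconds_to_first_audio(total_tokens: int, first_sentence_tokens: int,
                           tokens_per_second: float, streaming: bool) -> float:
    """Estimate how long text generation delays the first spoken audio."""
    # With streaming, only the first sentence has to exist before speech starts.
    tokens_needed = first_sentence_tokens if streaming else total_tokens
    return tokens_needed / tokens_per_second


# Hypothetical example: a 120-token reply with a 15-token first sentence,
# generated at 10 tokens per second.
wait_full = seconds_to_first_audio(120, 15, 10.0, streaming=False)
wait_stream = seconds_to_first_audio(120, 15, 10.0, streaming=True)
print(wait_full, wait_stream)
# 12.0 1.5

# Assumed rates: 0.75 words per token and speech at 150 words per minute.
generated_words_per_second = 10.0 * 0.75
spoken_words_per_second = 150 / 60
# If this holds, generation stays ahead of playback and speech never stalls.
print(generated_words_per_second > spoken_words_per_second)
# True
```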

This is a massive update for voice assistants in Home Assistant, and you should be able to use it from July 2nd on any system utilizing Piper. If you're not on the beta branch, you'll need to wait, but I personally can't wait for it to arrive and to start playing with it.
