Home Assistant is a fantastic software package that hands you near-complete control of your smart home. With support for a huge range of integrations, most devices and services just work, in ways that can tangibly improve your life. With the advent of Large Language Models (LLMs), there are also far more ways to turn raw data points into something useful. That's why I connected an LLM to my Home Assistant instance, and it's made a lot of things possible, including dynamic notifications.

There are a few different ways to configure this, depending on your hardware capabilities. The first, and arguably best, option is to use a tool like Ollama to host an LLM on your computer or home server. Home Assistant has an Ollama integration that can send requests to the model. The downside is that you need the hardware and free resources to pull it off, so if you don't have them, the next best option is a cloud-based provider.

In the world of cloud-based providers, you can use either Google's Gemini API or OpenAI's API (the models behind ChatGPT). Gemini has a free tier in its API that will work for most people and gets the job done, but if you're looking to do more (and frequently), setting up the OpenAI API with gpt-4.1-nano is an incredibly cost-effective way to pull this off, too. Whichever way you integrate your generative AI model, the automation itself is built the same way.

Selecting our source data and setting up our process

The process will differ depending on what you want to do

The first thing you'll need to figure out is what you want to accomplish. Do you want a weather report that tells you what clothes to wear when you wake up in the morning? Do you want a day summary from your calendar? Or do you want an hourly report that contains the sensor data you care about most? All of these are easy to accomplish and simply require passing the data to your LLM in the request you make. We'll go with a weather report that suggests what type of clothes to wear for this example, and you can apply the same process to your other needs.

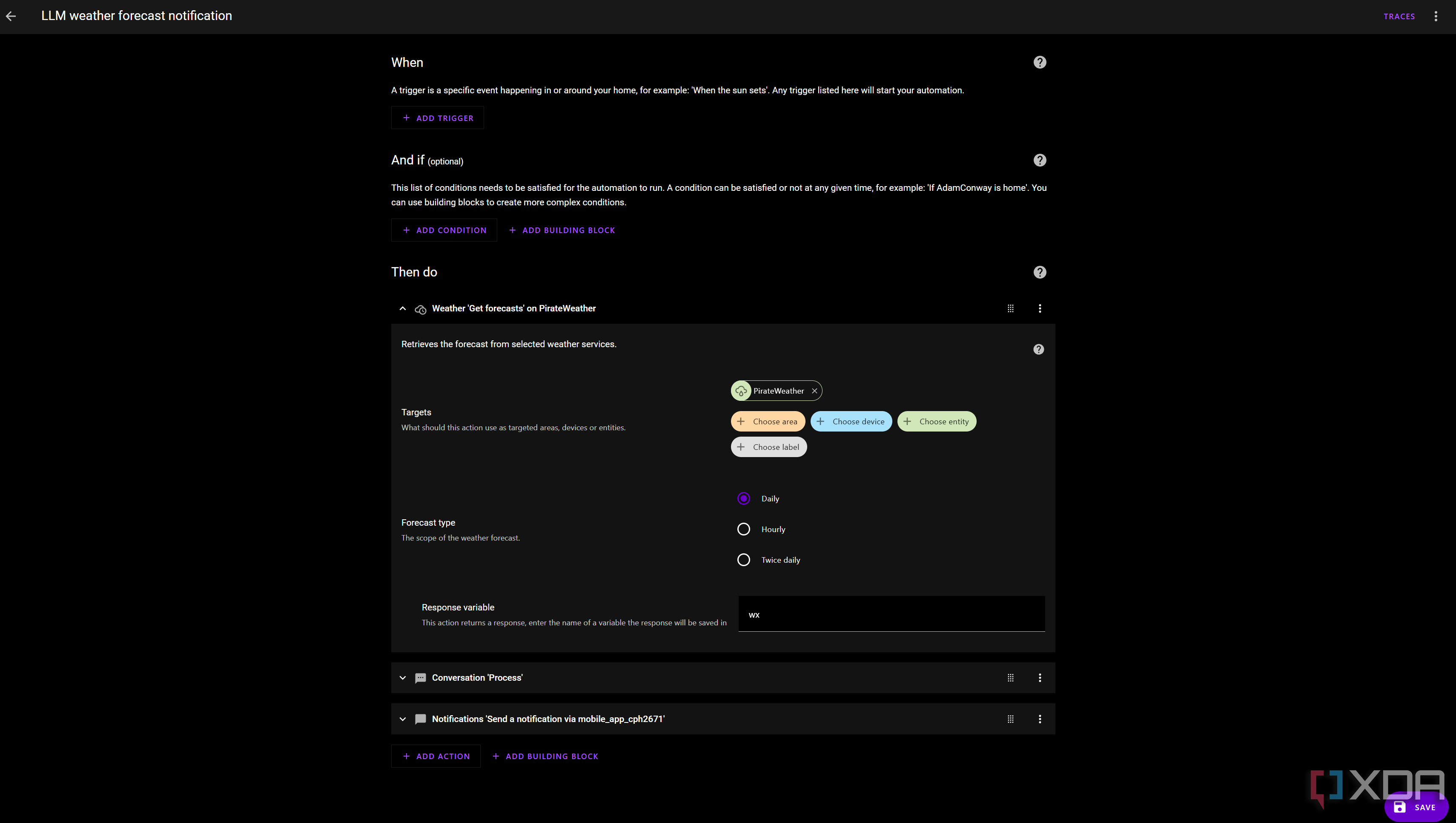

Next, we need to map out the pipeline our data will follow: retrieve the forecast, send it to our LLM, save the response, and then use that response in a notification to our device. Assuming you already have an integration that provides a weather forecast (such as AccuWeather or PirateWeather), we can use the built-in "Get Weather Forecasts" action in Home Assistant to retrieve the data in a format that can be turned into a JSON object for our LLM. We'll save the response to a variable called "wx", which only exists in the context of our automation.
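If you prefer editing the automation in YAML rather than the visual editor, the forecast step might look roughly like the sketch below. It assumes the weather entity is "weather.pirateweather" (the one used in this example) and that it supports daily forecasts; adjust both to match your setup.

```yaml
# One step in the automation's action sequence.
# Assumption: the entity ID and the "daily" forecast type match your weather integration.
- action: weather.get_forecasts
  target:
    entity_id: weather.pirateweather
  data:
    type: daily
  # The result is stored under the entity ID, e.g. wx['weather.pirateweather']['forecast']
  response_variable: wx
```

The response is keyed by entity ID, which is why later templates have to index into "wx" with the entity name before reaching the forecast list.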

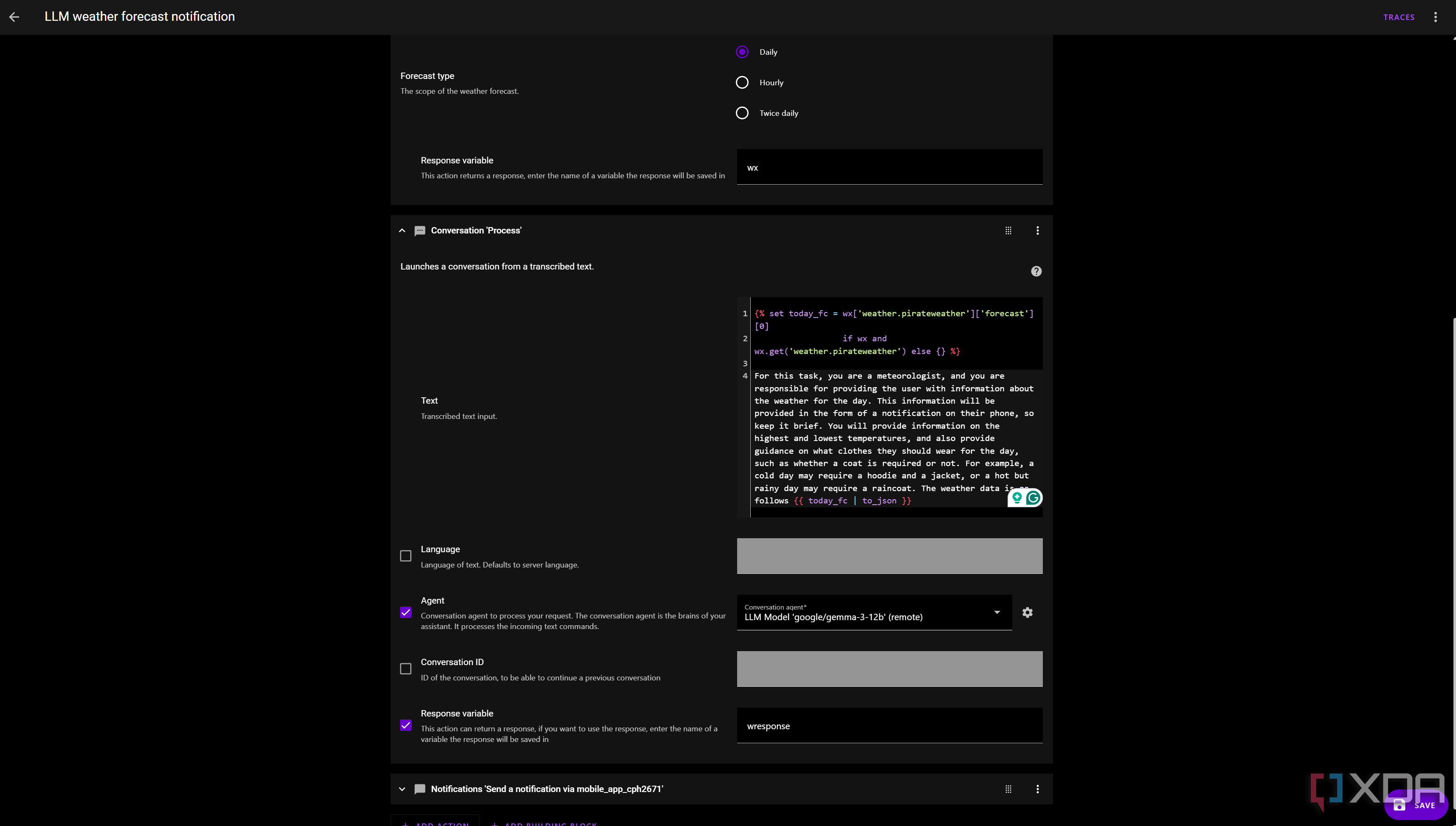

Next, we'll need to process the data to get just today's forecast. By default, you'll get a response that contains the forecast for the next week, starting at day 0 (today) and going to day 6. We'll create a "Conversation: Process" action, pointing to our LLM, with just today's data in the forecast.

In the "Text" box, start with this:

{% set today_fc = wx['weather.pirateweather']['forecast'][0] if wx and wx.get('weather.pirateweather') else {} %}

This sets "today_fc" to the first entry (today) of the forecast list in the "wx" response we saved earlier, or to an empty object if the response is missing. My prompt after the above line is as follows:

For this task, you are a meteorologist, and you are responsible for providing the user with information about the weather for the day. This information will be provided in the form of a notification on their phone, so keep it brief. You will provide information on the highest and lowest temperatures, and also provide guidance on what clothes they should wear for the day, such as whether a coat is required or not. For example, a cold day may require a hoodie and a jacket, or a hot but rainy day may require a raincoat. The weather data is as follows {{ today_fc | to_json }}

The bit at the end turns today's forecast that was saved to "today_fc" (from "wx") into a JSON object that can be passed to our language model. We then set our conversation agent to whatever LLM you want to use, and we set our response variable to "wresponse".
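For reference, here is a rough YAML sketch of the same "Conversation: Process" step. The agent ID "conversation.my_llm" is a hypothetical placeholder for whichever conversation agent your LLM integration created, and the prompt is shortened here, with a template comment marking where the rest of it goes.

```yaml
# Sends today's forecast to the LLM and stores the reply in "wresponse".
- action: conversation.process
  data:
    agent_id: conversation.my_llm  # hypothetical; use your own agent's entity ID
    text: >-
      {% set today_fc = wx['weather.pirateweather']['forecast'][0] if wx and wx.get('weather.pirateweather') else {} %}
      For this task, you are a meteorologist, and you are responsible for
      providing the user with information about the weather for the day.
      {# paste the rest of the prompt here; template comments are not sent to the model #}
      The weather data is as follows {{ today_fc | to_json }}
  response_variable: wresponse
```

Keeping the "set" line and the prompt in the same text field matters, because "today_fc" only exists inside the template where it is defined.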

Finally, we can either save this to a variable for later retrieval or directly pipe this response to another service, such as a notification. Using Home Assistant's built-in notifier, the text of the notification should simply be the following:

{{ wresponse.response.speech.plain.speech }}

Now run your action at the top right. If it works, you should receive your notification once everything is processed. If it doesn't work or is taking too long, you can debug it by clicking Traces at the top right to see what the issue is.
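In YAML, the notification step could be sketched as follows. The action name "notify.mobile_app_my_phone" and the title are assumptions for illustration; the Home Assistant companion app registers a notify action named after your device, so substitute your own.

```yaml
# Final step: push the LLM's reply to a phone.
- action: notify.mobile_app_my_phone  # hypothetical device name
  data:
    title: "Today's weather"  # illustrative title, not from the original setup
    message: "{{ wresponse.response.speech.plain.speech }}"
```

Because "wresponse" is only available inside this automation run, the notification step has to come after the conversation step in the same action sequence.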

Dynamic notifications are a lot of fun

Get creative with it

I've been having fun with dynamic notifications, and you can use them for literally anything. I've seen people use them in creative ways, such as notifying someone when their plants need watering, and I already use regular notifications for my work meetings, too. Mine currently acts like GLaDOS from Portal, but you can be as creative as you like. Another common setup is to pair it with Frigate, so that your snapshots are sent to the LLM for processing to describe what's in the image.

There's a whole world of opportunity when pairing a self-hosted LLM with Home Assistant, but if you can't self-host, ChatGPT is an inexpensive way to do it, and Google's Gemini has entirely free usage every day up to a certain limit. Give it a try!
