Ever since I stepped into the home lab landscape with Proxmox, I’ve tinkered with multiple server-oriented distros, including the Xen-based XCP-ng, enterprise-grade Harvester, and even Unix-powered SmartOS. However, ESXi remained the only platform I never got to tinker with, as Broadcom had gotten rid of its free license by the time I bought some spare PCs to run VMware’s flagship virtualization platform.

As luck would have it, Broadcom ended up reinstating the free version of ESXi a month ago. With my home lab in a better state than it has ever been, it was finally time to give ESXi a shot. Unfortunately, ESXi refused to work on most of my devices, and the only x86 SBC that managed to run the platform barely had four cores for me to work with. So, I did what any other self-respecting home lab lover would: virtualize ESXi inside my Proxmox workstation, of course. To my surprise, it works really well, and I'd go so far as to say that it’s my favorite way of tinkering with the hypervisor.

Getting ESXi to run on my devices was a nightmare

And I don't want to spend a fortune on yet another NIC

If you’ve read my recent ESXi articles on XDA, you may already know the hullabaloo surrounding its incompatibility with consumer-grade NICs. But for those who haven’t read about my misadventure with setting up ESXi, here’s a brief overview of how everything went down.

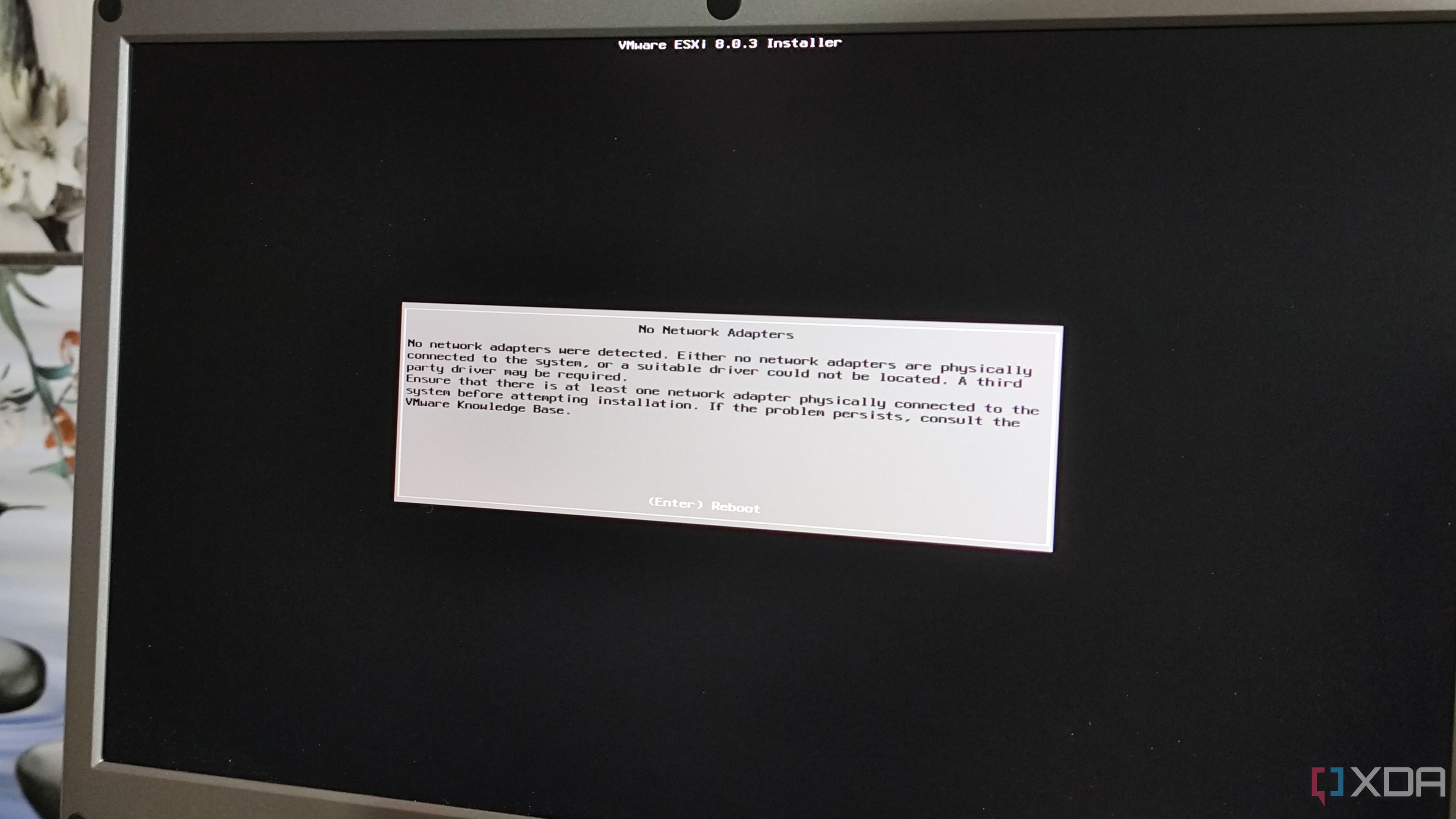

Despite starting the installation process on a high note, the ESXi setup wizard failed to detect the network card inside my ASRock B550 Phantom Gaming motherboard, and my 10GbE TX401 NIC and TP-Link USB to Ethernet adapter proved just as useless.

The NAS devices in my setup didn’t fare any better either. Truth be told, I would’ve excused ESXi's lack of support for consumer-grade Ethernet controllers, as it’s something you’d typically use in an enterprise setup. Heck, its biggest FOSS rival, Harvester, requires a 16-core CPU and 32GB memory at the bare minimum, and you’ll need twice as many cores and RAM for a production-tier setup.

But the real surprise was that even my dual-CPU Xeon workstation’s Ethernet controller proved insufficient for ESXi. In the end, I had to resort to running ESXi on my ZimaBoard 2, as its Intel-based Ethernet controller was the only one in my entire home lab that didn’t trigger the No Network Adapters error message during the installation sequence. Unfortunately, the device had its own set of issues that prevented me from using it as the test bench for ESXi.

Virtualizing ESXi is a straightforward process

Long live nested virtualization

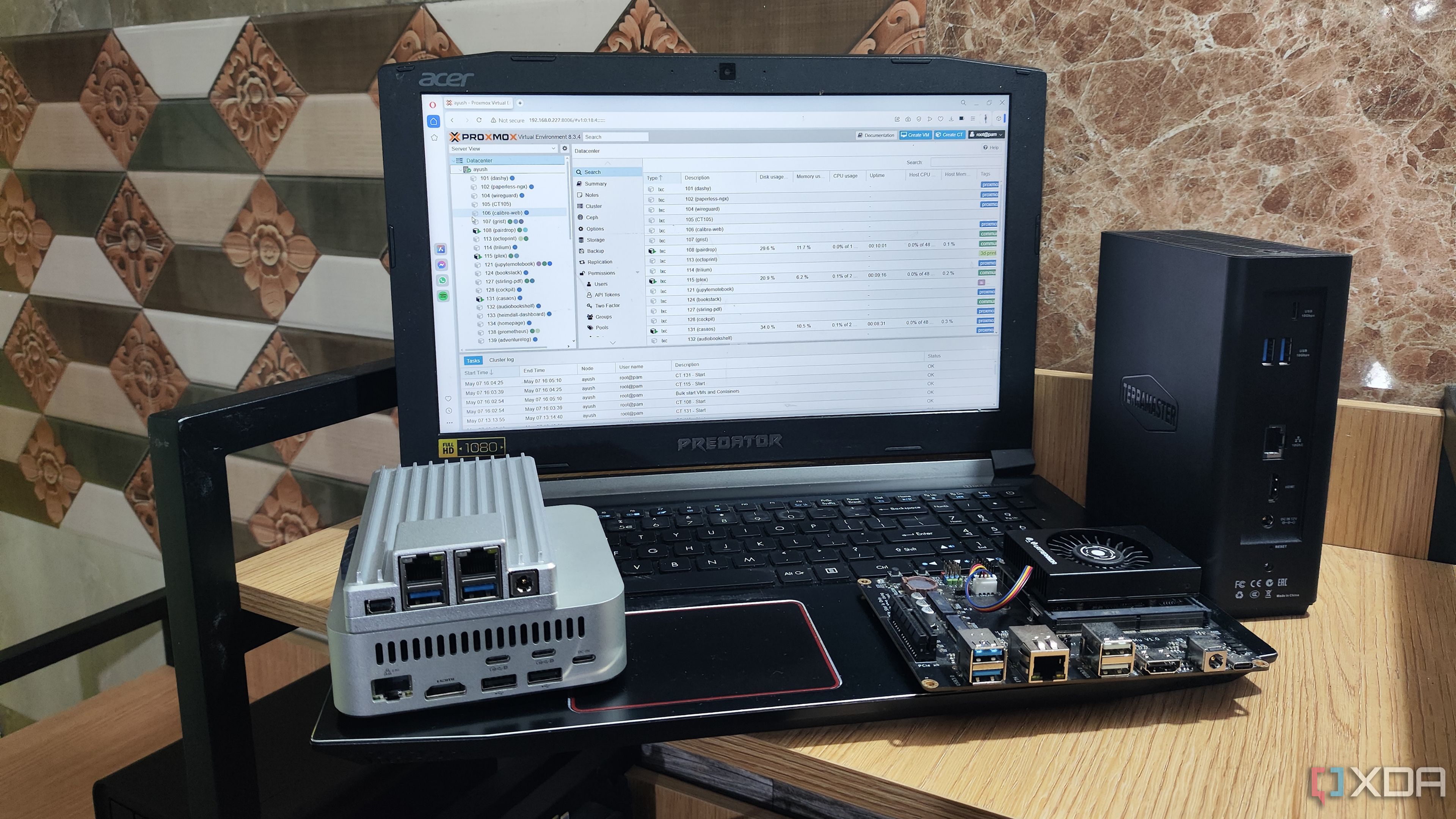

Although the ZimaBoard 2 is a fantastic machine for something that costs as little as $179, it’s still an SBC at the end of the day. Even leaving its 8GB memory aside, the SBC’s N150 processor gets pushed to the limit whenever I try to run multiple virtual machines at once. Clearly, I needed something with a bit more oomph to build an ESXi experimentation server.

That’s where nested virtualization comes in handy, as it lets me run virtual machines within VMs. Think of it like Inception but for home lab setups. Since Proxmox supports this functionality, I decided to bring my 24-core, 48-thread Xeon server back into the equation.

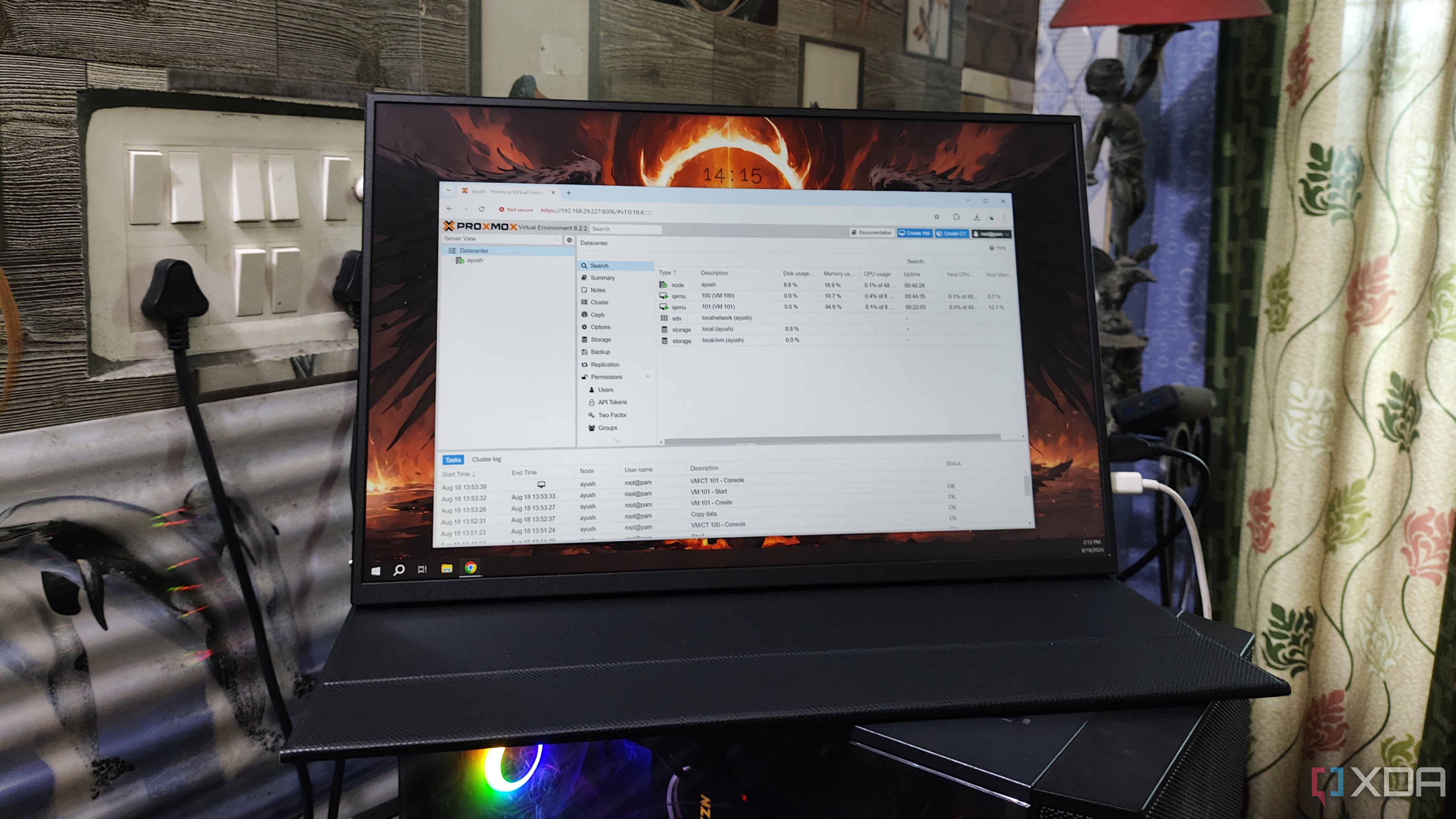

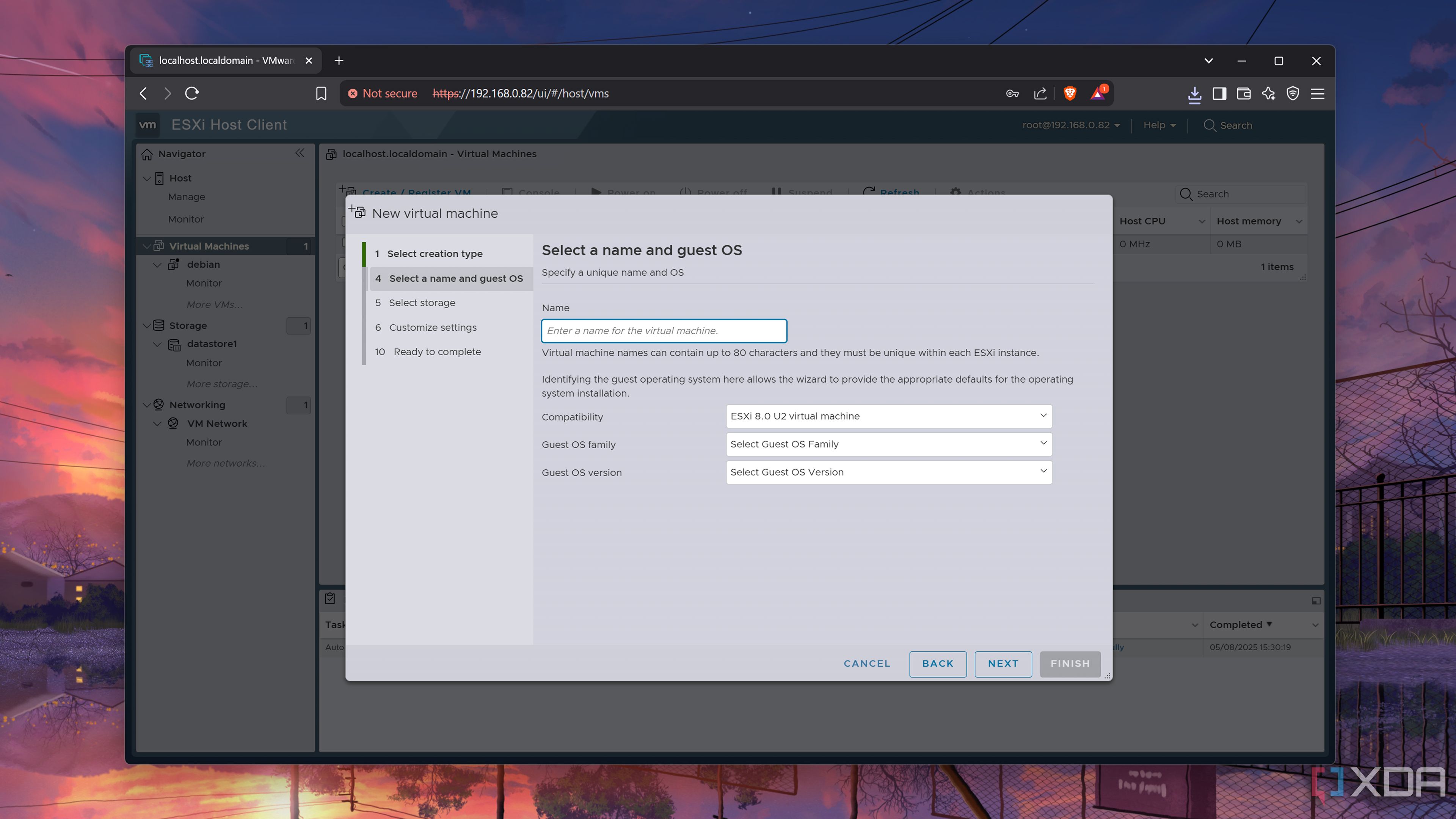

But first, I had to make some tweaks while creating a virtual machine using the VM creation wizard. After granting 8 v-cores and 8GB memory to the ESXi virtual machine, I switched its disk bus from SCSI to SATA. Likewise, I changed the network adapter model from VirtIO to VMware vmxnet3. Since I wanted to use separate drives for the boot files and VM data, I allocated a second drive to the ESXi instance.
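For anyone who prefers the command line, the wizard choices above map roughly onto a single qm create call. The snippet below is a minimal sketch rather than the exact configuration I used: the storage name (local-lvm), bridge (vmbr0), disk sizes, VM name, and ISO filename are all placeholders I've assumed, and vmxnet3 is Proxmox's VMware-compatible network model. It only prints the command unless APPLY=1 is set, so it is safe to run anywhere first.

```shell
#!/bin/sh
# Dry-run helper: prints the command by default, executes it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '%s\n' "$*"; fi
}

VMID=104            # VM ID used in this article
STORAGE=local-lvm   # assumption: name of the Proxmox storage
BRIDGE=vmbr0        # assumption: default Proxmox bridge

# 8 cores, 8GB RAM, two SATA disks (boot + VM data), vmxnet3 NIC.
# Disk sizes (in GiB) and the ISO path are illustrative placeholders.
run qm create "$VMID" \
    --name esxi-nested \
    --ostype other \
    --cores 8 --memory 8192 \
    --sata0 "$STORAGE:32" \
    --sata1 "$STORAGE:100" \
    --net0 "vmxnet3,bridge=$BRIDGE" \
    --ide2 "local:iso/esxi.iso,media=cdrom"
```

Running it as APPLY=1 sh script.sh on the Proxmox host would create the VM for real, provided the placeholder names match your setup.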

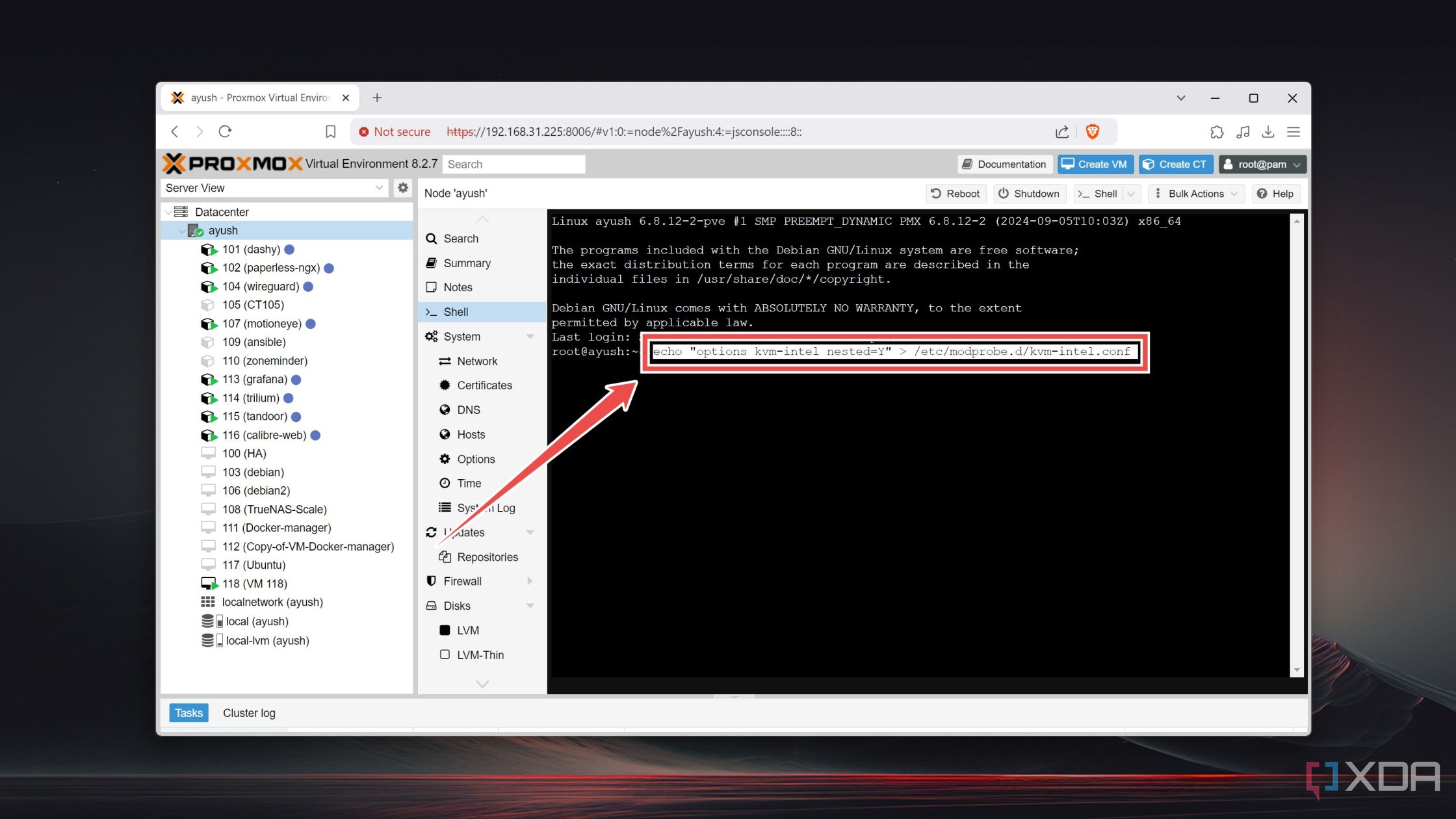

Before firing up the virtual machine, I ran the qm set 104 --cpu host command inside the Shell tab to grant nested virtualization privileges to the ESXi VM (with 104 being the numerical ID of the virtual machine). If you’re following along with this article and haven’t previously set up nested virtualization, you’ll have to run the echo "options kvm-intel nested=Y" > /etc/modprobe.d/kvm-intel.conf command (echo "options kvm-amd nested=1" > /etc/modprobe.d/kvm-amd.conf for AMD CPUs) and then reboot the Proxmox host so the KVM module picks up the new option.
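If you want to confirm that nesting is actually switched on, the kernel exposes the module parameter under /sys. Here's a small sketch of a check, assuming the standard kvm_intel and kvm_amd module names; the nested_enabled helper is just an illustrative name of my own.

```shell
#!/bin/sh
# Returns 0 if the given "nested" parameter file reads Y or 1,
# 1 if it reads anything else, and 2 if the file is not readable.
nested_enabled() {
    [ -r "$1" ] || return 2
    case "$(cat "$1")" in
        Y|1) return 0 ;;
        *)   return 1 ;;
    esac
}

# Check whichever KVM module is loaded on this host.
for mod in kvm_intel kvm_amd; do
    f="/sys/module/$mod/parameters/nested"
    if nested_enabled "$f"; then
        echo "$mod: nested virtualization enabled"
    elif [ -r "$f" ]; then
        echo "$mod: nested virtualization disabled"
    fi
done
```

On a machine where neither module is loaded, the script prints nothing, which is a hint that KVM itself isn't available.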

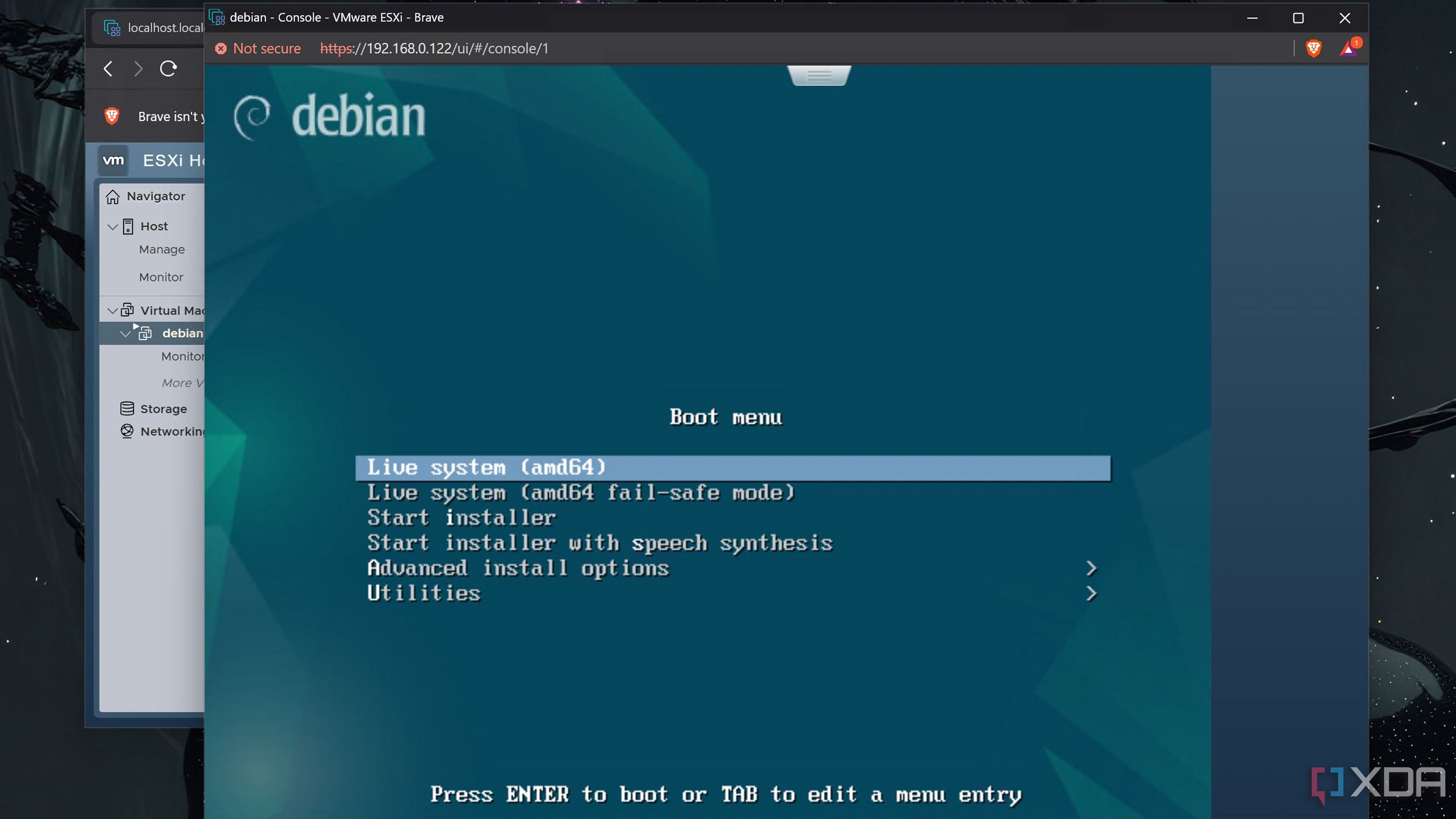

Once I booted the VM, it didn’t encounter any weird issues. The No Network Adapters error message was no longer visible, which is ironic considering that I was virtualizing ESXi on the very machine where it had failed to detect the Ethernet controller. Meanwhile, the virtual disks were immediately recognized by the wizard, and I proceeded with the rest of the installation sequence normally.

Performance is far better than I expected

Especially after allocating more resources to the ESXi VM

With the setup process complete, I rebooted the VM before granting the boot drive the highest startup priority. Soon, the VM displayed the IP address of the ESXi server, which I promptly fed into my browser to access the web UI.
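The boot priority change can also be made from the Proxmox shell instead of the Options tab. This is a hedged sketch that assumes the ESXi boot disk sits on sata0, which depends on how the VM was created. Like before, it only prints the command unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run helper: prints the command by default, executes it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '%s\n' "$*"; fi
}

VMID=104          # VM ID used in this article
BOOT_DISK=sata0   # assumption: the disk ESXi was installed on

# Put the installed disk first in the boot order.
run qm set "$VMID" --boot "order=$BOOT_DISK"
```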

After signing in with the root username and the password I’d set up earlier, I switched to the Storage tab and initialized the second virtual drive as a datastore before uploading a couple of ISO files to it.

Next, I used the New Virtual Machine wizard to create a Debian VM, and allocated 4 cores alongside 4GB of memory. Afterward, I mounted the Debian ISO as the CD/DVD drive and finally booted it up. Thanks to the power of nested virtualization, the virtual machine worked just fine. Even better than I’d expected, in fact, considering that I’m essentially running a VM inside another virtual machine.

Afterward, I allocated 32 v-cores and 32GB of memory to the ESXi virtual machine and tried running a couple of VMs simultaneously. Due to the free version of ESXi capping each VM at 8 virtual cores, I couldn’t go overboard with my CPU allocation. Other than that limitation, I have zero complaints about the virtualized ESXi home lab.
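Bumping the resources is a one-liner on the Proxmox side. The sketch below assumes the same VM ID of 104 and converts the memory figure to MiB, since that's the unit qm expects; gib_to_mib is a throwaway helper of my own. It prints the command by default and runs it only when APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run helper: prints the command by default, executes it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = "1" ]; then "$@"; else printf '%s\n' "$*"; fi
}

# qm takes memory in MiB, so convert from GiB.
gib_to_mib() {
    echo $(( $1 * 1024 ))
}

VMID=104   # VM ID used in this article

# 32 v-cores and 32GB of memory for the ESXi VM.
run qm set "$VMID" --cores 32 --memory "$(gib_to_mib 32)"
```

With hotplug left at its defaults, I'd expect the new core and memory values to apply only after the VM is shut down and started again.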

Despite the weird premise, ESXi runs surprisingly well inside Proxmox

Although I wouldn’t switch to ESXi as my main home lab OS in a million years, it still has a lot of value in the enterprise market – with a significant fraction of data centers relying on VMware’s offerings. That makes it a crucial virtualization platform for any DevOps and sysadmin enthusiast, including myself.

Unless you’ve got a device that supports ESXi, deploying it inside a platform that supports nested virtualization is the best way to use it without wasting hours trying to solve hardware incompatibility issues. Bonus points if you use Proxmox, a platform that has rightfully replaced ESXi for many home labbers.

Related

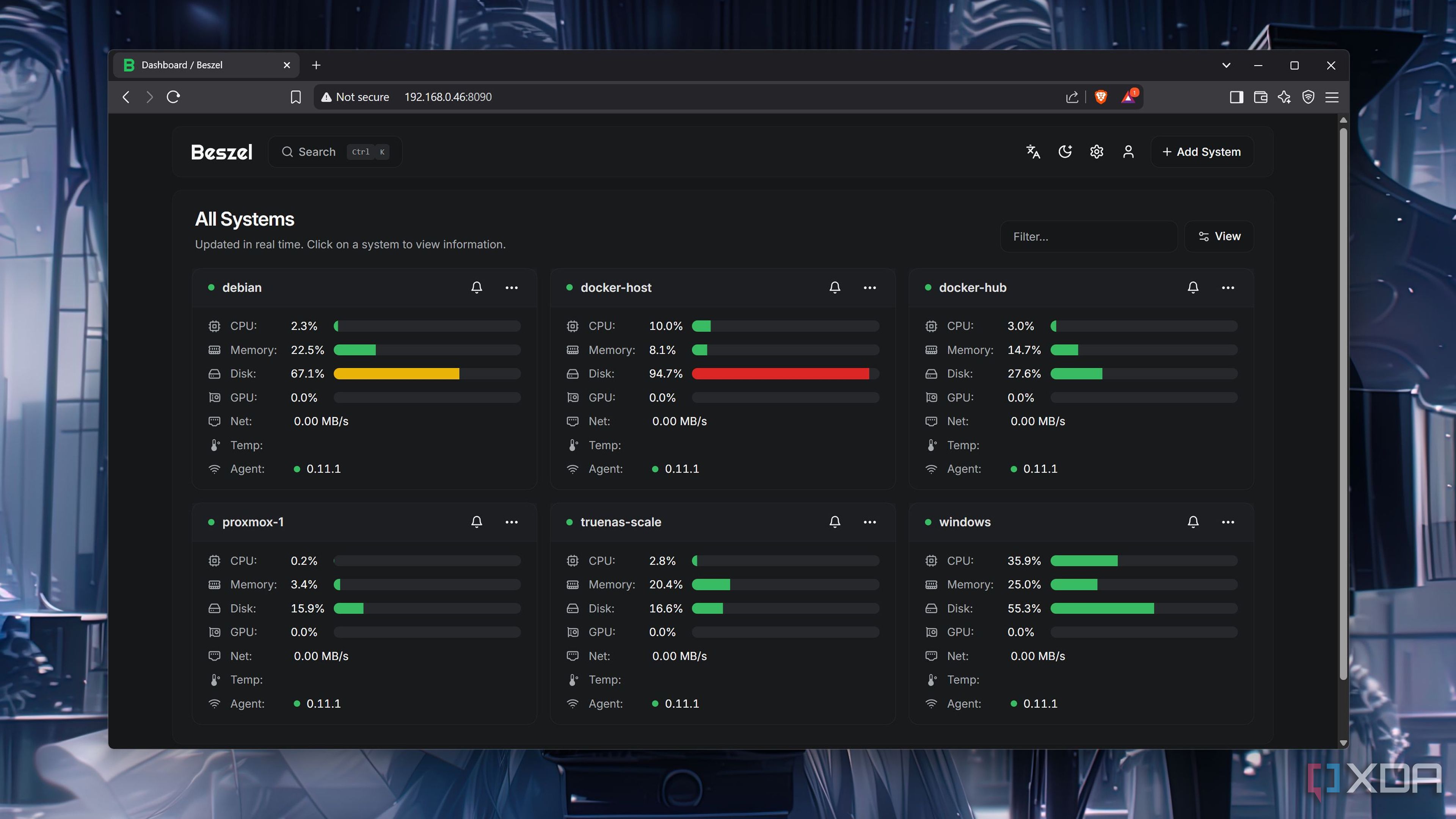

This free and open-source lightweight server monitor changed how I keep an eye on my home lab

Beszel has become my go-to utility for monitoring the server rigs, SBCs, NAS units, and mini-PCs in my home lab

.png)

English (US) ·

English (US) ·