Although data hoarders tend to shy away from SSDs due to their limited write endurance and poor suitability for long-term, unpowered storage, these ultra-fast drives make excellent boot drives for consumer PCs and home servers alike. As such, I favor SSDs over HDDs when installing bare-metal containerization environments, virtualization platforms, and server/NAS operating systems.

Unfortunately for me and my SSDs, certain services can degrade drive health over time, especially under heavy server workloads. Since I rely on Proxmox for all my self-hosting tasks and computing experiments, I’ve taken a couple of precautions to keep my boot drive in tip-top working order.

What causes SSD wear in Proxmox?

The iotop package reveals it all

Unlike their mechanical counterparts, SSDs store data in NAND flash cells. Every write (more precisely, every program/erase cycle) gradually wears down the oxide layer inside a flash cell, which is where the whole limited-write-cycles hullabaloo comes from. But even if you move I/O-heavy virtual machines to HDDs, Proxmox has a couple of underlying services that are infamous for writing excessively to the boot drive.

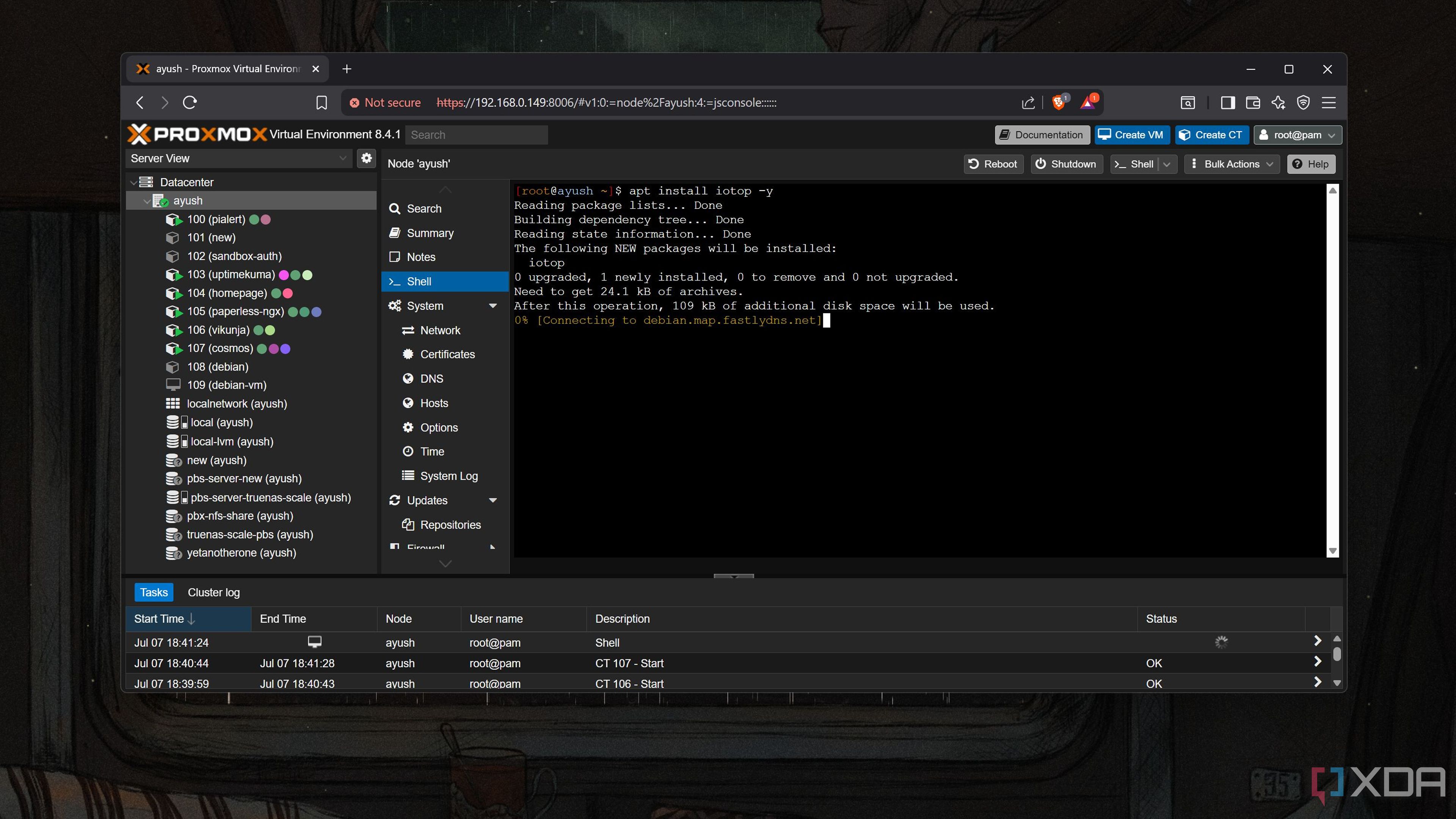

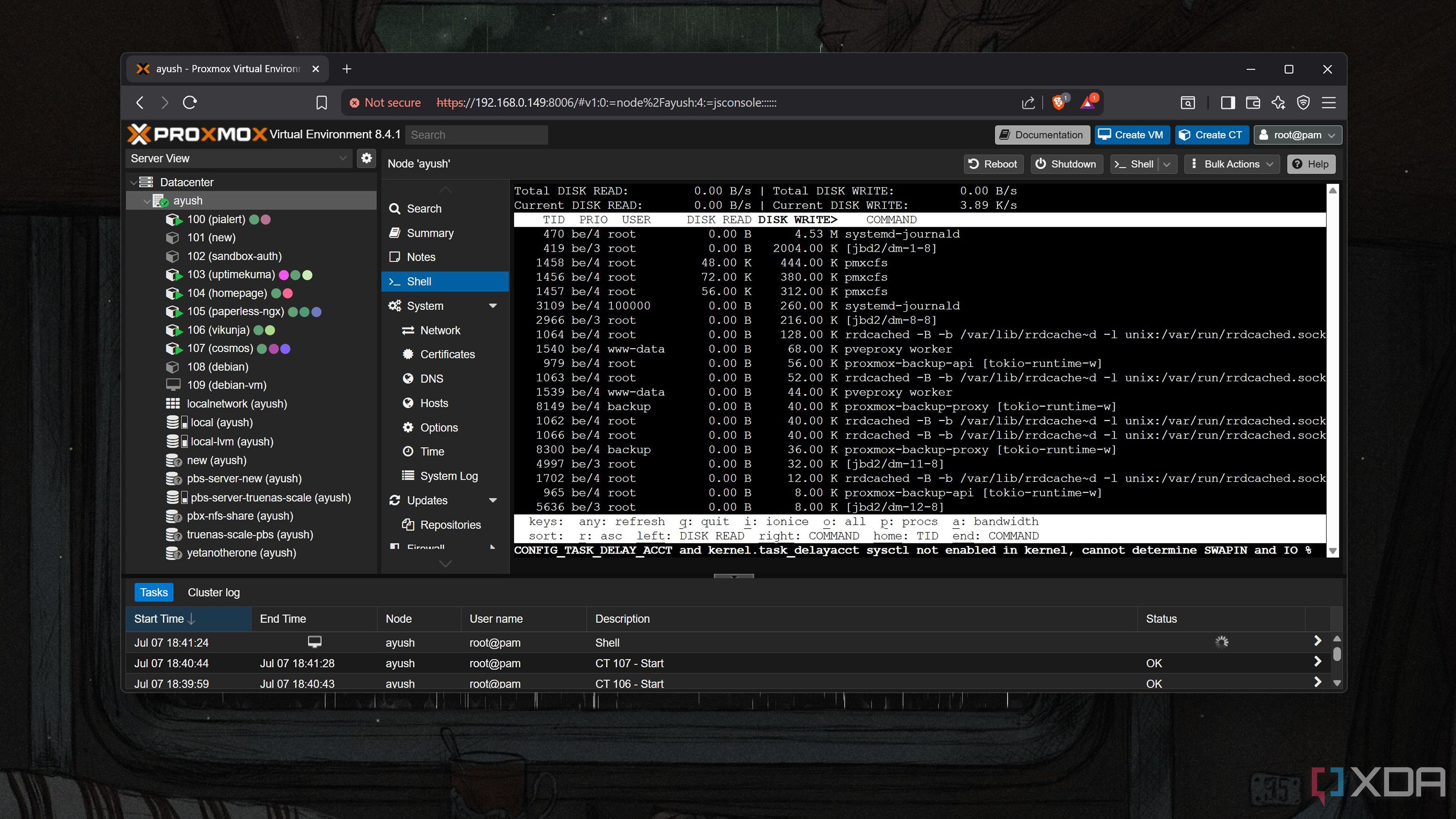

The iotop utility provides an easy way to check which processes perform heavy write operations on a drive. Since Proxmox is built on Debian, all you have to do is execute the apt install iotop -y command in the Shell UI of your Proxmox node to install the tool, then run iotop -ao. The -a flag shows the I/O accumulated since iotop started rather than the current bandwidth, and -o hides processes that aren’t doing any I/O.
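If you’d rather let iotop collect data for a while instead of watching the interactive view, it also has a non-interactive batch mode. The sketch below wraps it in a small function; the function name sample_io, the default output path, and the sample count and interval are illustrative assumptions, not anything Proxmox ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Proxmox or iotop): record accumulated
# per-process disk I/O to a text file using iotop's batch mode.
# Assumes iotop is installed (apt install iotop -y) and the shell runs as root.
sample_io() {
  local out="${1:-/root/iotop-sample.txt}"  # report file (illustrative default)
  local rounds="${2:-6}"                     # how many snapshots to take
  local delay="${3:-10}"                     # seconds between snapshots

  # -a: accumulated totals since start, like the interactive `iotop -ao`
  # -o: list only processes/threads that are actually doing I/O
  # -b: batch mode (plain text output, no interactive screen)
  # -n: number of iterations, -d: delay in seconds between iterations
  iotop -a -o -b -n "$rounds" -d "$delay" > "$out"
}
```

For example, sample_io /root/iotop-sample.txt 30 10 would capture roughly five minutes of activity. Because -a accumulates, the last snapshot in the file holds the largest totals.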

On practically every setup, you’ll notice that the systemd-journald service and certain pve-cfs processes cause the most write operations on the SSD. The former is really important for troubleshooting (though there are ways to keep the damage under control), but it’s the latter service (or rather, a pair of services) that we’re really here for.

Disabling the culprit services

It’s a solid fix if you don’t use a PVE cluster

The first of the two offenders, pve-ha-lrm.service (the Local Resource Manager), handles the HA-managed resources on the local PVE node. The second, pve-ha-crm.service (the Cluster Resource Manager), makes decisions for the entire cluster: it monitors the status of each node and ensures that the high-availability setup keeps functioning as long as enough nodes remain online to maintain quorum.

Since these services power the high-availability features of Proxmox clusters, you’ll want to keep them enabled if you’re trying to build a high-availability configuration. But home labbers who plan to stay on a single-node setup can disable them to prevent (or rather, considerably slow down) the wear on their boot drive.

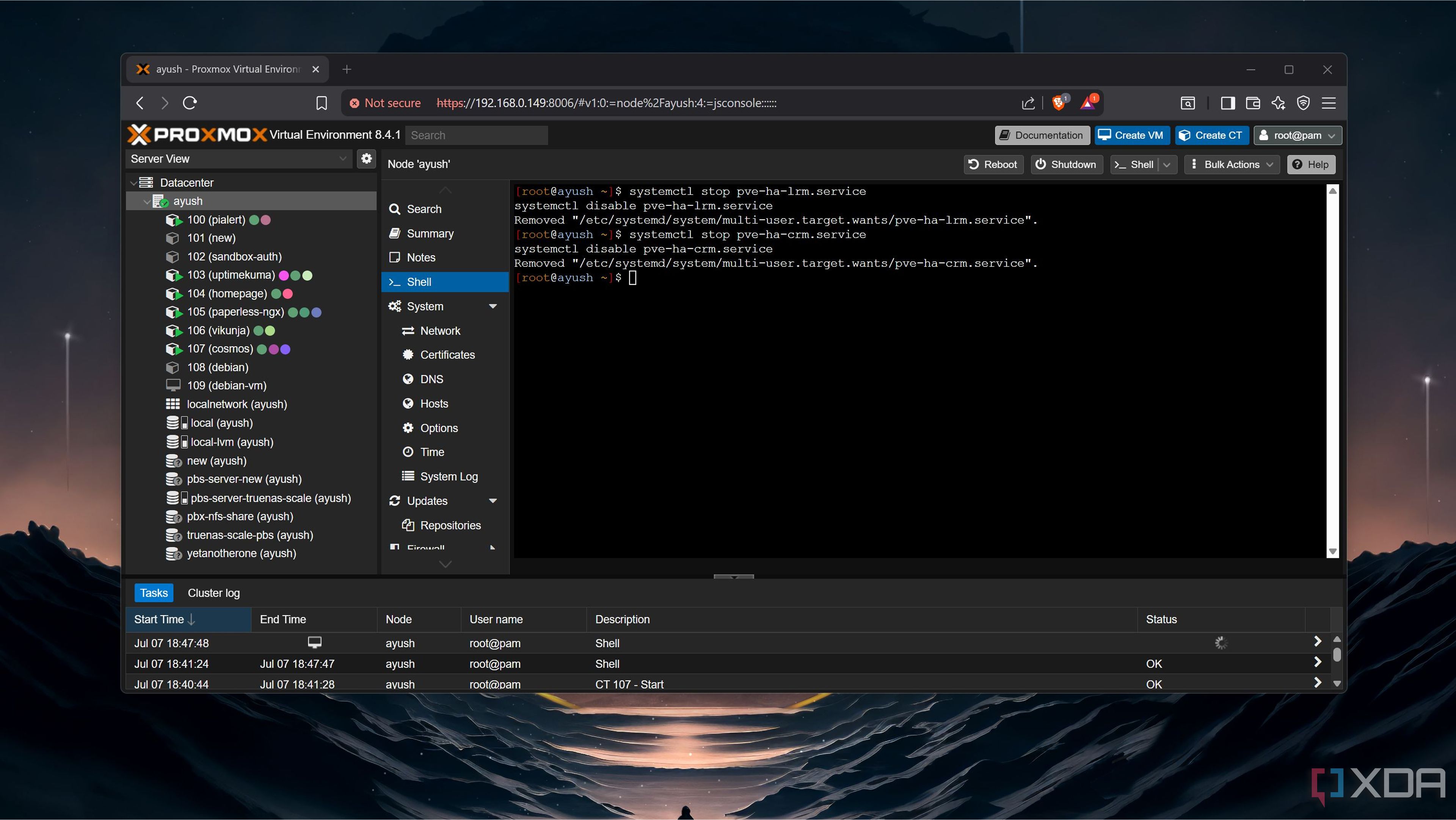

Doing so is fairly easy: use systemctl to stop each service, then disable it so that it doesn’t start again at boot.

- systemctl stop pve-ha-lrm.service

- systemctl disable pve-ha-lrm.service

- systemctl stop pve-ha-crm.service

- systemctl disable pve-ha-crm.service
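The four commands above can also be folded into a small function. This is a sketch under a few assumptions: disable_pve_ha is a made-up name, systemctl disable --now is used as the standard systemd shorthand for stop-then-disable, and the safety check assumes configured HA resources live in /etc/pve/ha/resources.cfg, so it refuses to run if that file has any content.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop and disable the Proxmox HA services on a single node.
# Assumption: configured HA resources live in /etc/pve/ha/resources.cfg;
# if that file is non-empty, HA is in use and the services should stay on.
disable_pve_ha() {
  local ha_cfg="${1:-/etc/pve/ha/resources.cfg}"
  local svc

  if [ -s "$ha_cfg" ]; then
    echo "HA resources are configured in $ha_cfg; leaving HA services alone" >&2
    return 1
  fi

  # Same order as the manual steps: local resource manager first, then the cluster one.
  # `disable --now` stops the unit and removes it from the boot sequence.
  for svc in pve-ha-lrm.service pve-ha-crm.service; do
    systemctl disable --now "$svc" || return 1
  done

  # Show the resulting state; both units should report "disabled".
  # (is-enabled exits non-zero for disabled units, so its exit code is ignored.)
  systemctl is-enabled pve-ha-lrm.service pve-ha-crm.service || true
}
```

If you later grow into a multi-node cluster and want HA back, systemctl enable --now pve-ha-lrm.service pve-ha-crm.service reverses the change.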

Bonus: Optimizing log storage

Log2Ram is the perfect tool for the job

Remember the systemd-journald process we spotted earlier in iotop? Unlike the cluster services we disabled, which serve a niche use case, systemd-journald collects and stores the system logs. As someone who has used them countless times, I can confirm that these logs are lifesavers when you need to troubleshoot the PVE server after a failed experiment or a misconfigured file breaks some functionality of the Proxmox node.

If you’re using a really low-quality SSD, you could run nano /etc/systemd/journald.conf to open the journald.conf file and set the Storage parameter to Storage=volatile (removing the leading # if the line is commented out), then apply the change with systemctl restart systemd-journald. This forces your PVE node to keep the logs in RAM and spares the SSD from the extra write operations. Unfortunately, the drawback is that your logs are wiped whenever the server shuts down or reboots. After a system crash, troubleshooting becomes extremely difficult, since you’ll have no record of what actually went wrong.
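Instead of editing the main file in nano, systemd-journald also reads drop-in files from /etc/systemd/journald.conf.d/, which keeps the change separate from the stock config. The sketch below writes such a drop-in; the file name volatile.conf, the helper name, and the 64M RuntimeMaxUse cap on the RAM-backed journal are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep the systemd journal in RAM only, via a drop-in file.
# journald merges /etc/systemd/journald.conf.d/*.conf on top of journald.conf.
make_journald_volatile() {
  local dir="${1:-/etc/systemd/journald.conf.d}"
  mkdir -p "$dir" || return 1
  # Storage=volatile: logs live under /run/log/journal (RAM) and vanish on reboot.
  # RuntimeMaxUse: upper bound on RAM used by the volatile journal (example value).
  printf '[Journal]\nStorage=volatile\nRuntimeMaxUse=64M\n' > "$dir/volatile.conf"
}
```

As with the manual edit, the setting only takes effect once journald restarts, for instance make_journald_volatile && systemctl restart systemd-journald.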

I suggest going the Log2Ram route instead. This neat package keeps /var/log in RAM and syncs it back to the SSD periodically and on shutdown, thereby offering the best of both worlds. Setting it up takes a few steps, though. Since Log2Ram isn’t included in the standard PVE repositories, you’ll have to add its repo manually before running the apt install command.

- echo "deb [signed-by=/usr/share/keyrings/azlux-archive-keyring.gpg] http://packages.azlux.fr/debian/ bookworm main" | tee /etc/apt/sources.list.d/azlux.list

- wget -O /usr/share/keyrings/azlux-archive-keyring.gpg https://azlux.fr/repo.gpg

- apt update && apt install log2ram -y
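After installing Log2Ram and rebooting the node, one quick sanity check is to look at what /var/log is mounted from. With Log2Ram’s default settings (zram disabled), its RAM-backed copy of /var/log shows up under the filesystem name log2ram in df output. The helper name and messages below are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether /var/log is currently served by Log2Ram.
# Assumes Log2Ram's default tmpfs mode, where the mount's source is named "log2ram".
check_log2ram() {
  # df prints a header line, then the filesystem that holds /var/log
  if df -h /var/log | grep -q '^log2ram'; then
    echo "log2ram is active on /var/log"
  else
    echo "log2ram is NOT mounted on /var/log" >&2
    return 1
  fi
}
```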

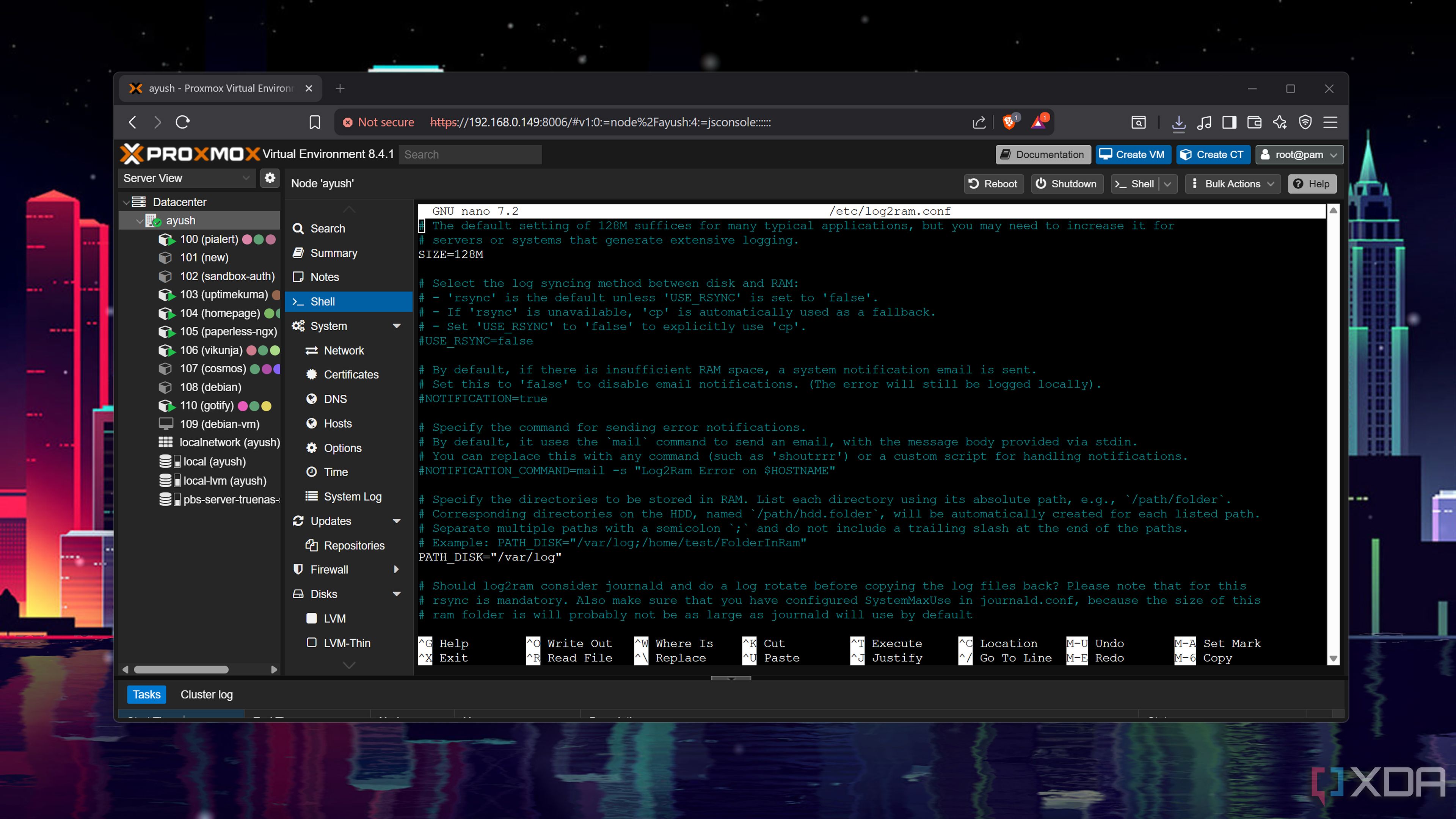

Once you’ve installed the package, remember to reboot your PVE node. If you’ve followed all the steps correctly, the systemctl status log2ram command should show Log2Ram as enabled and active. I’ve left my Log2Ram instance at its default settings, but you can adjust the SIZE (how much RAM the logs may use) and PATH_DISK (which directories are kept in RAM) parameters by running nano /etc/log2ram.conf in the Shell interface.
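If you do want to change those values without opening nano, a small sed-based helper works too. It’s a sketch: the function name is hypothetical, it assumes each setting sits on its own KEY=value line (as SIZE and PATH_DISK do in the stock /etc/log2ram.conf), and the 128M figure in the usage line is only an example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=value in log2ram.conf (e.g. SIZE), editing in place.
# Assumes the value contains no '|', '&' or '\', which sed would treat specially here.
set_log2ram_option() {
  local key="$1" value="$2" conf="${3:-/etc/log2ram.conf}"
  if grep -q "^${key}=" "$conf"; then
    # Overwrite the existing, uncommented setting
    sed -i "s|^${key}=.*|${key}=${value}|" "$conf"
  else
    # Key not present yet: append it to the end of the file
    printf '%s=%s\n' "$key" "$value" >> "$conf"
  fi
}
```

For instance, set_log2ram_option SIZE 128M followed by a reboot should bring Log2Ram back up with the larger RAM allocation. PATH_DISK is written as a quoted string in the stock file, so a new value for it would need to include the double quotes.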

Never cheap out on the boot SSD — especially on Proxmox

The precautions I’ve listed here should prove useful for folks who rely on SSDs as the boot drives for Proxmox. However, low-quality drives from no-name manufacturers tend to get worn down a lot faster than their premium counterparts, even with these provisions in place. While I wouldn’t advise getting a top-of-the-line PCIe 5.0 SSD as just a PVE boot drive, I do recommend going with a semi-decent one to avoid headaches later down the line.
