If you listen to big chipmakers like Intel, you'll constantly hear that they all want you to have an AI PC on your desk. They differ slightly in how they want AI to infuse your computer, but the NPU (a dedicated processor for specific AI workloads) is the driving force. Windows, macOS, and the apps that run on them are all adding AI-powered tools and features that use this new pint-sized powerhouse.

Out of all the chipmakers, you'd expect Intel to be ahead of the pack. After all, it's the only one with its own foundries to manufacture the chips it designs. But Chipzilla, as it's affectionately known, hasn't had the best few years, with Raptor Lake's design issues and the farming out of Arrow Lake instead of making the chips in-house.

So, that raises the question: if Intel wants everyone to have an AI PC, who's actually going to make the chips? Intel is slashing its internal divisions at a rate of knots, with the automotive business the latest to feel the headsman's axe, as part of an expected 15-20% reduction in its global workforce. Its top strategy officer is also gone, and Intel's future looks uncertain.

What is an "AI PC" anyway?

It's all about useful AI that runs locally

We spoke to Intel's Robert Hallock not long ago, and he told us that for an "AI PC," "the requirement to get that name is that all of the accelerators in the device must have specific AI accelerating extensions." Think on-device acceleration that is part of the instruction set in the CPU and GPU, plus the NPU, and that crucially supports the AI frameworks in use in the operating system.

Arrow Lake was among the first to include these instructions, while Meteor Lake and Lunar Lake focus more on NPU power. But Intel's chips are far from the only game in town. AMD's Strix Point has a capable NPU, as do Qualcomm's Snapdragon X Elite and Apple's M-series chips. Wherever the CPU comes from, know that the software industry is pushing for these AI-capable processors, and the hardware is necessary; without it, AI workloads land on the CPU and the entire system suffers.

The vital thing to know isn't that AI is coming to your PC, because it's already there. There will only be more of it, and having an NPU to handle those tasks will make the rest of your PC snappier, because the multipurpose CPU cores, which are slower at this kind of work, aren't tied up with computationally heavy AI tasks. Nvidia might be the king of AI in the datacenter and on the desktop, but NPUs will rule the roost for laptop users, which means a four-way scrap for supremacy between the CPU makers.

You won't be able to escape them

Every chipmaker is onboard and so is the software industry

Often, the hardware industry develops a new feature or set of instructions, and then software makers find ways to use them. This time around, the software industry is pushing for AI-capable hardware so it can stuff AI features into every piece of software you use.

No longer dubbed "machine learning" and used only in photo and video editing suites, AI now powers digital note-taking, Internet search, research and report making, and everything from fitness planners to travel itineraries. You can chat with your operating system as you work, or get AI agents to pull from multiple sources and turn the results into a podcast where you can ask the host questions out loud.

I'm not sure if AI is the future future, but it's the near future because that's what the industry wants it to be. It's poured untold billions into creating the tools and the models and the agents, and if nobody has the hardware to use them, then it all goes to waste. On the other side, the chipmakers have already seen the tools, and know that there is an audience waiting when their AI chips are ready.

Even if Intel has to outsource manufacturing, the AI PC is here to stay

Credit: Intel

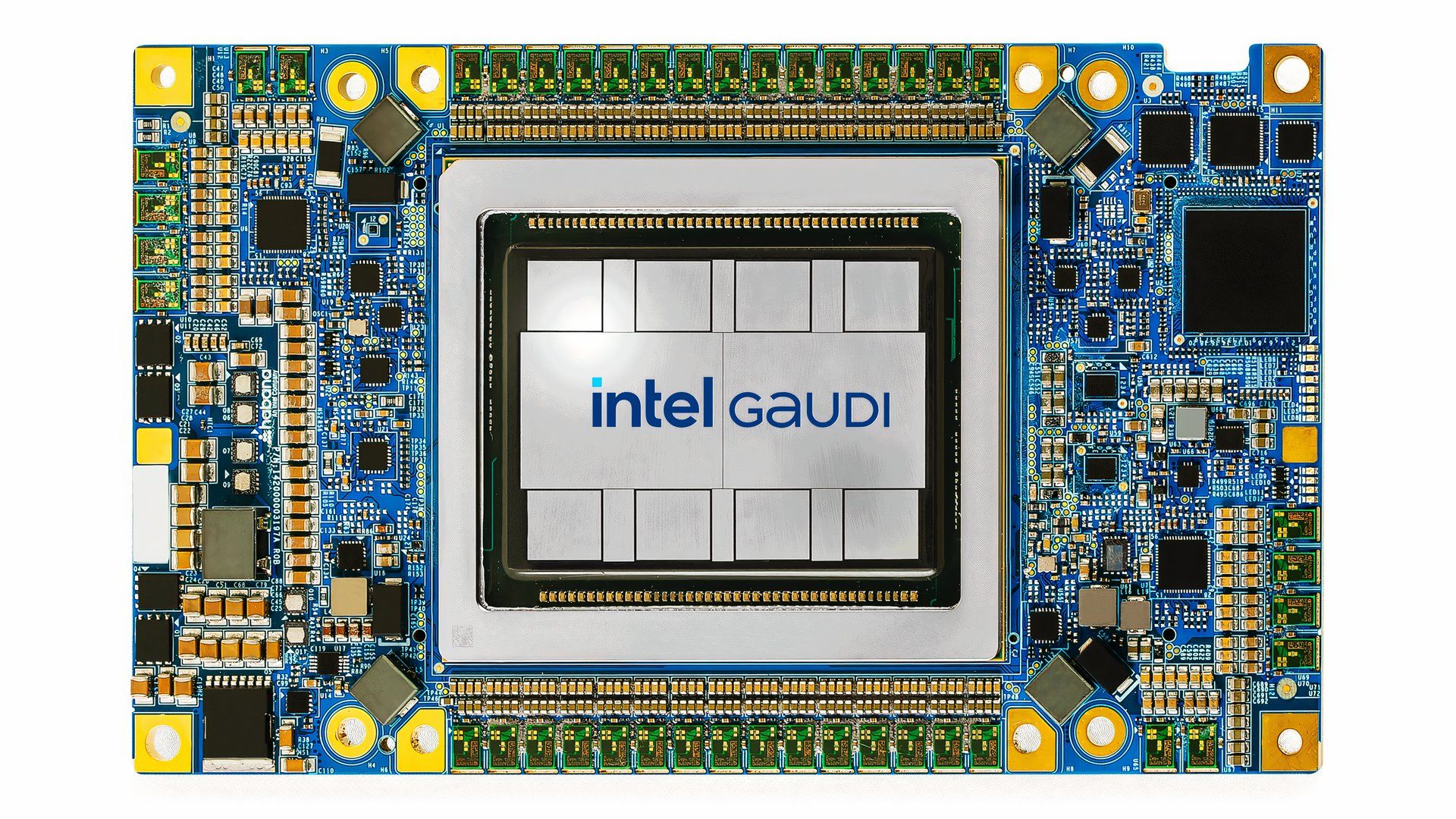

Whether it's AI accelerators for the datacenter or AI PCs for the office, AI is here to stay, at least for the short term. But one thing is telling: consumers would rather buy Raptor Lake CPUs than AI-enabled ones. You know, those Raptor Lake CPUs that were self-immolating with cranked voltages. It makes some sense: BIOS updates fixed the voltage issues, and Lunar Lake is significantly more expensive for laptop users.

The datacenter also wants those older generations of chips. There isn't a corresponding Lunar Lake there, but there is the powerful AMD Epyc range, which might be more expensive than older CPUs. With a new CEO, Intel might turn things around, but I'm not sure cutting Foundry staff and engineers is the way to do it.
