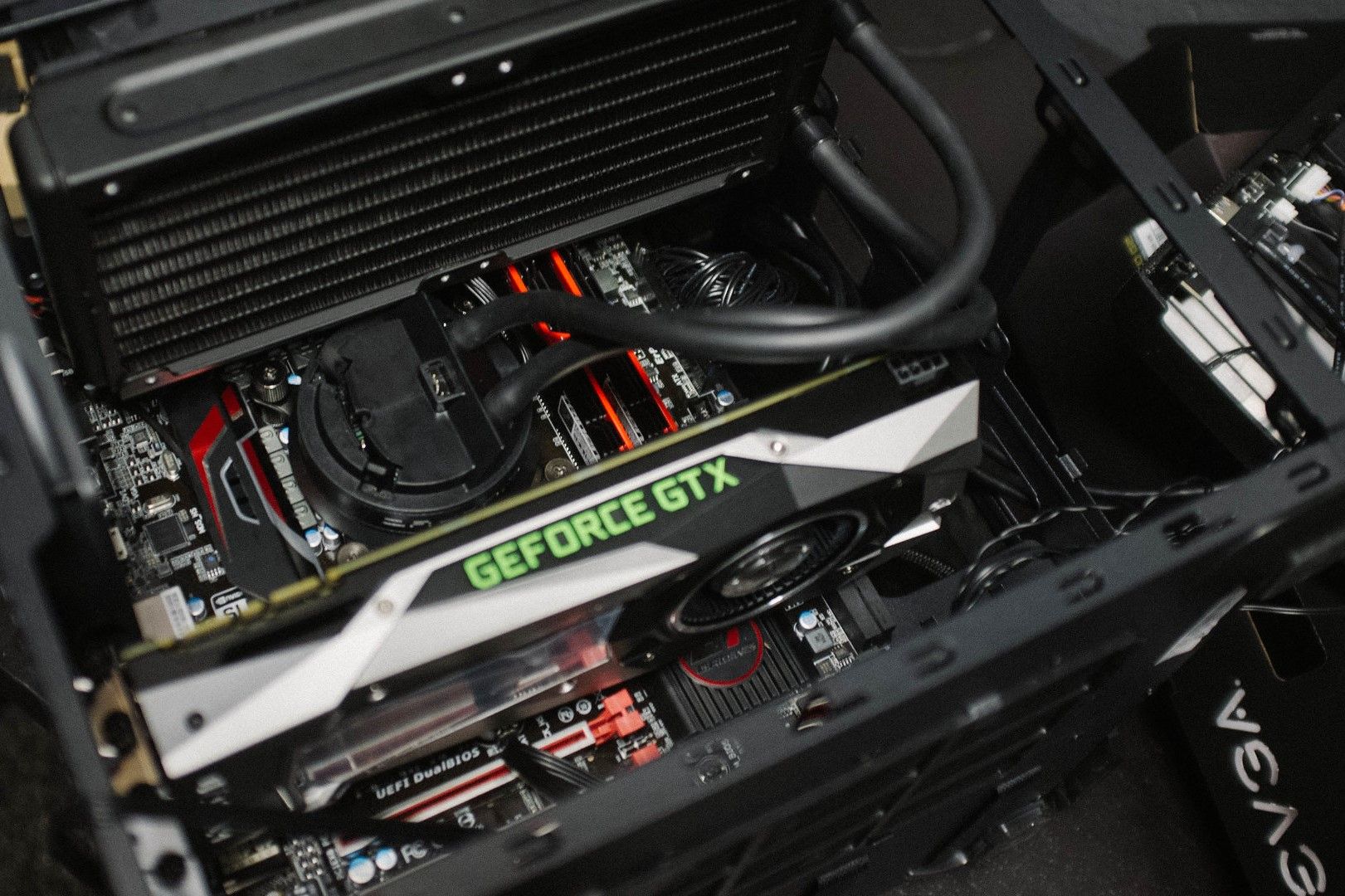

I've been running a TrueNAS system for nearly two years at this point, cobbled together out of old parts from previous builds and a desire to learn more about home servers and networking. I had been renting a dedicated Kimsufi box since 2016 or so, running an array of basic services, game servers for friends, and a personal Plex instance, but I wanted something that I controlled and could do what I wanted with. Armed with 24GB of DDR4 RAM, an AMD Ryzen 7 3700X, and a GTX 1070 Ti, I put together my first home server, and it's still the center of my home lab operation.

Recently, I was reflecting on the GTX 1070 Ti that I'm using and how impressive it is in terms of what it can do. Sure, it'll likely struggle with modern games at 1440p (let alone 4K), but it's a pretty powerful card when paired with Jellyfin, Plex, or any other self-hosted media platform capable of hardware acceleration. Plus, it can even run basic local AI models with its 8GB of VRAM. It's not exactly going to outpace my RTX 4080 on that front, but for a card that can be found for $100 or less on the second-hand market, it's surprisingly decent, especially for transcoding media.

The GTX 1070 Ti just about managed to support all the major codecs we love today

Released in 2017, the GTX 1070 Ti had a surprisingly good level of support for decoding major video formats. While AV1 eludes it (as that specification only arrived the following year), it's one of the few cards from that time period that can even support VP8, though that's a significantly more niche codec. Where the 1070 Ti truly shines is its support for decoding H.265, also known as HEVC, in 4:2:0. The 1000 series cards were the first to support it, aside from a select few in the 900 series.

Of course, HEVC isn't without its controversy. It's been slowly overtaken by AV1 in many ways, and while it managed to improve significantly on the compression level of H.264 content, it suffered from more restrictive licensing and actually contributed to the growth of AV1 as a royalty-free codec through the Alliance for Open Media. In order to ship a product with HEVC support, you need to acquire licenses from at least four patent pools (MPEG LA, HEVC Advance, Technicolor, and Velos Media) as well as numerous other companies, many of which do not offer standard licensing terms and instead require you to negotiate terms.

These restrictions are worse than those that came with H.264, which Firefox only supported thanks to Cisco paying the licensing fees on behalf of Mozilla through OpenH264. Even now, HEVC support only came to Firefox in February 2025 by way of a workaround, as decoding is offloaded to the GPU (which is sold with a license to decode it), through the VA-API. Without this workaround, HEVC would still be missing from Firefox, and even with it, it's not as plug-and-play as it would be on Google Chrome.

With that aside, all of the other major formats you'd expect are supported by the GTX 1070 Ti, too: MPEG-1 and MPEG-2, VP9, and, of course, H.264. Those capabilities aren't all that special; it's HEVC that really matters here. As for AV1, the card can't decode it in hardware, but many client devices support it natively, so I can still stream AV1 content from my server using Jellyfin, so long as the client plays the source file directly and doesn't need a transcode.
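To make the direct-play versus transcode distinction concrete, here is a minimal Python sketch. It is not how Jellyfin actually decides; the function name `playback_mode`, the lowercase codec labels, and the `needs_transcode` flag are all hypothetical, and the decode list simply mirrors the formats named in this article.

```python
# Codecs this article lists as hardware-decodable on the GTX 1070 Ti.
# Lowercase labels are an assumption made for this sketch.
GPU_DECODE = {"mpeg1", "mpeg2", "vp8", "vp9", "h264", "hevc"}


def playback_mode(source_codec: str, client_codecs: set[str],
                  needs_transcode: bool = False) -> str:
    """Classify how a file would be served in this simplified model.

    needs_transcode stands in for anything that forces re-encoding,
    such as a bitrate cap on the client (hypothetical flag).
    """
    codec = source_codec.lower()
    # The client can decode the source as-is, so the GPU is never involved.
    if codec in client_codecs and not needs_transcode:
        return "direct play"
    # The GPU can decode the source, so the transcode can be hardware-accelerated.
    if codec in GPU_DECODE:
        return "hardware transcode"
    # Neither applies (e.g. AV1 on this card): decoding falls back to the CPU.
    return "software decode"
```

Under these assumptions, an AV1 file sent to an AV1-capable client is a direct play, while an HEVC file sent to an H.264-only client lands on the GPU.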

It doesn't have a particularly hefty power draw, either

It's perfect for a home server

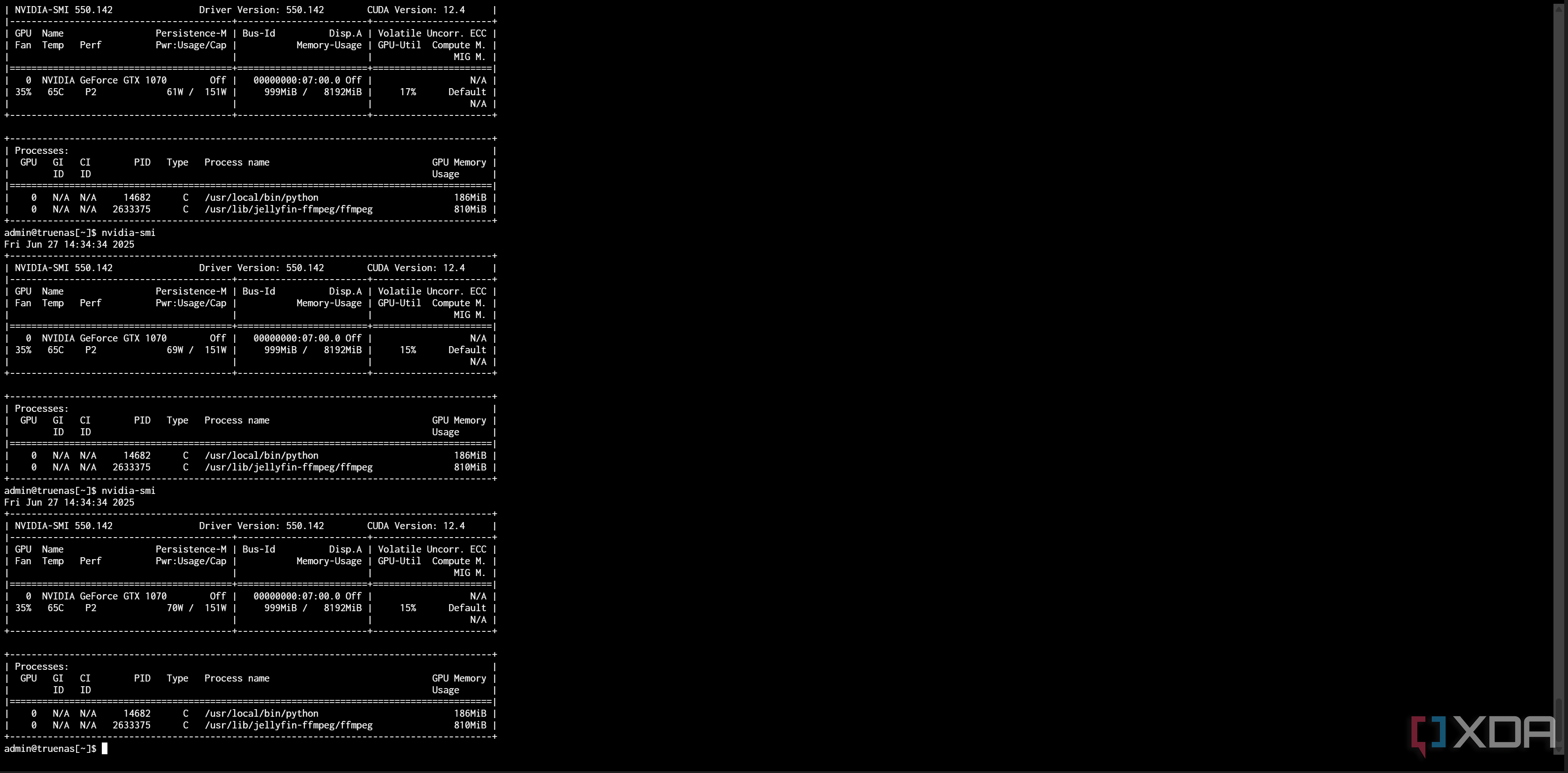

One of the other great aspects of the GTX 1070 Ti is its idle power draw. Right now, according to nvidia-smi, it's pulling a mere 9W of power, and my entire server is idling at 78W at the time of writing. That's with a Ryzen 7 3700X running my containers that are always active, like Nextcloud, Elasticsearch, and my CouchDB linked to Obsidian. Using nvidia-smi, I can see that GPU power usage jumps to between 60W and 70W when transcoding an HEVC movie using Jellyfin, but that's when taking a 4K movie with a 54 Mbps bitrate and transcoding it to 20 Mbps. Depending on the input to be transcoded, this can be a lot lower.
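If you want to log these readings rather than eyeball them, a small Python wrapper around nvidia-smi is one way to do it. This is a sketch, assuming nvidia-smi is on the PATH and the driver reports power draw for the card; the helper names `parse_power_draw` and `read_power_draw` are made up for illustration.

```python
import subprocess

# Ask nvidia-smi for power draw only, as bare numbers (watts), one line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=power.draw", "--format=csv,noheader,nounits"]


def parse_power_draw(output: str) -> list[float]:
    """Turn nvidia-smi's CSV output into a list of wattages.

    Lines that are not numeric (for example "[N/A]" on cards that
    do not report power) are skipped rather than raising.
    """
    readings = []
    for line in output.splitlines():
        text = line.strip()
        if not text:
            continue
        try:
            readings.append(float(text))
        except ValueError:
            continue
    return readings


def read_power_draw() -> list[float]:
    """Run nvidia-smi once and return the current power draw per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_power_draw(result.stdout)
```

Calling `read_power_draw()` in a loop while a transcode is running should show the jump from idle to load described above.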

For context, an RTX 3080 could idle at as much as 45W under these conditions (though likely significantly lower than that), and power usage when transcoding would be similar. In other words, I could do the same things I do now, but I'd use more energy doing them and need more space for a bigger card. The trade-off is that an RTX 3080 would let me do more with local LLMs, so it's not all positives for the GTX 1070 Ti, but it's a surprisingly capable card for what it costs today and what you might need it for in a home server.
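To put those idle figures in perspective, here is a rough back-of-the-envelope calculation in Python. It assumes the card sits idle around the clock for a full year, which overstates idle time on a server that also transcodes, and it uses the 45W worst case quoted above for the RTX 3080. The function name `annual_kwh` is just for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used at a constant power draw, in kilowatt-hours."""
    return watts * hours / 1000


idle_1070_ti = annual_kwh(9)       # about 79 kWh per year
idle_3080_worst = annual_kwh(45)   # about 394 kWh per year
extra = idle_3080_worst - idle_1070_ti  # about 315 kWh per year
```

Multiply the difference by your own electricity rate to get a cost; since the 45W figure is an upper bound, the real gap is likely smaller.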

Even now, the capabilities of these older cards are still pretty apparent. I recently configured a server for someone I was working with as a means to centralize their CCTV processing through Frigate. The server they opted to use packs a GTX 970, an even older card, yet it works just fine for six cameras with TensorRT and consumes between 50W and 180W of power depending on what's happening at any given moment. To be clear, that means it's analyzing video feeds, detecting people and other specified objects, taking snapshots, and recording. It's certainly not the most powerful system and would struggle with the addition of extra cameras, but for a home surveillance system, even a GPU more than a decade old has held up surprisingly well.

If you're building a home server and need a GPU, one of these older cards can be a great investment. Integrated GPUs are also surprisingly powerful (and even more energy efficient) through the likes of Intel's QuickSync and comparable technology on AMD, but if you don't have an integrated GPU and don't want to go out and buy a new CPU just to have one, an old GPU lying around or found on the second-hand market can do wonders. It's powerful enough for most video formats, can do more than just video, and won't consume a lot of extra power when it's just sitting there.

For Jellyfin transcoding, I've been immensely happy with it. Anything involving a local LLM is a gamble, but otherwise, it's more than powerful enough for practically anything I'd want to do on my home server. Even just being able to plug in a monitor and see what's going on when my server isn't booting has been a major plus. If you don't have integrated graphics, an old GPU can be a great, affordable investment.
