As my home network has grown and the number of services I self-host has increased, so has my household's dependence on those services. That's the trade-off of keeping my data firmly on devices I own rather than on other companies' servers in the cloud, and it comes with a host of added responsibilities. I'm okay with that, but I'm not the only person in my household, and outages are now my problem, so I need ways to keep those services highly available.

That's why I've now got a high availability Proxmox cluster, so those VMs have less chance of going down, but each service is still a single point of failure in terms of network connectivity. Then I ran into Keepalived, which uses the Virtual Router Redundancy Protocol (VRRP) to put multiple servers or services behind a single virtual IP address, and fails over to another one if the active one goes down.

It's a fantastic tool for building a more resilient network, and it works almost seamlessly. And for the record, yes, I could use HAProxy for similar failover and get load balancing too, but what keeps HAProxy from going down? Ah, right, another instance of Keepalived. I'll stick to the simple fixes that I know, at least at this stage.

5 For my DNS servers

Technitium is powerful, but until it has clustering, I need Keepalived as well

Self-hosting a DNS server is a rewarding process that gives your devices, network, and personal information some much-needed privacy at a time when we should expect anything we do on the internet to be tracked and monetized in some way. I'm not saying we should accept that this is how it should be, but it is what it is, and we should take preventative measures. My colleague Adam has two Pi-hole servers for this very reason, so that if one goes down, the other takes over and his network stays protected.

I do something similar, but with Technitium, a fully featured DNS server that you can self-host. Its roadmap has clustering on the horizon, so at some point I'll be able to connect several instances together with the built-in settings, but that day is in the future. Until then, I've got Keepalived on my NAS and on a Proxmox-powered mini PC, which lets me use one virtual IP (VIP) to reach both Technitium instances. The only extra steps to get it working were to bind Technitium to both the host IP and the VIP from Keepalived, add the VIP to the DNS Server Local End Points setting in Technitium, and add the following kernel setting on both servers:

net.ipv4.ip_nonlocal_bind=1

4 Because my smart home is important

Home Assistant shouldn't have a single point of failure

I use Home Assistant to automate my smart home and bind the multiple ecosystems I've collected over the years into a cohesive mass. This works fantastically well when the VM living on my NAS is reachable by the rest of my network, but sometimes the connection to that device drops. You wouldn't accept Google Home or HomeKit's servers going down for any appreciable length of time, and I sure don't accept my Home Assistant doing the same.

But with Keepalived, I don't have to. I can keep that VM as the main server that requests are routed to, and run a second Home Assistant (HA) instance as a Docker container on the device that hosts my Docker Compose stack, which also has a Keepalived component to check whether the VM is running. With a subscription to Home Assistant Cloud, I get backups, so I can easily keep parity between the two HA instances. The few things that the Docker container can't run aren't essential services for me, so it's okay if they're unavailable for short periods until I can reboot the NAS or troubleshoot the connectivity.
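To illustrate the kind of health check involved, here's a minimal Keepalived sketch for whichever node should normally hold the virtual IP. Treat every value as a placeholder rather than my real setup: the interface name `eth0`, the router ID, the `192.168.1.60` address, and the assumption that Home Assistant answers plain HTTP on port 8123 of the same machine all need adjusting to your own network.

```conf
# Hypothetical check: succeeds only if Home Assistant answers on port 8123.
vrrp_script chk_homeassistant {
    script "/usr/bin/curl -fsS -o /dev/null --max-time 2 http://127.0.0.1:8123/"
    interval 5    # run the check every 5 seconds
    fall 3        # 3 consecutive failures mark the check as failed
    rise 2        # 2 consecutive successes mark it as healthy again
}

vrrp_instance VI_HASS {
    state MASTER
    interface eth0            # assumed NIC name
    virtual_router_id 52      # must match on every node sharing this VIP
    priority 150              # the backup node uses a lower number
    advert_int 1
    virtual_ipaddress {
        192.168.1.60/24       # example VIP that clients point at
    }
    track_script {
        chk_homeassistant
    }
}
```

If the check fails three times in a row, this node drops into a fault state and releases the VIP, which lets the lower-priority backup instance claim it.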

3 So my reverse proxy never goes down

Access is everything in the home lab

My reverse proxy is the gateway to my stacks of self-hosted services, making it an essential part of my network architecture and a single point of failure if anything goes wrong. Most of my services are on Ubuntu or Debian-based servers, including Proxmox, because those are what I know, and I want to keep the learning to the network architecture that I'm less familiar with. Whether I'm using Nginx or HAProxy as the reverse proxy, both are easy to link up with Keepalived. All I need is three IP addresses (one for each server plus the shared virtual IP), and to remember to set the priority of the two VRRP instances so that one is higher than the other.
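As a rough sketch of what that pair of VRRP instances could look like, here's a minimal `keepalived.conf` for the primary proxy, with comments marking what changes on the secondary. The interface name, router ID, password, and `192.168.1.50` VIP are all made-up examples, and I'm assuming AH authentication only because the firewall rules in this section allow it through.

```conf
# Primary reverse proxy. On the secondary, use "state BACKUP"
# and a lower priority (for example 100); everything else stays the same.
vrrp_instance VI_PROXY {
    state MASTER
    interface eth0            # assumed NIC name
    virtual_router_id 51      # identical on both servers
    priority 150              # highest priority wins the VIP
    advert_int 1              # send an advertisement every second
    authentication {
        auth_type AH          # assumption, matching the AH firewall rule
        auth_pass changeme    # placeholder; only the first 8 characters are used
    }
    virtual_ipaddress {
        192.168.1.50/24       # example VIP that clients and DNS records point at
    }
}
```

Each server keeps its own address on `eth0`, and the VIP simply moves to whichever node is currently advertising the highest priority.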

$ sudo iptables -I INPUT -p 112 -d 224.0.0.18 -j ACCEPT

$ sudo iptables -I INPUT -p 51 -d 224.0.0.18 -j ACCEPT

The first time I set this up, everything looked correct, and I couldn't figure out what was going wrong. Then I found another tutorial that mentioned adding firewall rules to let VRRP (IP protocol 112) and AH (Authentication Header, IP protocol 51) traffic through, which is trivial in Ubuntu.

2 So my VPN never goes down

Having your services go down when you're at home is bad enough

Remote access to my network and home lab is essential to my architecture. But that carefully crafted chain of connections is useless if the server running OpenVPN goes down. Whenever I'm using OpenVPN, I have it running on two servers, each bound to its own IP and to the Keepalived VIP, so the secondary server takes over as the main one if the link to the primary goes down for any reason.

That means my VPN server is always available. And if the worst happens and two servers on my home network go down, I have bigger issues than being unable to log in remotely. I could do something similar with Pangolin, but I'd still have the same issue with the IP addresses Pangolin is mapped to going down. At least, unless I run Keepalived on all of those services as well. I think I'll eventually have synced app servers behind two HAProxy instances and Keepalived so that everything has the best chance of high uptime, but that's a way off while I plan future purchases.

1 To easily manage my Proxmox cluster

HA failover is too slow, and virtual IPs are clutch

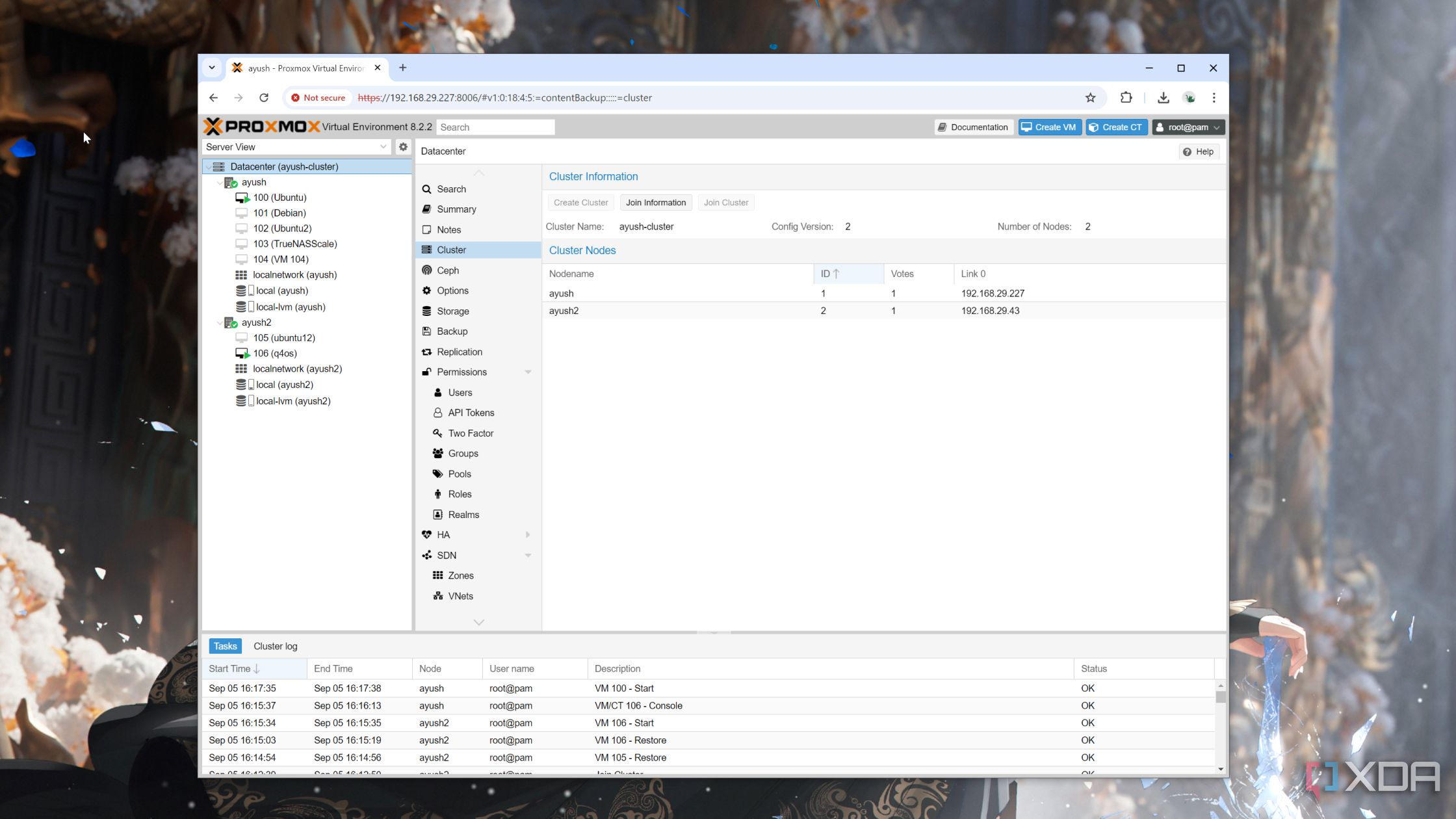

Setting up an HA Proxmox cluster is a fantastic way of ensuring your containers and VMs are always available to users, but what about when you need to do administration tasks on the Proxmox nodes? If one is down, you need to know which one so you can use one of the other nodes, and in my opinion, admin tasks should be as frictionless as possible.

Setting up Keepalived to access the cluster with a single virtual IP means less time wasted when I need to change something. It'll always log into the active node, which is nice and easy. It might only be a few seconds every time I have to log in, but that adds up over time, and I will be using this cluster for a long time.

I'd run Keepalived on everything (if I could)

I've also recently moved my firewall to OPNsense, and Keepalived doesn't quite work in that situation. That's only because OPNsense has CARP built in, so you can quickly make a cluster with failover, without any additional tools. If it didn't, I'd have to figure out how to set failover up, plus sync settings between the two firewalls. Ideally I'd love every service or server I run to have native clustering so that I didn't need additional installations, but Keepalived will do the job until then.
