Docker is for almost everyone, really. As an enthusiastic home lab user, I found it changed the way I install and use apps, without any guilt. That confidence only came after toiling over containers, which many claimed were easy to deploy and use. The gap existed because I took everyone's word for it and didn't pay attention to the basics. I got blinded by the lure of easy installation, better isolation, and portability. My run-ins with containers over the past few months made me realize there are things I wish I had known before using Docker.

6 A Docker container is not a Virtual Machine

They're different despite a few similarities

When I used Docker for the first time, I mistakenly thought a container was a virtual machine. Though the mechanism felt familiar, I discovered my blunder on a closer look. While a container runs in a sandboxed, isolated environment, it shares the host's kernel instead of booting its own operating system, and it uses only the resources its processes need. Compared with a VM running a full-blown OS, a container is lightweight, starts faster, and runs without interfering with other apps on the machine.

No wonder containers spin up in a few seconds, while VMs take a while. A container image bundles the app with its supported services and dependencies, so there's no need to add anything to it separately. That said, Docker isolates apps on top of a shared kernel, while a virtual machine virtualizes the hardware and runs a complete guest operating system. When I realized that, I calibrated my expectations accordingly.

5 Containers don’t always adhere to a one-size-fits-all approach

You can use them, but the architecture does matter

In my home lab, I mostly put containers on a Raspberry Pi to check what's new. It surprised me when the Obsidian container wouldn't run on the Pi. Investigating revealed that one of its listed dependencies (KasmVNC) wasn't working on the arm64 architecture. It reminded me of the infamous “works on my machine” problem, which I didn't expect, since Docker is all about consistency and reliability. However, I learned that not all containers are built the same, and the supported architecture does matter at times.

For example, I could run x86 containers on ARM-based computers only after setting up proper emulation. But that only works when the relevant container, with its dependencies and libraries, is built accordingly. That's why I no longer blindly use the latest tag in a container image's address.
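
To make that concrete, here is a minimal sketch of a Compose file that pins a version tag and asks for a specific architecture. The nginx image, the 1.27 tag, and the web service name are assumptions picked for illustration; swap in whatever image you actually run, and check its registry page for the architectures it publishes.

```yaml
services:
  web:
    # Pin a specific tag instead of "latest" so upgrades are a deliberate choice.
    image: nginx:1.27
    # Request the 64-bit ARM build explicitly (for example, a Raspberry Pi
    # running a 64-bit OS). Use linux/amd64 here only if emulation is
    # already set up on the host.
    platform: linux/arm64
```

With the platform spelled out, an image that doesn't ship an arm64 build tends to reveal itself when it is pulled, rather than after the container has already failed to start.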

4 More than one way to deploy containers

You can choose the geeky way or the convenient one

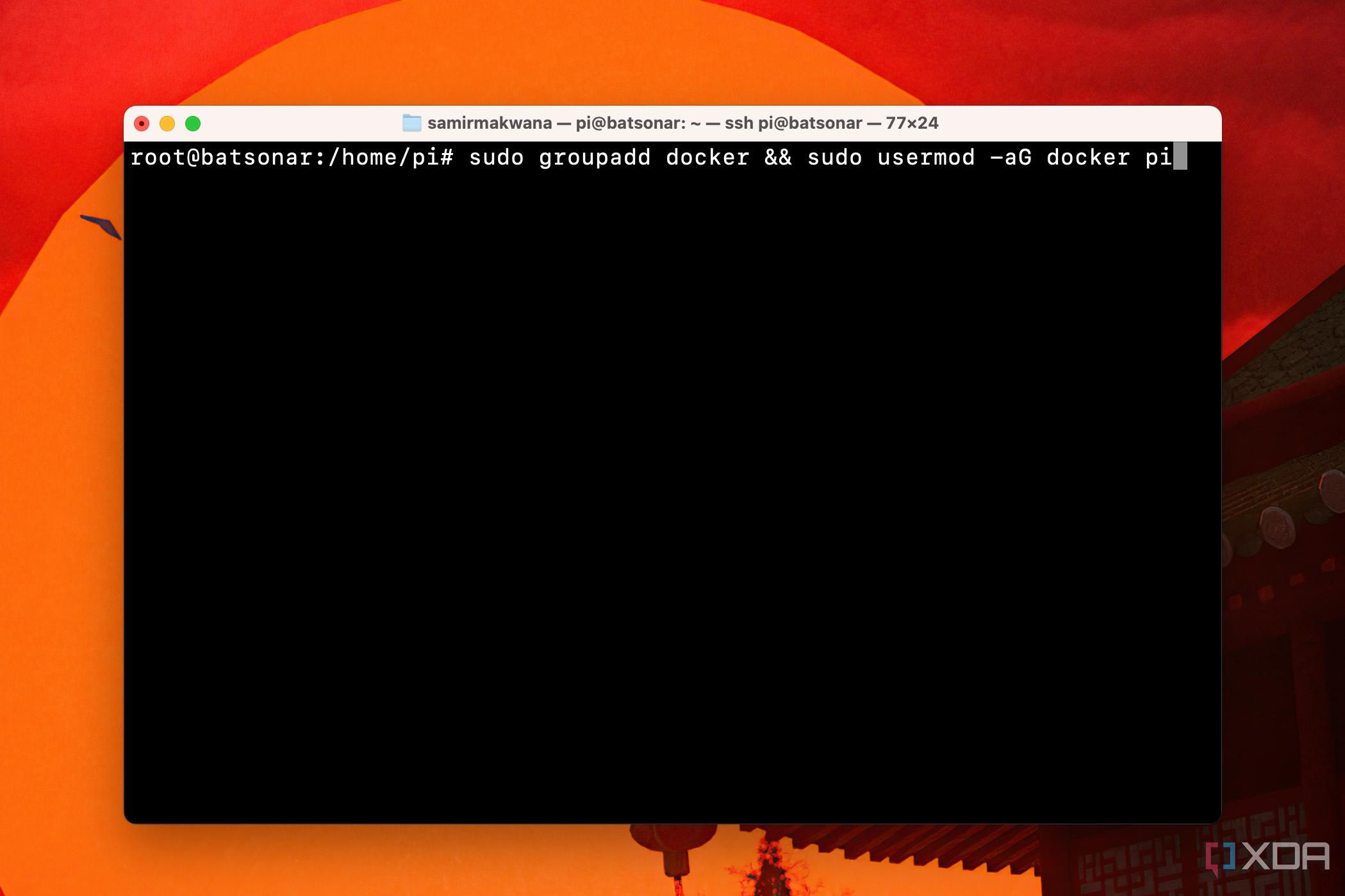

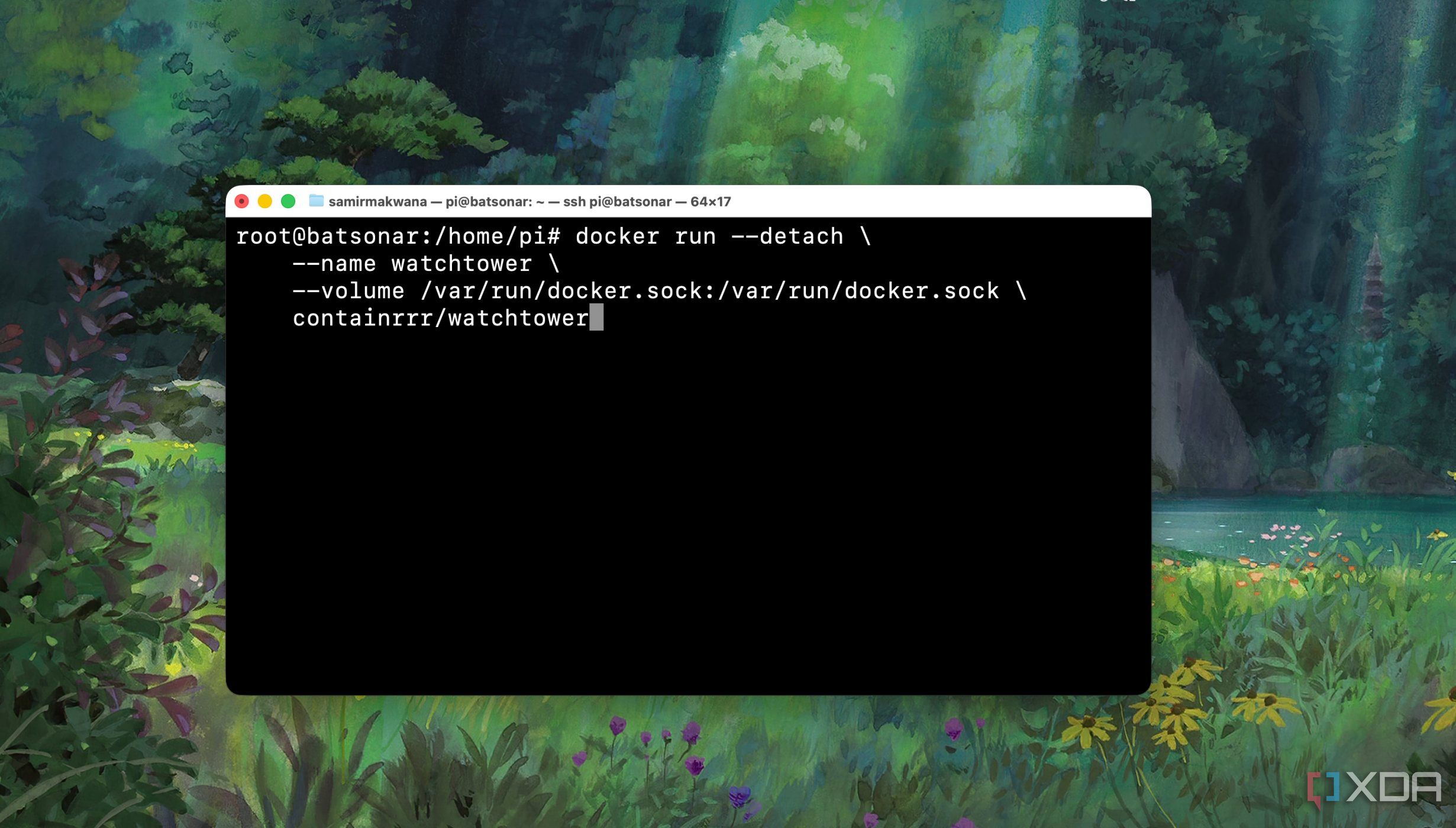

Docker commands conveniently let me run containers from the terminal, but I'm often left confused when nothing happens. That's because editing a long docker run command is a struggle, and I often goof up on the spacing and line breaks. Even when I used a text editor to personalize some configurations in commands, they'd often fail to execute.

That's why I feel more comfortable using Docker Compose, thanks to my practice of tinkering with YAML config files. With a text editor like Sublime Text, it doesn't take me long to populate a YAML file. Though Docker Compose is ideal for configuring and running several containers at a time, I also use it to configure single containers.
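
As a rough illustration, this is what a single container might look like in a Compose file, with the docker run command it roughly replaces shown as a comment. The nginx image, the web name, and host port 8080 are assumptions for the example, not values from my setup.

```yaml
# Roughly equivalent to:
#   docker run -d --name web --restart unless-stopped -p 8080:80 nginx:1.27
services:
  web:
    image: nginx:1.27
    container_name: web
    # Bring the container back after a crash or reboot, unless I stopped it myself.
    restart: unless-stopped
    ports:
      - "8080:80"   # host port 8080 -> container port 80
```

Saved as docker-compose.yml, it starts with docker compose up -d from the same folder, and every setting sits on its own line where it is easy to spot and change.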

3 Customizable values while deploying containers

Watch and adjust the code closely

Several projects on GitHub include Docker commands and Docker Compose files in their installation instructions. As a beginner, I would copy and try to run them as-is, only to be disappointed. Over time, I learned to customize certain values to match the purpose and nature of the container. For instance, defining the user and group for a particular container meant I could skip giving sudo privileges to that account.

In most cases, only the values before a colon in a script or command are customizable. In port and volume mappings, the left side refers to the host and the right side to the container. However, some values can be universally defined, like the time zone. Mapping the folders that store the container's data and configuration files is tricky. But they keep the data persistent even if the container crashes.
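
Here is a hedged sketch of which values are usually safe to change. The image name example/app:1.0, the container port 3000, the /config path, and the 1000:1000 IDs are all placeholders I made up for illustration; the right-hand values in a real file come from the project's own documentation.

```yaml
services:
  app:
    image: example/app:1.0      # placeholder image name, not a real project
    # Run the container's process as this UID:GID rather than root.
    # Running "id" on the host shows your own numbers; 1000:1000 is an assumption.
    user: "1000:1000"
    environment:
      # Many images honor TZ, but not all of them do.
      - TZ=Europe/London
    ports:
      # LEFT of the colon: host port, yours to change.
      # RIGHT of the colon: the port the app listens on inside the container.
      - "8080:3000"
    volumes:
      # LEFT: a folder on the host, yours to choose.
      # RIGHT: the path the image expects, so leave it as documented.
      - ./config:/config
```

Some images expect their own variables for the user and group instead of the user key, so it is worth checking the project's README before copying this pattern.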

2 Networking between containers can be tricky

Making containers talk to each other is tough

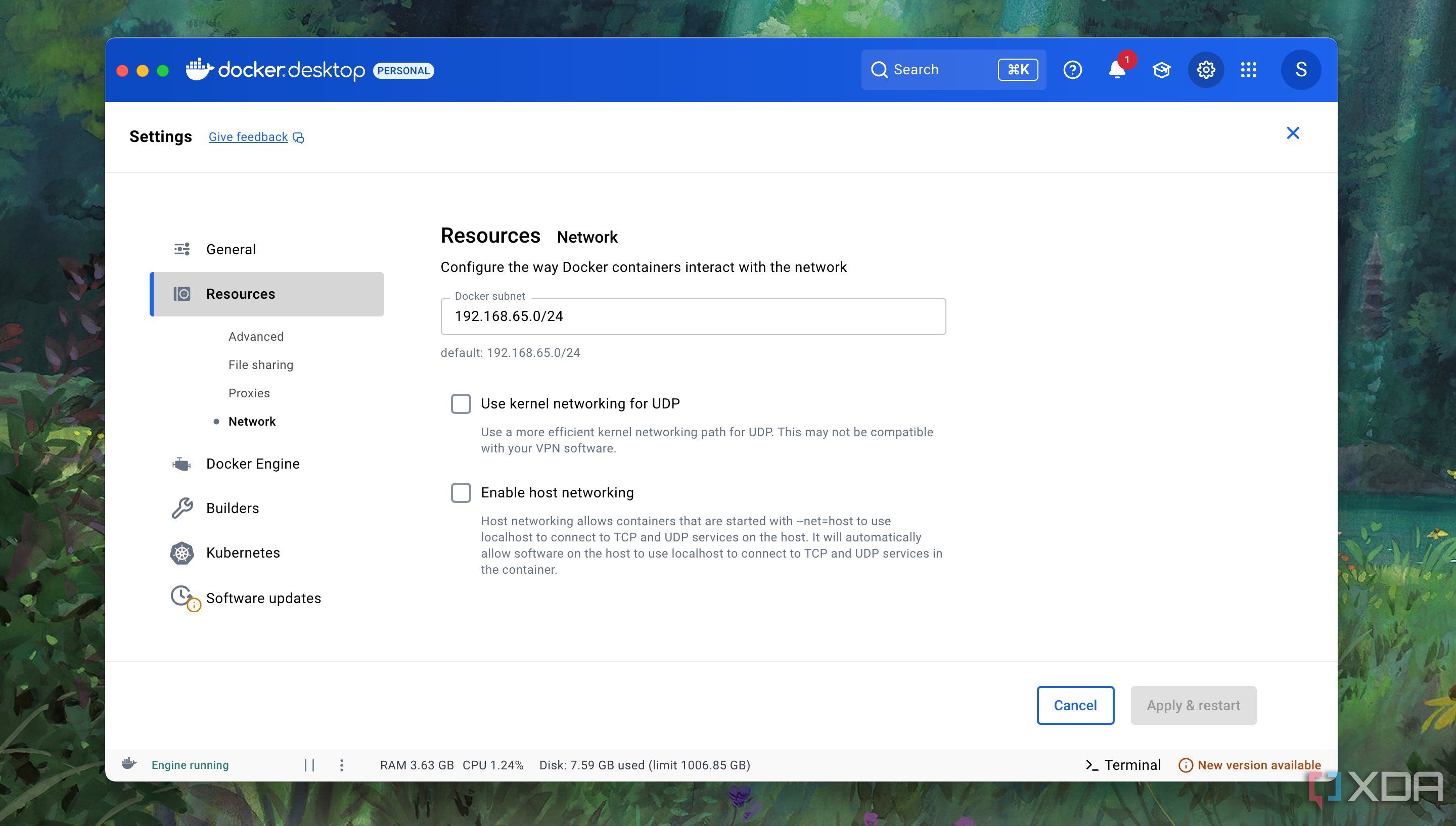

While deploying containers using the Docker command line, I assumed that they would automatically communicate with each other. But that's not the case. Each container deployed using the docker run command joins the default bridge network unless told otherwise. Those containers can talk to each other using IP addresses, but they cannot discover one another by name on the default bridge network.

To overcome this limitation, I began using a Docker Compose YAML file, which automatically connects its containers to a shared network. Additionally, it enables the discovery of services by name on that network. So, I create isolated networks for specific apps when I don't want to deal with IP addresses individually, mostly because they can change whenever the containers restart.
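
A minimal sketch of that setup, assuming a hypothetical app that reads its database host from a DB_HOST variable. The example/app:1.0 image, the DB_HOST name, and the backend network name are placeholders; postgres:16 and its POSTGRES_PASSWORD variable are used only as a familiar second service.

```yaml
services:
  app:
    image: example/app:1.0        # placeholder image name
    environment:
      # Hypothetical variable: the app reaches the database by its
      # service name ("db"), not by an IP address.
      - DB_HOST=db
    networks:
      - backend

  db:
    image: postgres:16
    environment:
      - POSTGRES_PASSWORD=change-me   # replace before real use
    networks:
      - backend

networks:
  # A user-defined network for this app only; containers attached to it
  # can resolve each other by service name.
  backend: {}
```

Compose creates a shared network even when no networks section is present, so the explicit backend entry here is mainly a way to keep one app's containers apart from another's.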

View, deploy, and manage containers easily

Docker Desktop is a delight on Windows, macOS, and Linux desktops. Managing my blog on a VPS (Virtual Private Server) made the headless approach a breeze. Naturally, that inspired me to use Docker from the command line on a Raspberry Pi or other SBCs. When I missed a graphical interface for the containers, I tried Podman and Portainer to manage them.

Juggling containers in my home lab gives me a taste of what it's like to use them in a professional, enterprise-like setup. Next, I'll dabble with Docker Swarm to manage my containers and ensure everything runs without a hiccup.

Minding the nuances can amplify containerizing thrills

Looking back, I realize that I learned these things the hard way, but you don't have to. Despite my limited coding skills, I eagerly self-host and try out a bunch of apps. If only I had known these containerization nuances, I would have saved a lot of time troubleshooting Docker containers. With help and suggestions from coworkers and forums, I now confidently use Docker on all possible platforms. Whether you're just starting out or still considering Docker, I'd firmly recommend focusing on the fundamentals to save time and run some of the best self-hosted apps as containers.
