Over the last couple of months, I’ve reviewed several Network-Attached Storage enclosures and tinkered with multiple NAS-centric distributions. As such, I keep a separate set of Seagate IronWolf drives and Crucial SSDs just so I can subject the storage enclosures and NAS operating systems to a series of tests. Unfortunately, this leaves me with few drives for my home lab experiments, so I’m often on the prowl for cheap deals on storage disks.

Despite my desire to grab budget-friendly drives, I’d steered clear of refurbished HDDs. After all, hard drives have a limited lifespan, and using a pre-owned drive in a NAS is akin to playing with fire. However, I recently saw a couple of refurbished drives retailing for dirt cheap on Amazon, and decided to bite the bullet.

Now that I’ve tested them and used them for over a week, I wouldn’t recommend choosing them for storing essential files. But they’re a surprisingly decent option for everyday file-sharing operations and are an inexpensive way to create redundant copies of backup data.

My HDD testing methodology

And choosing a PC for the tests

As someone who grew up in an age where malware was distributed like hot cakes, I took some precautions before sliding the drives into my NAS. Since I wasn’t keen on testing the drives on my daily driver, I brought my Ryzen 5 1600 system out of retirement and installed Debian on it. Afterward, I armed the OS with smartmontools and e2fsprogs so I could check the drives’ S.M.A.R.T. statistics and scan them for bad blocks.
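For anyone following along, a quick way to confirm the tooling is in place before touching the drives is a small shell check like the one below. It's only a sketch: missing_tools is a made-up helper name, and it assumes smartctl and badblocks are on the PATH of whichever user runs it (on Debian they typically sit in an sbin directory, so a regular user may not see them).

```shell
# missing_tools: print each named command that cannot be found on PATH.
# (Hypothetical helper name, not part of smartmontools or e2fsprogs.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}
```

Running missing_tools smartctl badblocks as root should print nothing once both packages are installed. If either name shows up, apt install smartmontools e2fsprogs should take care of it on Debian.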

As for the drives, I’d ordered two refurbished drives from the same company, though I had to wait a couple of days before grabbing the second one, as it had gone out of stock by the time I’d ordered the first HDD. When it arrived, the second drive looked entirely different from the first one, and I expected them to have different specifications. Unfortunately, this means I won't be able to use them in the same ZFS array on TrueNAS Scale.

And yes, I unplugged the Ethernet cable from the system before sliding the drives in. Call me paranoid if you will, but I refuse to expose the test PC to the Internet unless the disks have been thoroughly wiped clean.

The tests were more-or-less what you’d expect

Unfortunately, the two drives had completely different specs

Since I wanted to get the S.M.A.R.T. tests out of the way first, I ran the smartctl -i /dev/sda (sdb, for the second drive) command inside the terminal. As expected, the drives had entirely different specifications. The first one was a 5900 RPM Seagate Pipeline HDD, while the second was a Toshiba model that spins at a higher 7200 RPM. I also ran the smartctl -A /dev/sda and smartctl -A /dev/sdb commands to check their S.M.A.R.T. values.

Sure enough, the refurbished drives had seen their fair share of usage during their prime, with the second drive displaying high numbers in the total head writes field. Still, nothing looked too concerning, especially since the firm that refurbished the drives included a 2-year warranty period.
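The smartctl -A table is long, so it can help to boil it down to a handful of wear-related rows. The sketch below is one way to do that, not the exact process used here. The function names smart_summary and smart_report are hypothetical, the four attribute names are ones smartmontools commonly reports for SATA hard drives (a given model may not expose all of them), and the raw value is assumed to sit in the tenth column of the table.

```shell
# smart_summary: read `smartctl -A` output on stdin and print NAME=RAW_VALUE
# for a few attributes that hint at wear. (Hypothetical helper name.)
smart_summary() {
    awk '$1 ~ /^[0-9]+$/ &&
         $2 ~ /^(Reallocated_Sector_Ct|Power_On_Hours|Current_Pending_Sector|Offline_Uncorrectable)$/ {
             print $2 "=" $10
         }'
}

# smart_report: show identity info plus the summary for each device given.
# Needs root and smartmontools. (Hypothetical helper name.)
smart_report() {
    for dev in "$@"; do
        echo "== $dev"
        smartctl -i "$dev"
        smartctl -A "$dev" | smart_summary
    done
}
```

With both functions loaded in a root shell, smart_report /dev/sda /dev/sdb would print each drive's model details followed by the shortlisted attributes. Non-zero reallocated or pending sector counts are the ones worth a second look.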

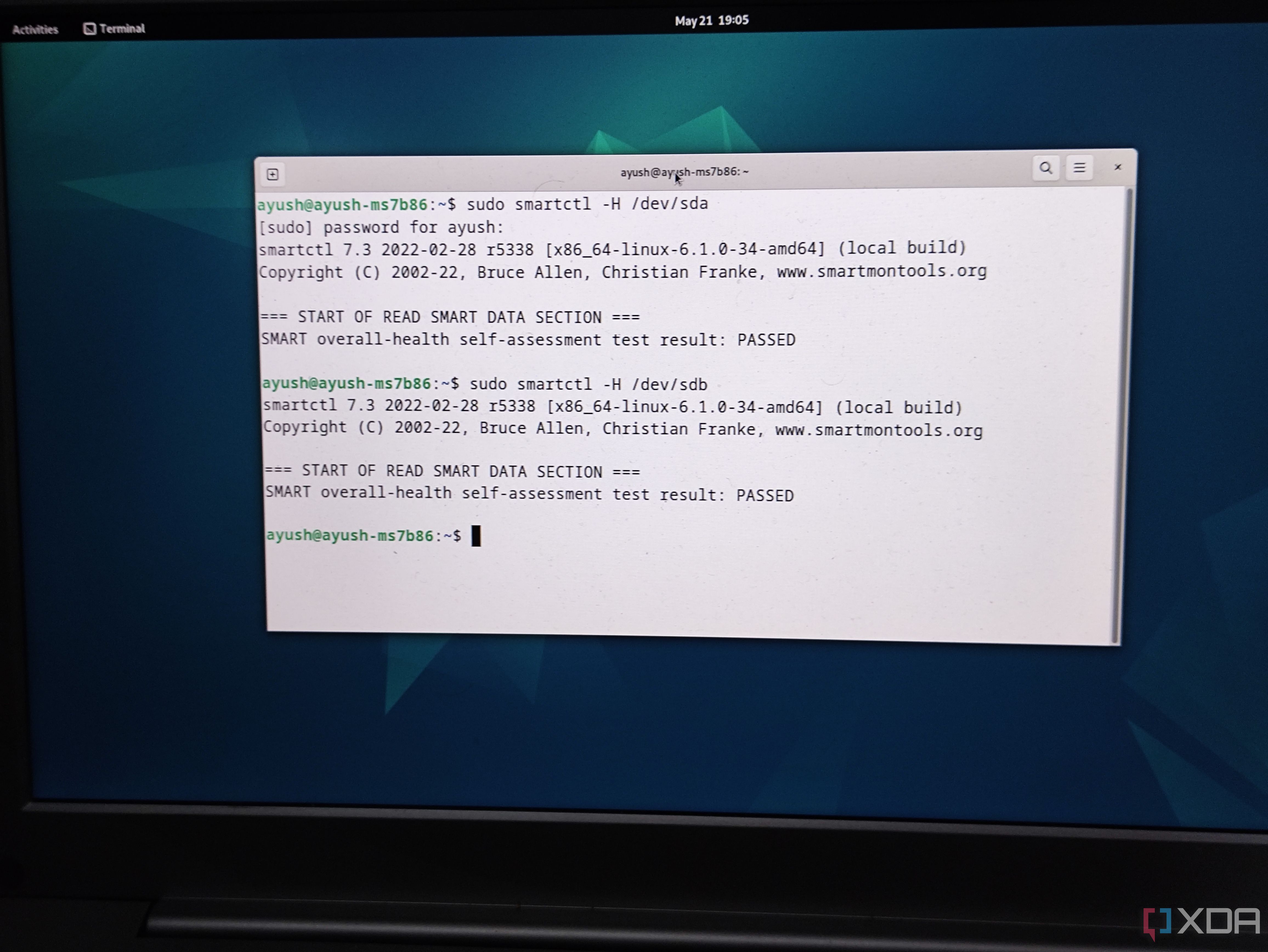

But the skeptic inside me wouldn’t rest just yet. So, I ran a long disk health check using the smartctl -t long /dev/sda and smartctl -t long /dev/sdb commands. Thanks to its 7200 RPM spindle speed, the Toshiba drive was fully scanned in an hour, while the 5900 RPM Seagate HDD took almost twice as long. Running the smartctl -H command on each drive revealed that both were healthy enough to be used in my NAS, though I had one last test I wanted to run.
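The same two steps can be wrapped into a pair of small shell functions, which is handy when more than two drives are on the bench. Treat this as a rough sketch: start_long_tests, health_passed, and check_health are hypothetical names, and the PASSED string it looks for is the one smartctl -H prints for ATA/SATA drives, so SAS or NVMe disks would need a different match.

```shell
# start_long_tests: kick off the extended self-test on each device given.
# The test runs inside the drive, so the command returns right away.
start_long_tests() {
    for dev in "$@"; do
        smartctl -t long "$dev"
    done
}

# health_passed: succeed if `smartctl -H` output on stdin reports PASSED.
health_passed() {
    grep -q 'overall-health self-assessment test result: PASSED'
}

# check_health: print a one-line verdict per device. Needs root.
check_health() {
    for dev in "$@"; do
        if smartctl -H "$dev" | health_passed; then
            echo "$dev: PASSED"
        else
            echo "$dev: needs a closer look"
        fi
    done
}
```

Once the self-tests have finished, smartctl -l selftest /dev/sda lists their results, and check_health /dev/sda /dev/sdb gives the overall verdict for both drives.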

Since bad blocks are quite common in used drives, I put them through the grinder by executing the badblocks -b 4096 -c 65535 -wsv /dev/sda > $disk.log command. Keep in mind that the -w flag runs a destructive write test, so it also erases whatever was on the disk. Once again, the 7200 RPM Toshiba drive completed the test long before its slower companion. But to my surprise, both drives displayed zero errors throughout the process.
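The $disk.log in that command relies on a shell variable that has to be set beforehand. One way to get a per-drive log without it is sketched below, using hypothetical helper names (badblocks_log_name and run_badblocks). It also swaps the output redirection for badblocks' own -o option, which writes the list of bad blocks to a file, so it is a variation on the command above rather than a copy of it.

```shell
# badblocks_log_name: derive a log file name from a device path,
# e.g. /dev/sda becomes sda.log. (Hypothetical helper name.)
badblocks_log_name() {
    printf '%s.log\n' "$(basename "$1")"
}

# run_badblocks: destructive write-mode scan of a single device.
# WARNING: -w overwrites every block, so all data on the device is lost.
run_badblocks() {
    dev=$1
    log=$(badblocks_log_name "$dev")
    # -s shows progress, -v is verbose, -o saves the bad-block list to $log.
    badblocks -b 4096 -c 65535 -wsv -o "$log" "$dev"
}
```

Calling run_badblocks /dev/sda as root would scan the first drive and leave any bad block numbers in sda.log. An empty log after a completed run means no bad blocks were found.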

Afterward, I slotted them into my TrueNAS Scale system, created a separate ZFS pool for each drive, and ran some tests via the Data Protection tab, which they passed handily. For the final round of tests, I slotted them into my main system and ran CrystalDiskInfo (just in case I missed some metrics in the admittedly hard-to-read Linux terminal) and CrystalDiskMark tests on the drives.

Picking the right use case for the drives

After all, I don’t want to risk losing important data

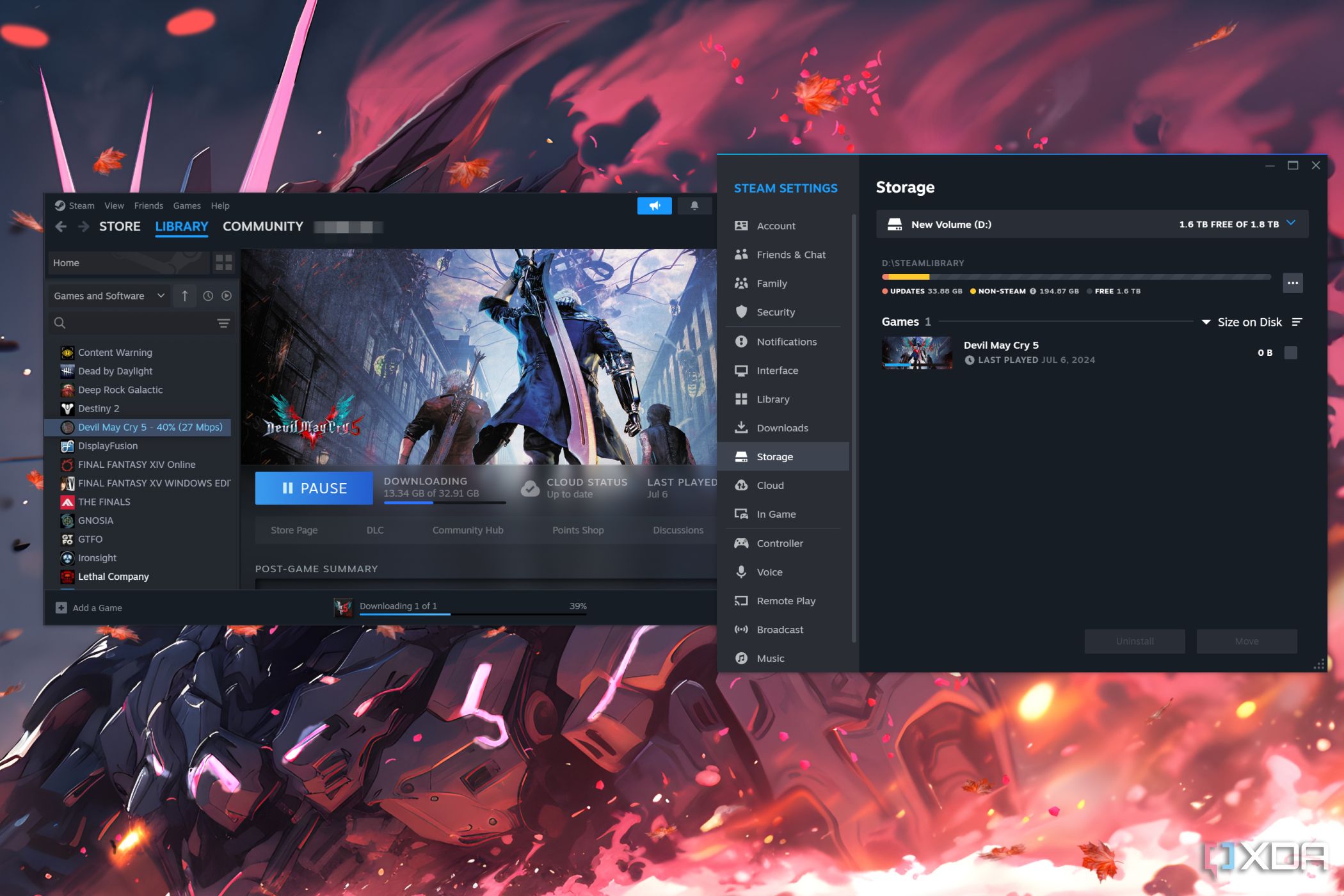

Once I’d confirmed the drives were operational, it was time to test the HDDs in some real-world workloads. For the Seagate drive, I figured I should use it in a file-sharing SMB share that also doubles as a secondary backup (as in, a backup of a backup) for my VirtualBox VMs. Meanwhile, I used the 7200 RPM drive to store some of the lesser-played games from my Steam library.

And well, here we are, two weeks after I began this experiment. So far, the drives have worked pretty well, and if I didn’t know any better, I wouldn’t have guessed they were refurbished models.

Truth be told, I wasn’t expecting this project to turn out so well, and had expected at least one of the drives to throw checksum errors by now. Nevertheless, I would never really use them as the primary HDDs in my ZFS pool, even with a parity-based RAID setup in place. But these cost-effective drives are bound to be useful in my DIY projects. For instance, I could pair them with my Raspberry Pi Frigate server to store surveillance footage. A file-sharing server isn’t a bad idea either. Since I often work with multiple devices, I could create a separate partition on the Toshiba drive and use it to store the ISO files in my PXE server.
